Robot Applications

Copyright © 2014 Dr. Korondi Péter, Halas János, Dr. Samu Krisztián, Bojtos Attila, Dr. Tamás Péter

This course material was prepared within the framework of the project TÁMOP-4.1.2.A/1-11/1-2011-0042, “Mechatronics Engineering MSc curriculum development”. The curriculum development was supported by the European Union and co-financed by the European Social Fund.

Reviewed by: Dr. Husi Géza

Published by: BME MOGI

Edited by: BME MOGI

ISBN 978-963-313-136-7

2014

- 1. Introduction: Trends in robotics

- 2. Robot middleware

- 3. Universal robot controller

- 4. Internet-based Telemanipulation

- 4.1. Abstract

- 4.2. Introduction

- 4.3. General approach of telemanipulation

- 4.4. Master devices as haptic interfaces

- 4.5. Animation of the operator’s hand wearing the sensor glove

- 4.6. Overview of control modes

- 4.6.1. Basic Architectures

- 4.6.2. Nonlinear scaling (Virtual coupling impedance)

- 4.6.3. Time delay compensation of internet based telemanipulation

- 4.6.4. Friction compensation for master devices

- 4.7. A complete application example: A handshake via Internet

- 4.8. Conclusions for telemanipulation

- 4.9. References for telemanipulation

- 5. Holonomic based robot for behavioral research

- 6. Fuzzy automaton for describing ethological functions

- 8. Models of Friction

- 9. PCI universal motion control system

- 10. PCI CARD – Specifications

- 10.1. Pin-outs and electrical characteristics

- 10.2. Mechanical dimensions

- 10.3. Connecting servo modules

- 10.4. Axis interface modules

- 10.4.1. Typical servo configurations

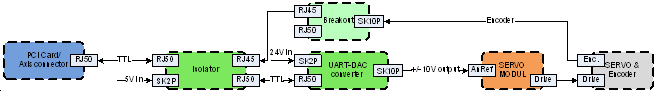

- 10.4.1.1. Analogue system with encoder feedback

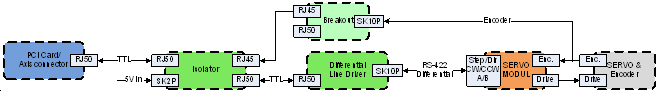

- 10.4.1.2. Incremental digital system with encoder feedback and differential output

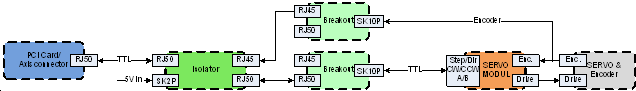

- 10.4.1.3. Incremental digital system with encoder feedback and TTL output

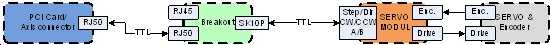

- 10.4.1.4. Incremental digital system with differential output

- 10.4.1.5. Incremental digital system with TTL output

- 10.4.1.6. Absolute digital (CAN based) system

- 10.4.1.7. Absolute digital (CAN based) system with conventional (A/B/I) encoder feedback

- 10.4.2. AXIS – Optical Isolator

- 10.4.3. AXIS – DAC (Digital-to-Analogue Converter)

- 10.4.4. AXIS – Differential breakout

- 10.4.5. AXIS – Breakout

- 12. HAL settings

- 13. RS485 modules

- References

- 1.1. Robot industry market projections [1]

- 1.2. Flexibility factors

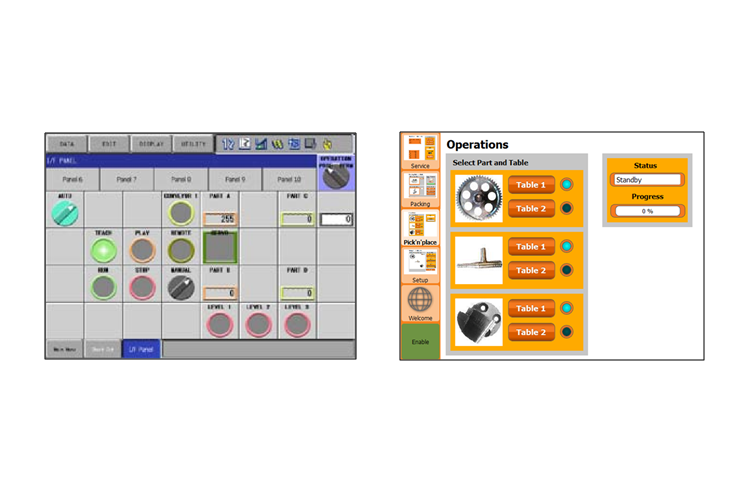

- 1.3. Traditional (upper) and flexible (lower) user interface for industrial robots

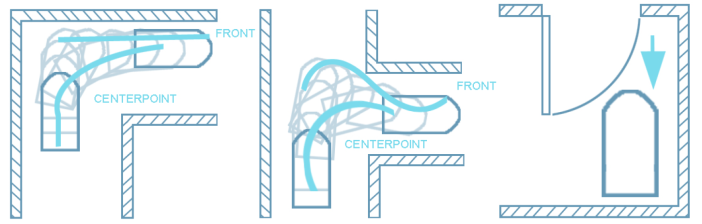

- 1.4. Steered vehicle path planning

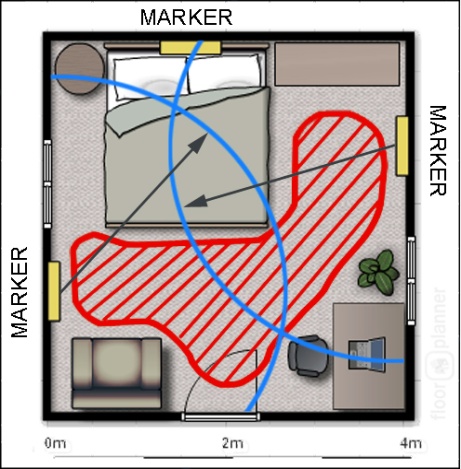

- 1.5. Marker localisation concept

- 1.6. QR code

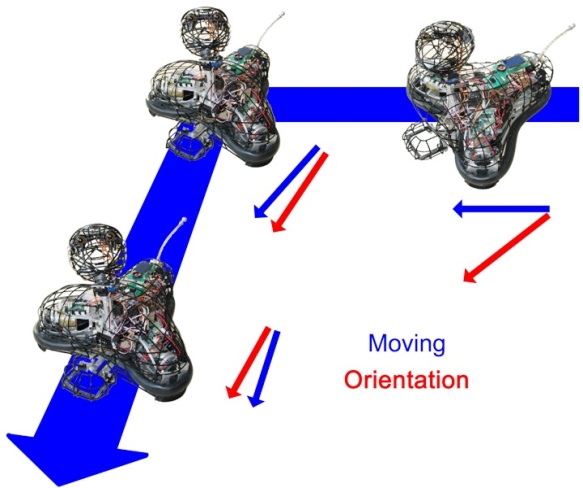

- 1.7. Omnidirectional movement

- 1.8. Aesthetic markers, [10]

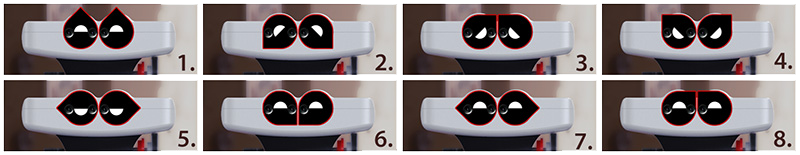

- 1.9. Robot eye concept

- 1.10. Ethon robots and the aesthetic marker concept

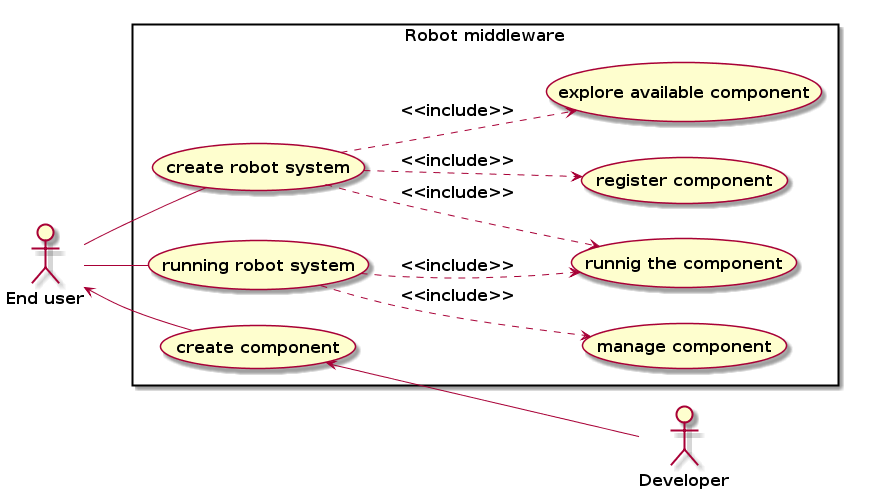

- 2.1. Main use cases of robot middleware

- 2.2. The graphical interface of RTC Builder

- 2.3. The architecture of RT component

- 2.4. The graphical interface of the System Editor of the OpenRTM-aist

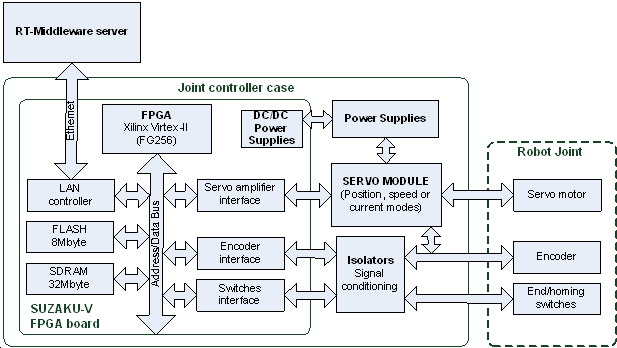

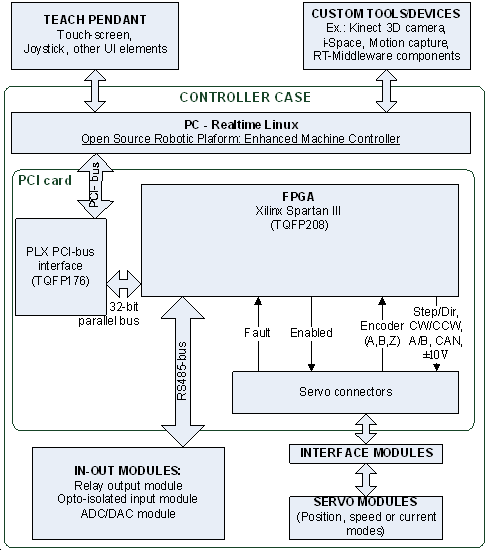

- 3.1. The block diagram of the joint controller RTC

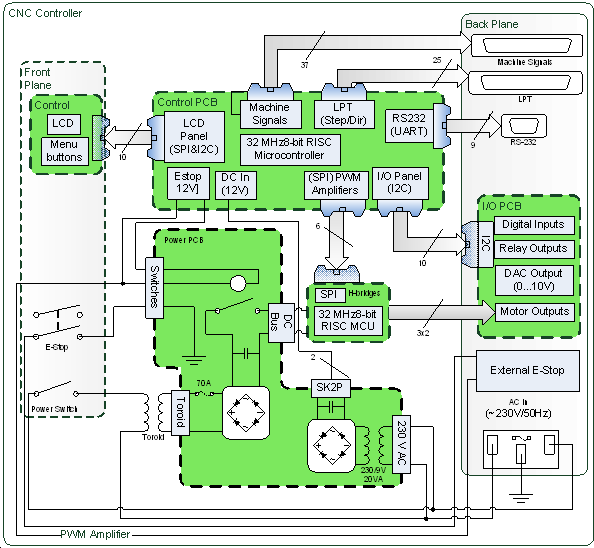

- 3.2. Block diagram of the 3 axis CNC controller

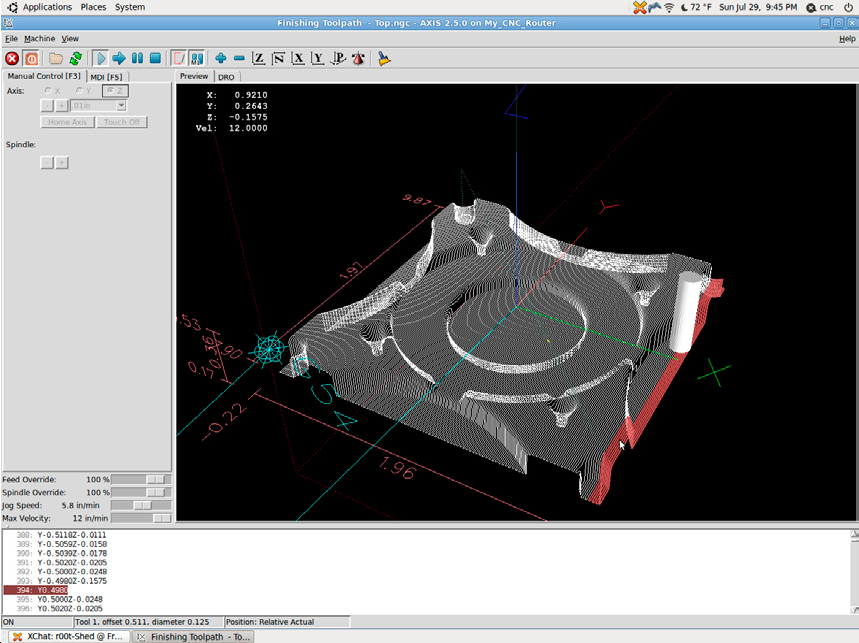

- 3.3. Default (a) graphical user interface of LinuxCNC software system

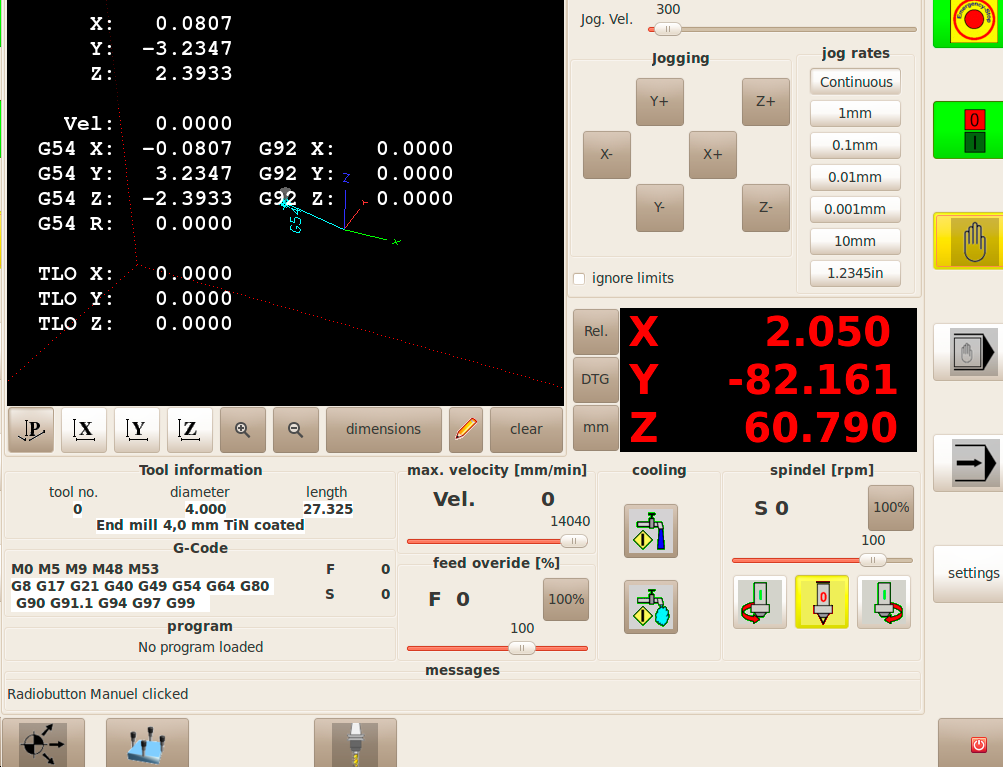

- 3.4. Customized (b) graphical user interface of LinuxCNC software system

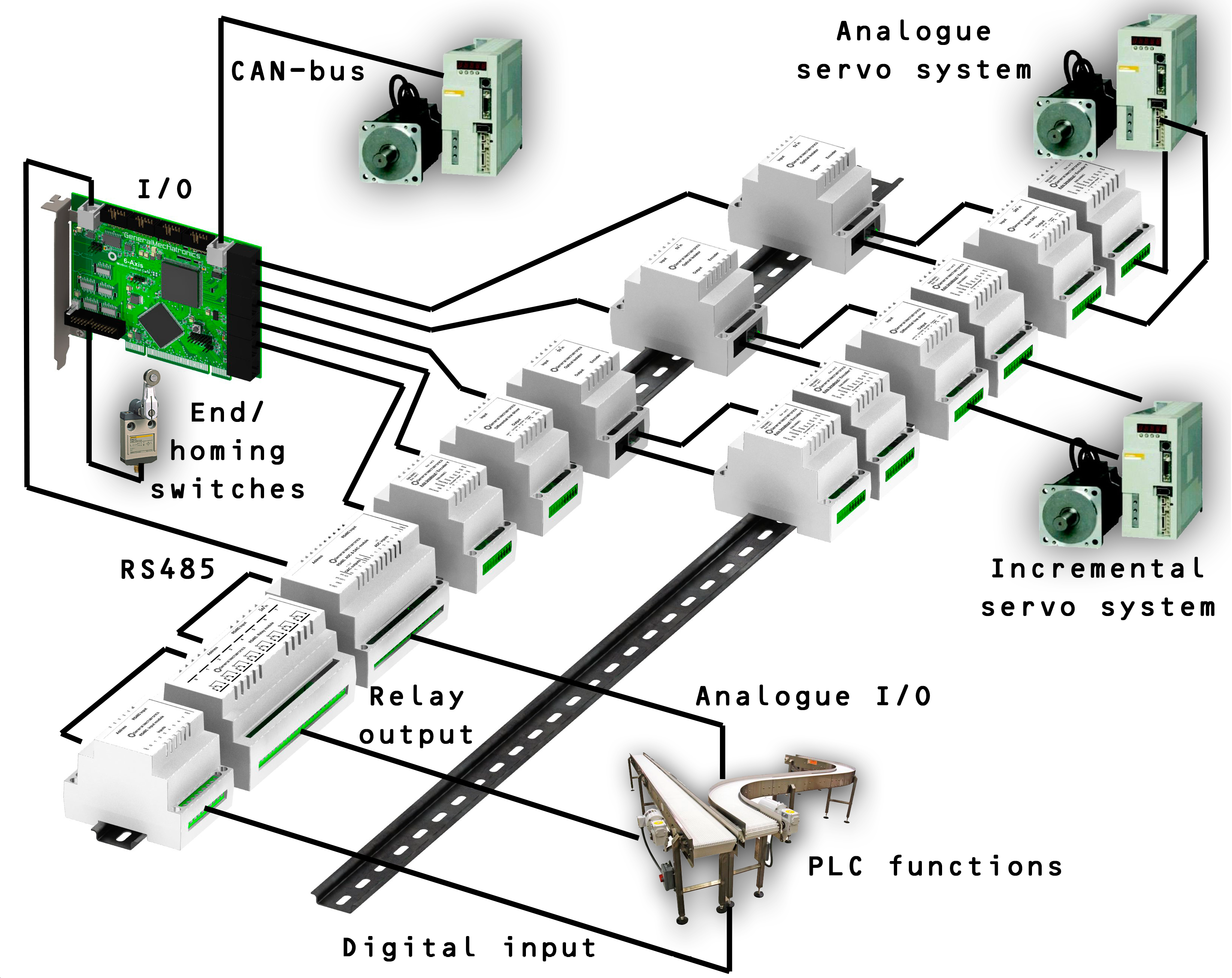

- 3.5. (a) Typical system layout of the LinuxCNC based motion controller

- 3.6. (b) Typical system layout of the LinuxCNC based motion controller

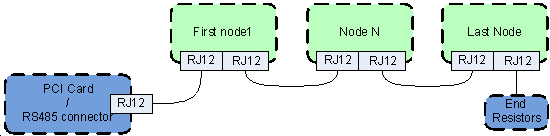

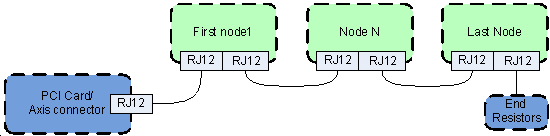

- 3.7. RS485 expansion (a) bus

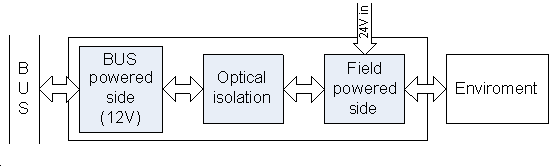

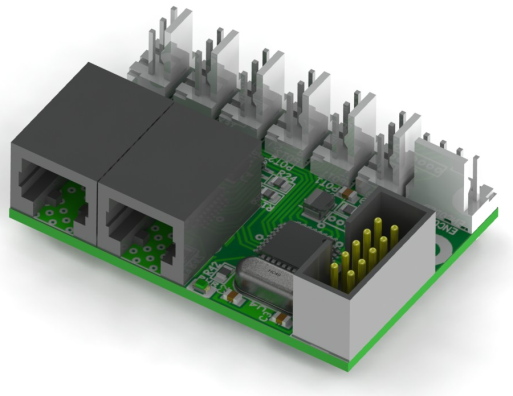

- 3.8. RS485 expansion (b) module concepts

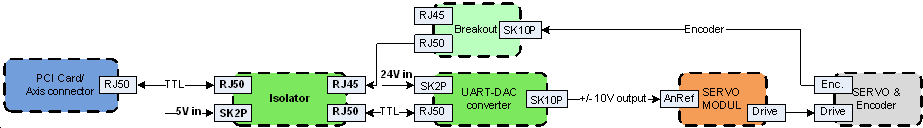

- 3.9. Examples of (a) analogue incremental servo interface

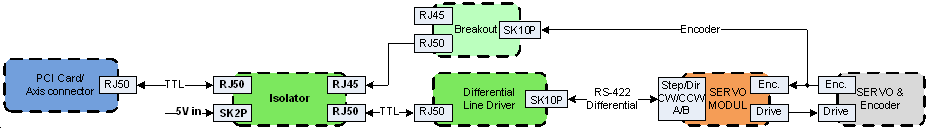

- 3.10. Examples of (b) differential incremental servo interface

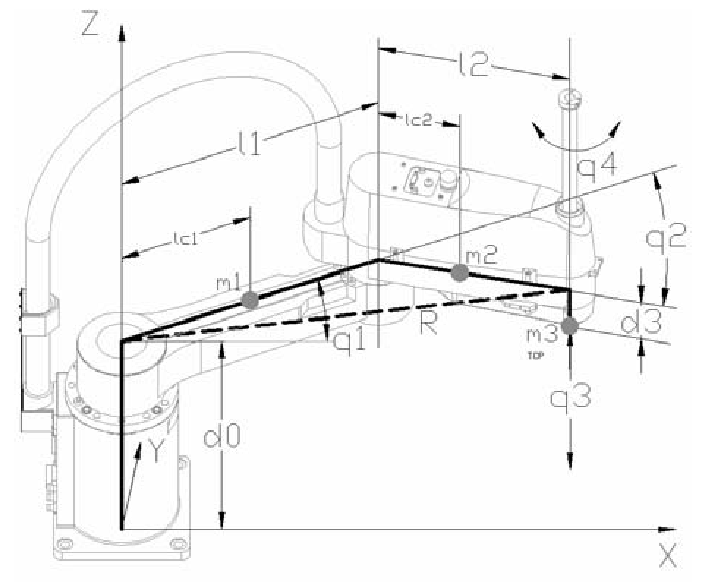

- 3.11. Mechanical drawing of the Adept 604-S SCARA robot. m1, m2, m3 are the masses; l1, l2, d0, d3 are the lengths; q1, q2, q3, q4 are the angles of the corresponding joints. (These data are needed only for calculating the robot dynamics; lc1 and lc2 are the positions of the masses on joint 1 and joint 2, respectively.)

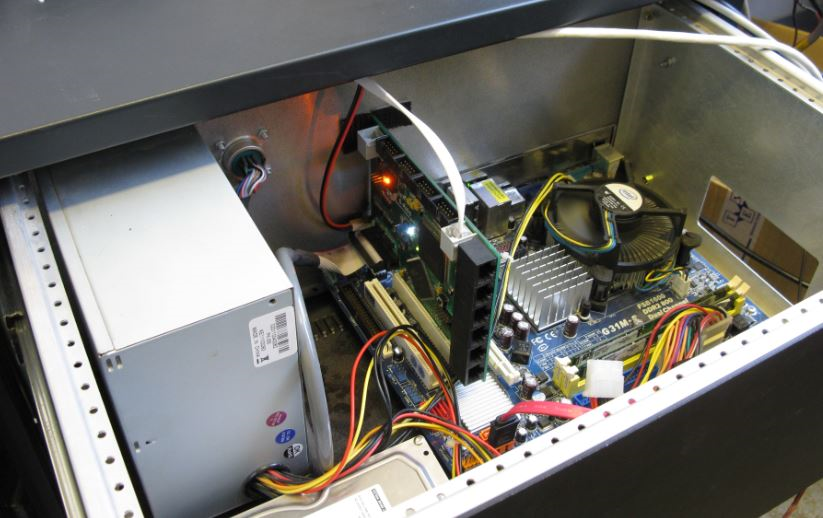

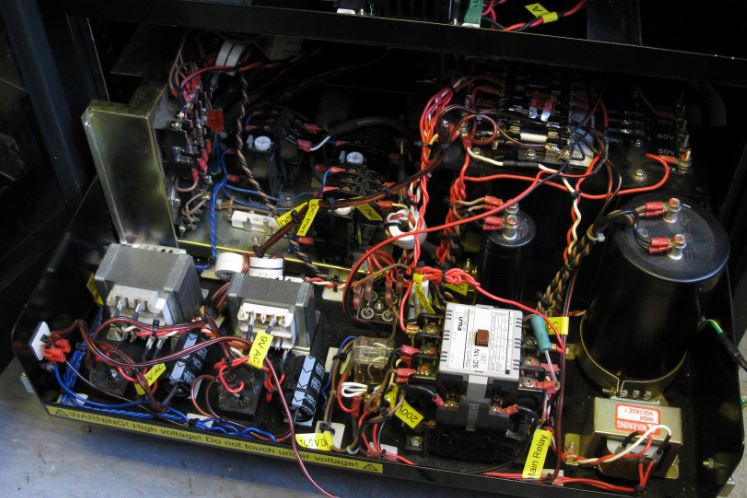

- 3.12. Shows (a) control of the modular controller

- 3.13. Shows (b) power amplifier of the modular controller

- 3.14. Shows (c) power electronics shelves of the modular controller

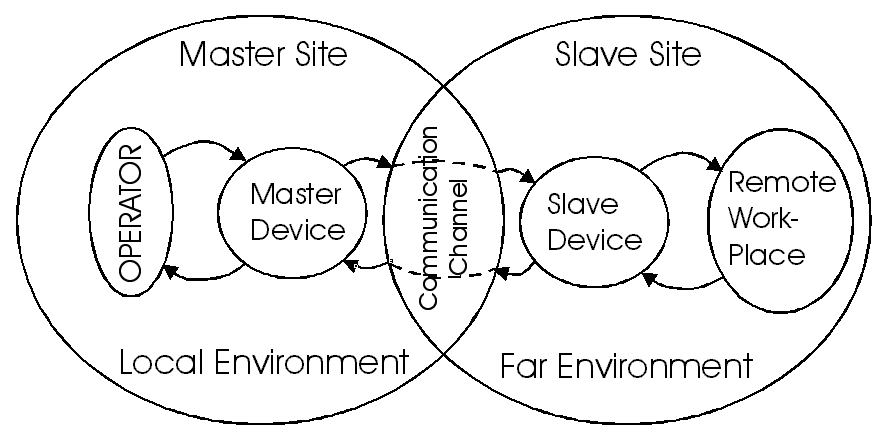

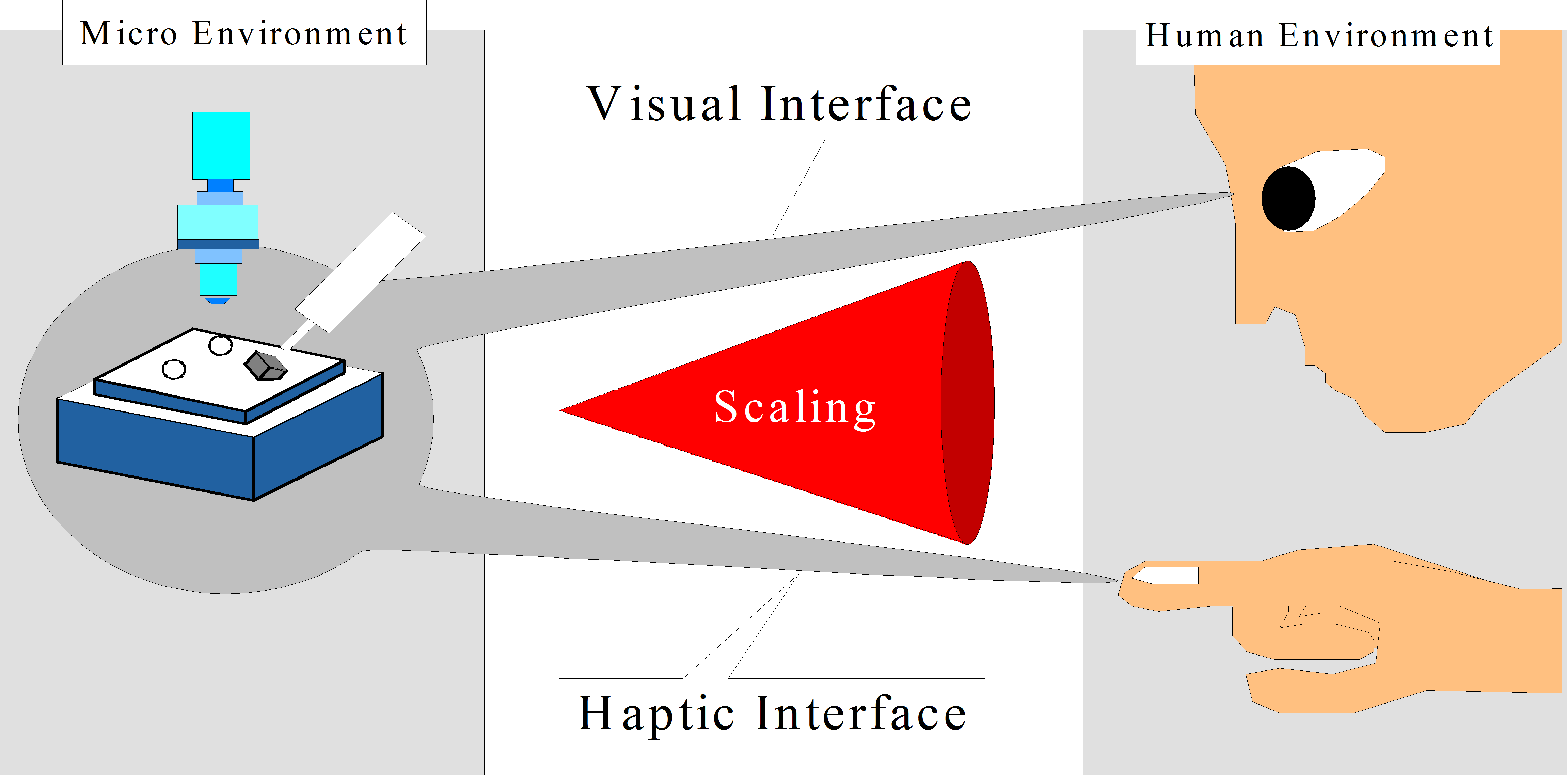

- 4.1. Information streams of the Telemanipulation (adapted from [3])

- 4.2. General concept of the telemanipulation

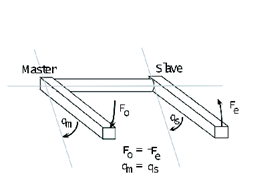

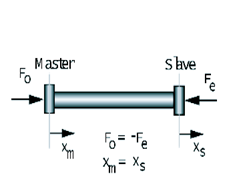

- 4.3. Ideal Telepresence systems: (a) Revolute motion manipulation, (b) Linear motion manipulation

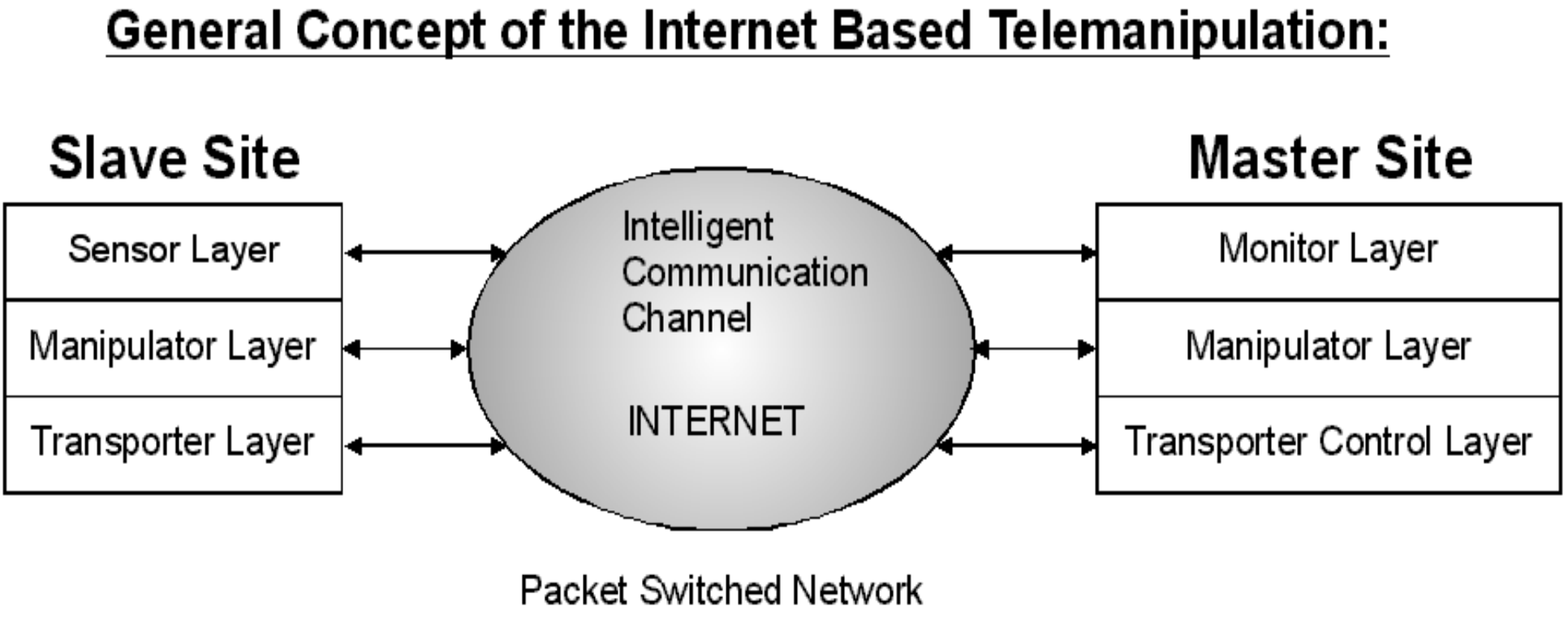

- 4.4. Layer definition for the general concept of the Internet-based Telemanipulation.

- 4.5. Sensor Layer definition for the general concept of the Internet-based Telemanipulation.

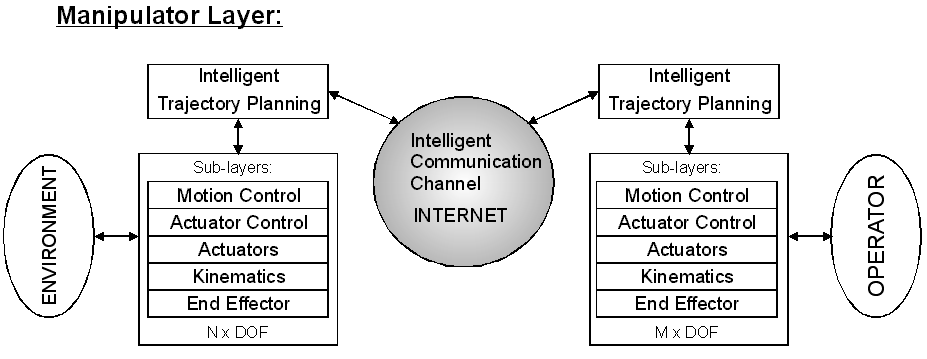

- 4.6. Manipulator Layer definition for the general concept of the Internet-based Telemanipulation.

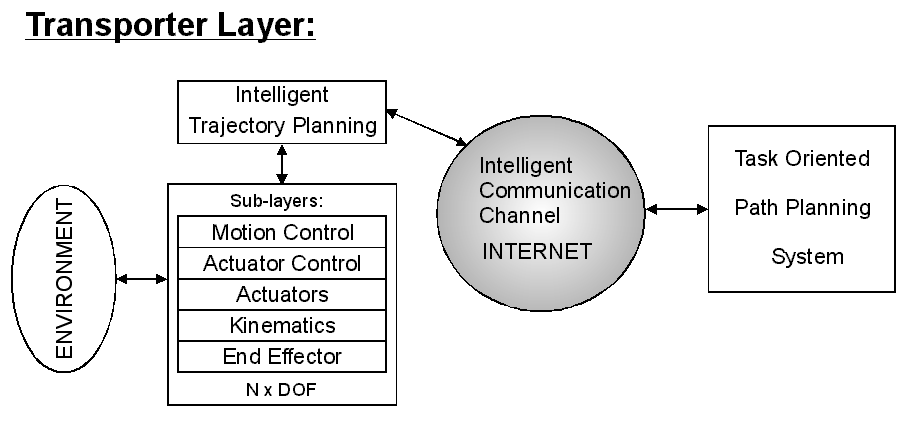

- 4.7. Layer definition for the general concept of the Internet-based Telemanipulation

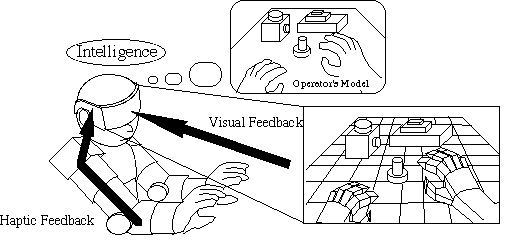

- 4.8. Telemanipulation in the virtual reality

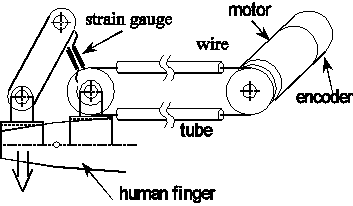

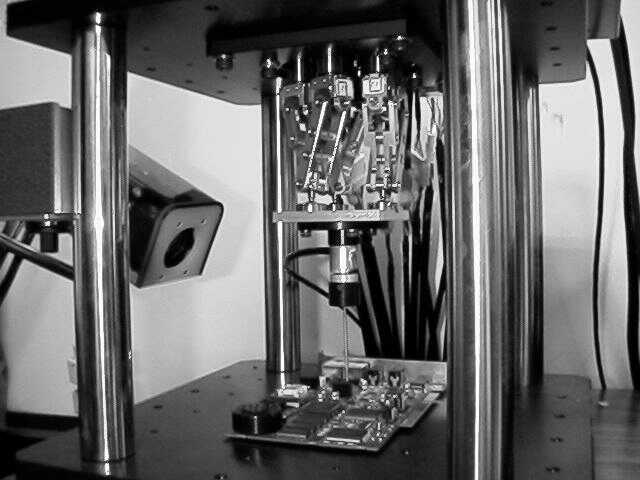

- 4.9. Micro/nano teleoperation system

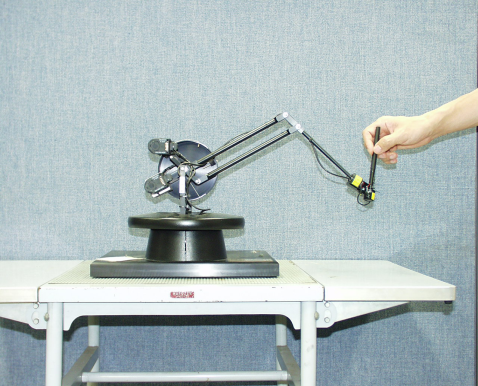

- 4.10. A point type master device

- 4.11. An arm type master device

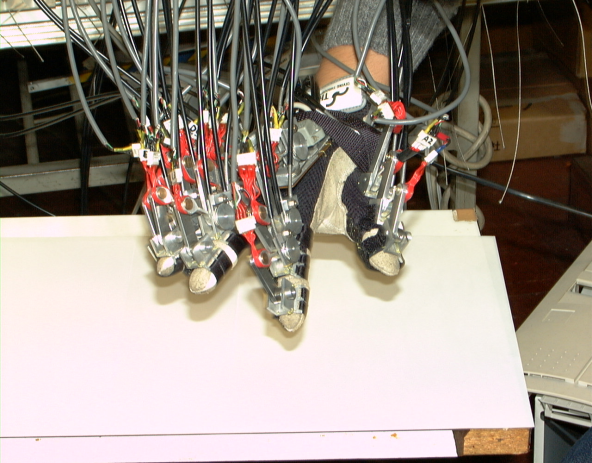

- 4.12. A glove type master device

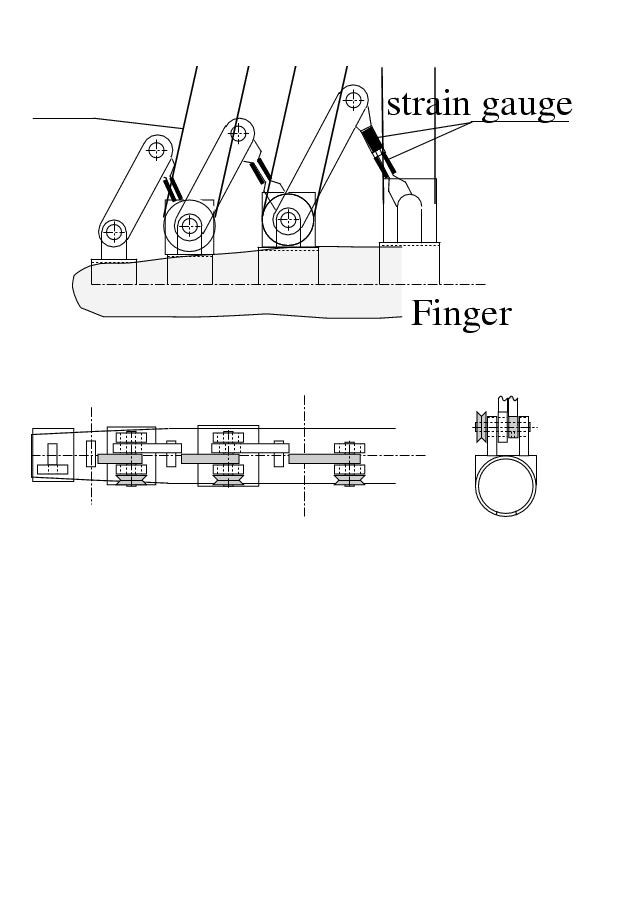

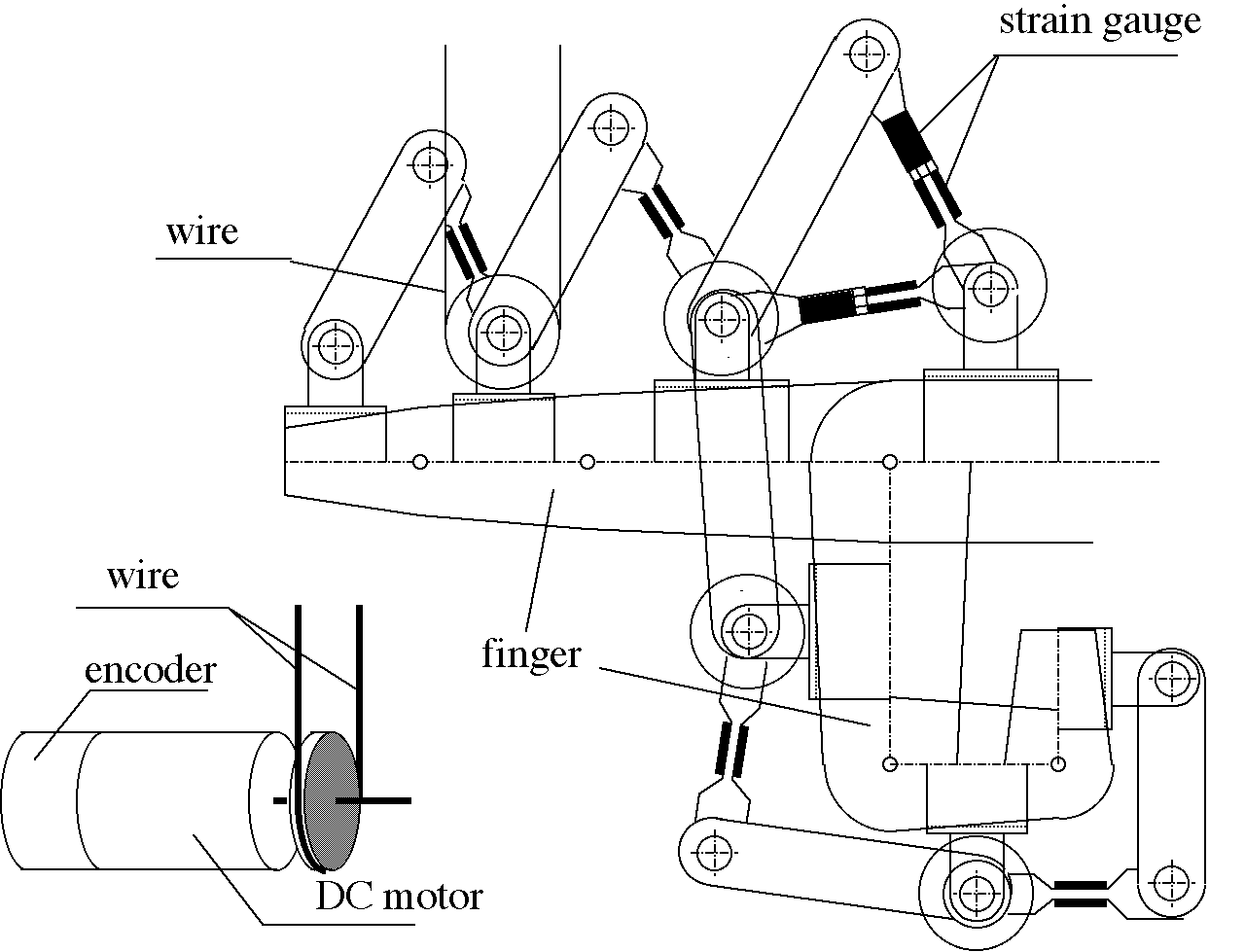

- 4.13. Mechanical structure of the sensor glove

- 4.14. Finger movement in the glove

- 4.15. Structure of one D.O.F. of the Sensor Glove

- 4.16. Concept of the Micro Telemanipulation

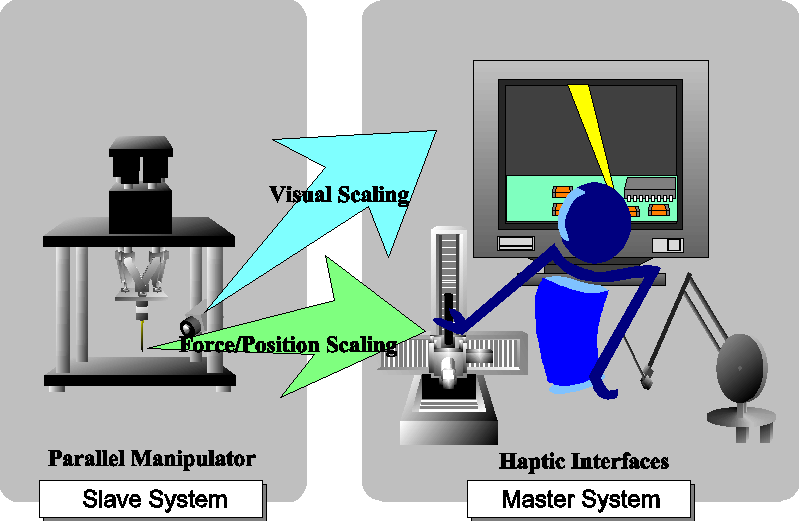

- 4.17. The photo of the Master Device

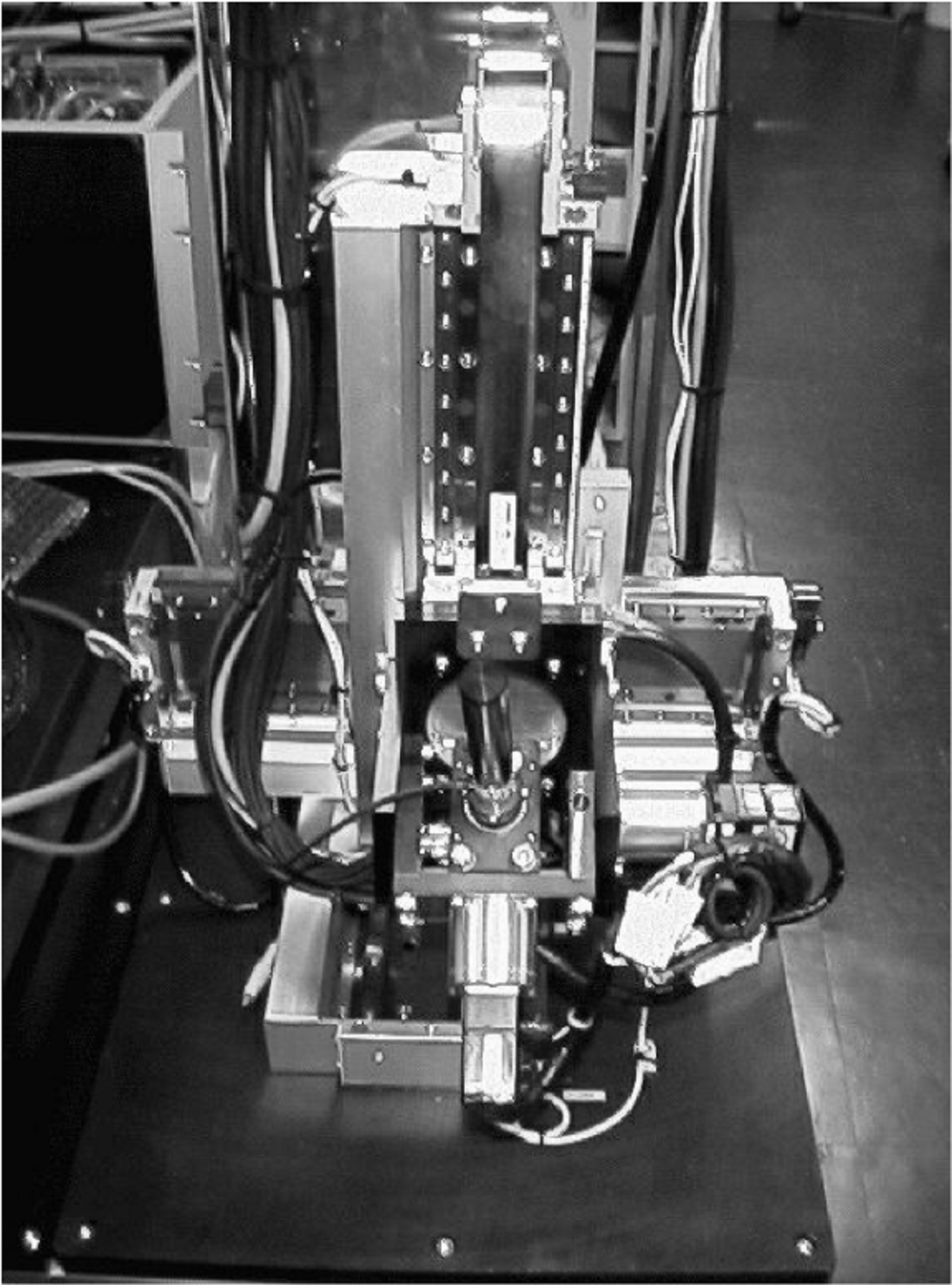

- 4.18. The photo of the Slave Device

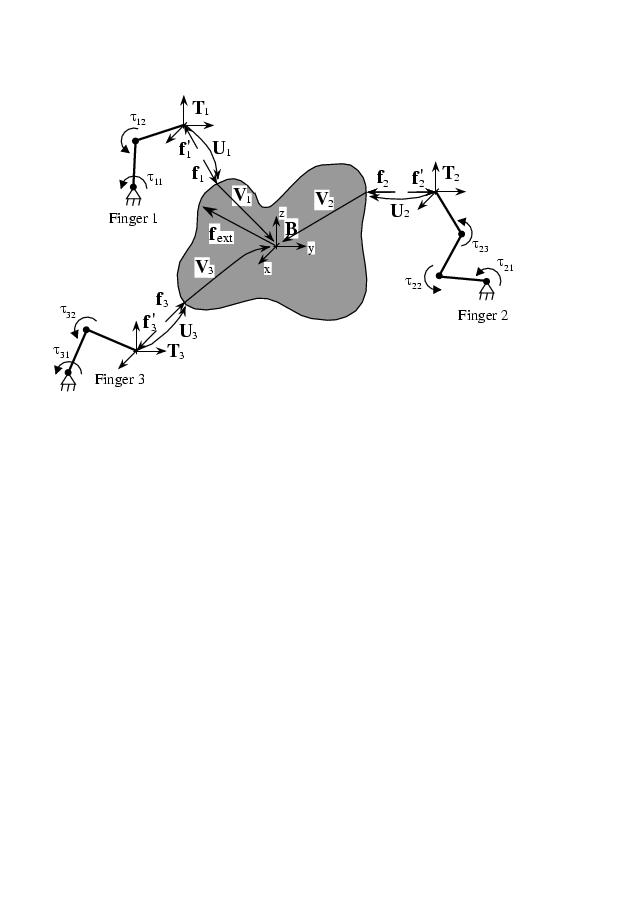

- 4.19. Object Grasped by 3 Fingers

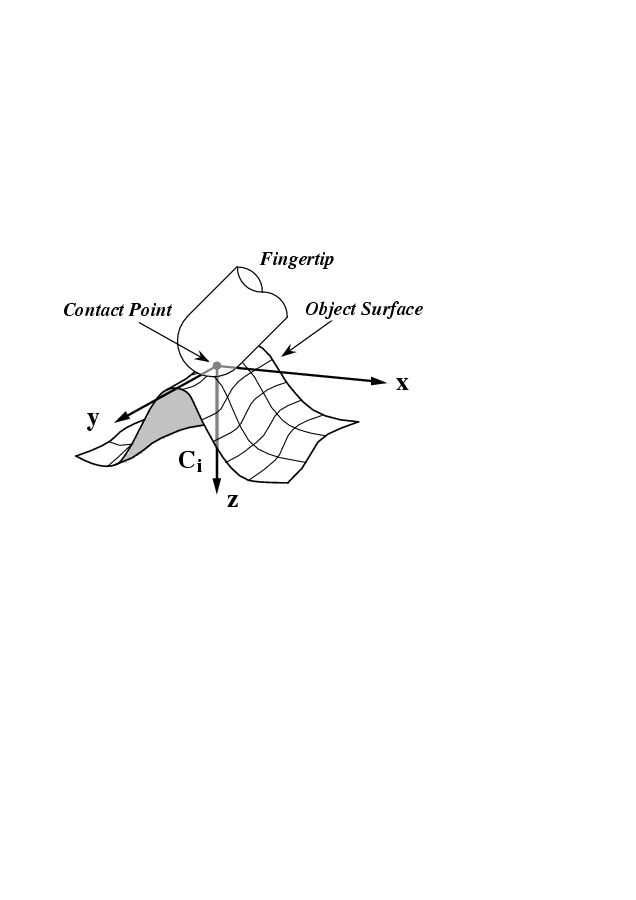

- 4.20. Contact Point and Contact Frame

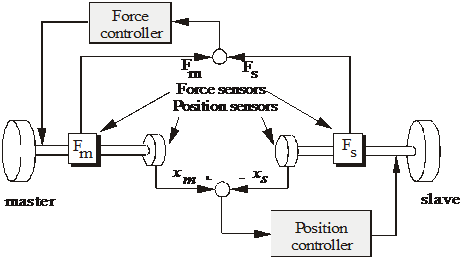

- 4.21. Conventional bilateral control schema with force and position feedback

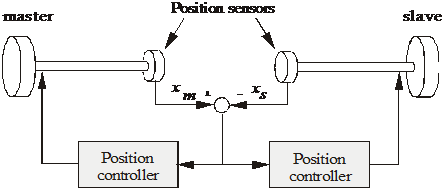

- 4.22. Conventional bilateral control schema with two position control loops

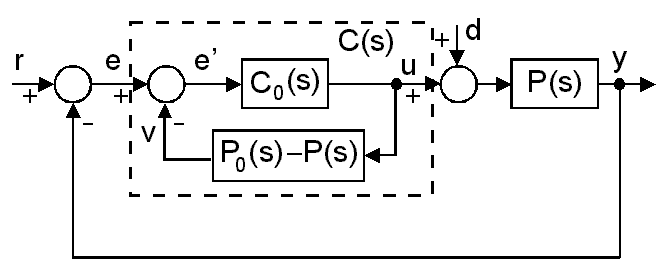

- 4.23. The Configuration of the Smith Predictor

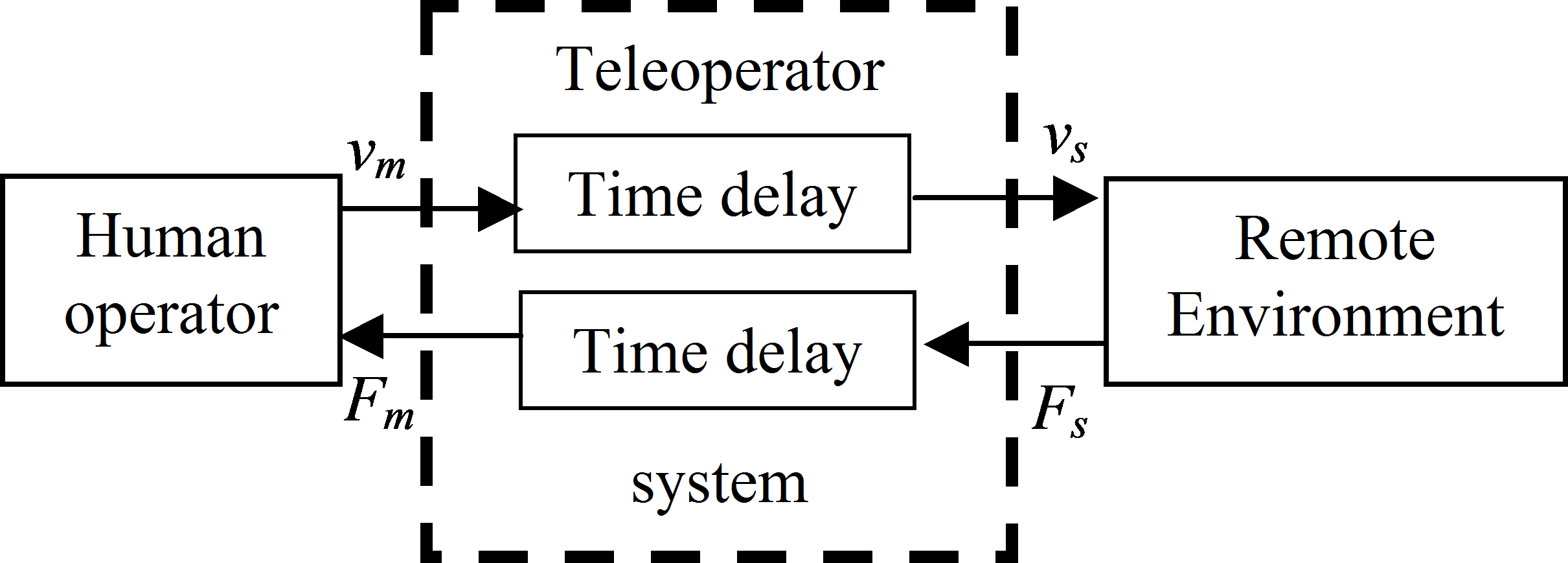

- 4.24. A simple teleoperator with time delay Td.

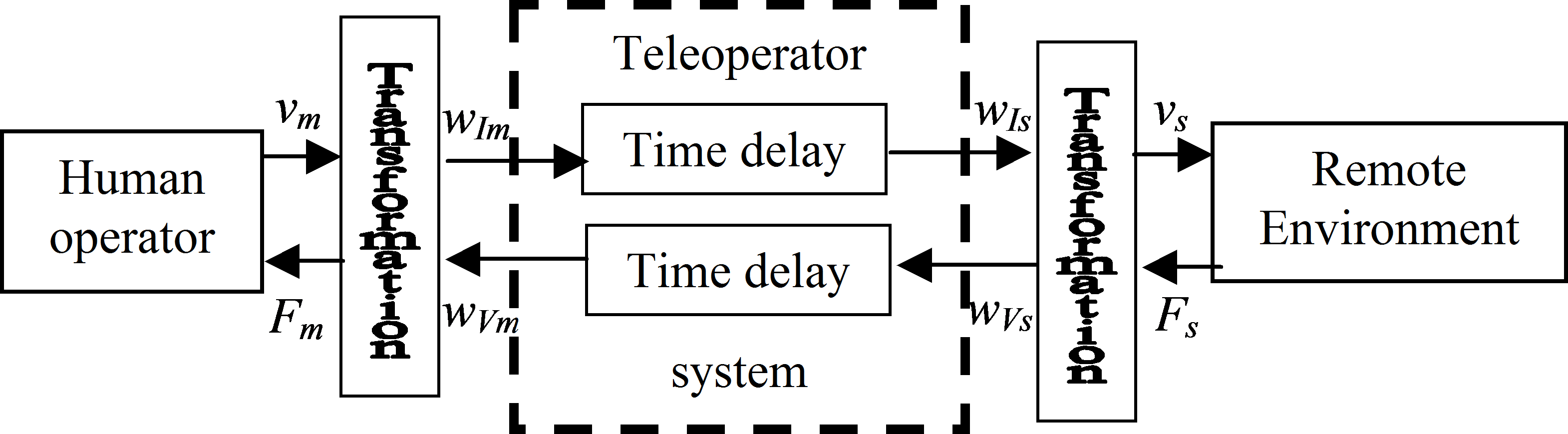

- 4.25. Telemanipulation with wave variables

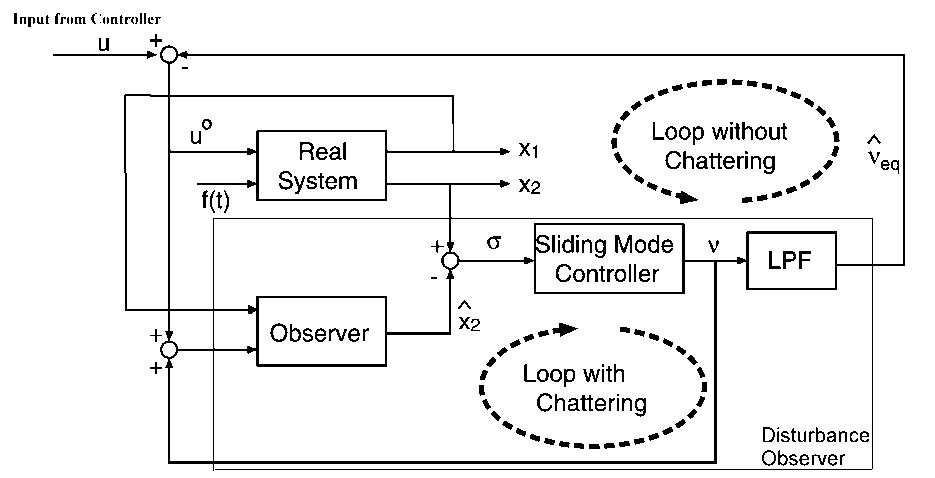

- 4.26. Sliding mode based feedback compensation

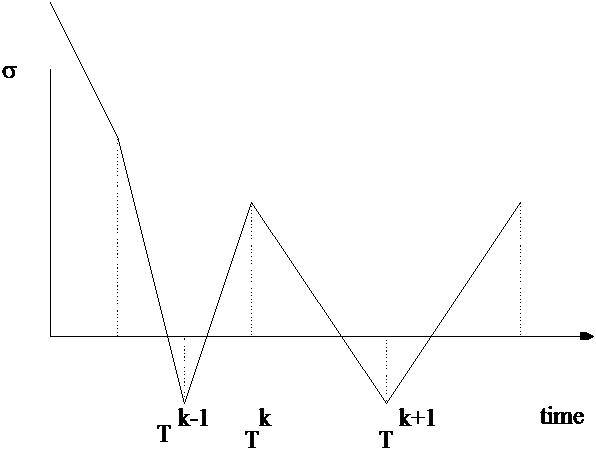

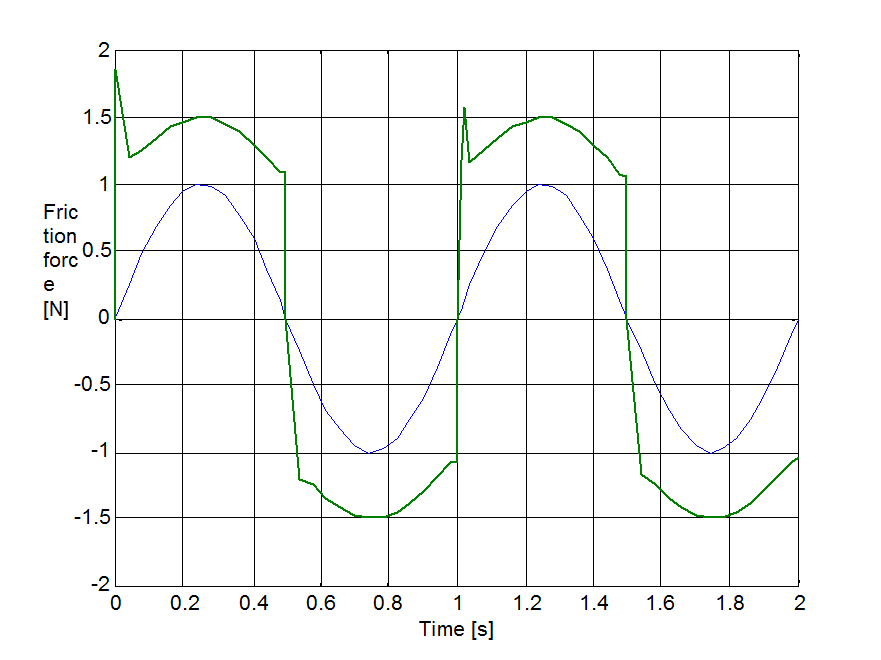

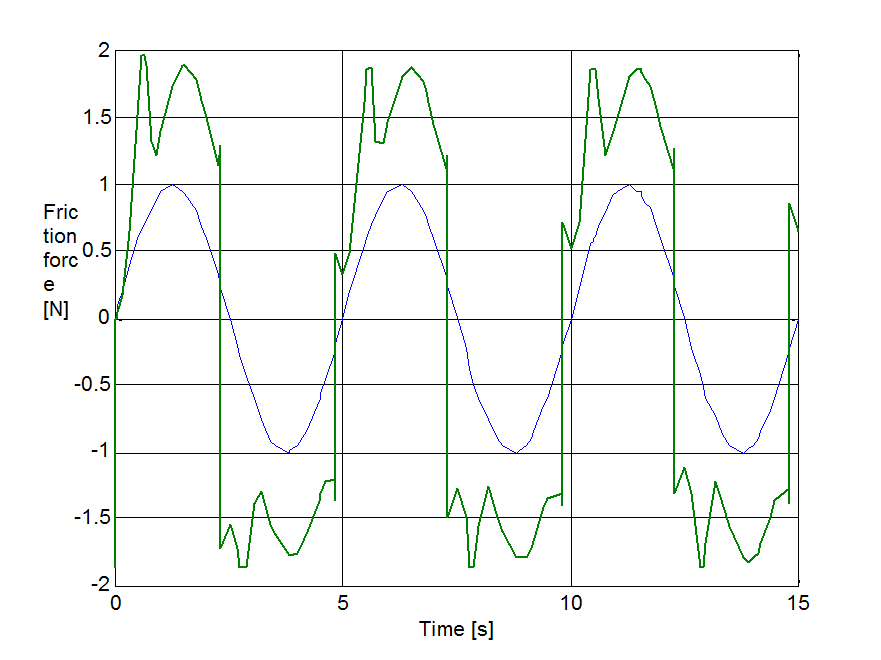

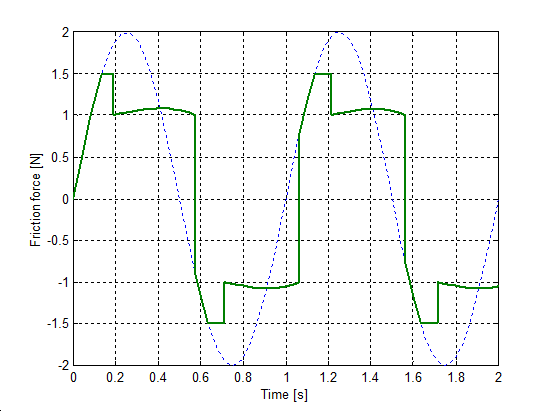

- 4.27. Discrete-time chattering phenomenon

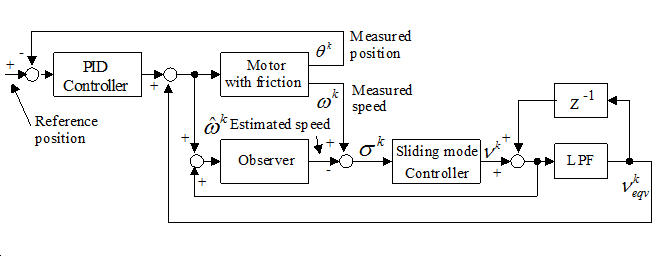

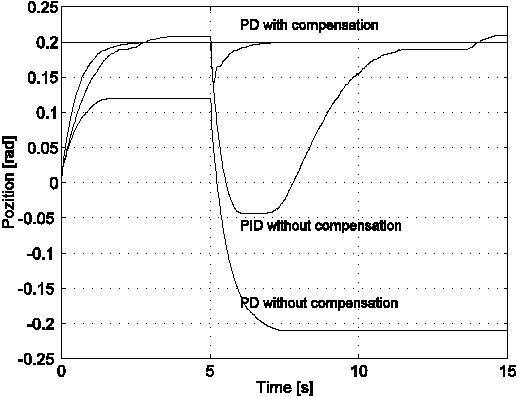

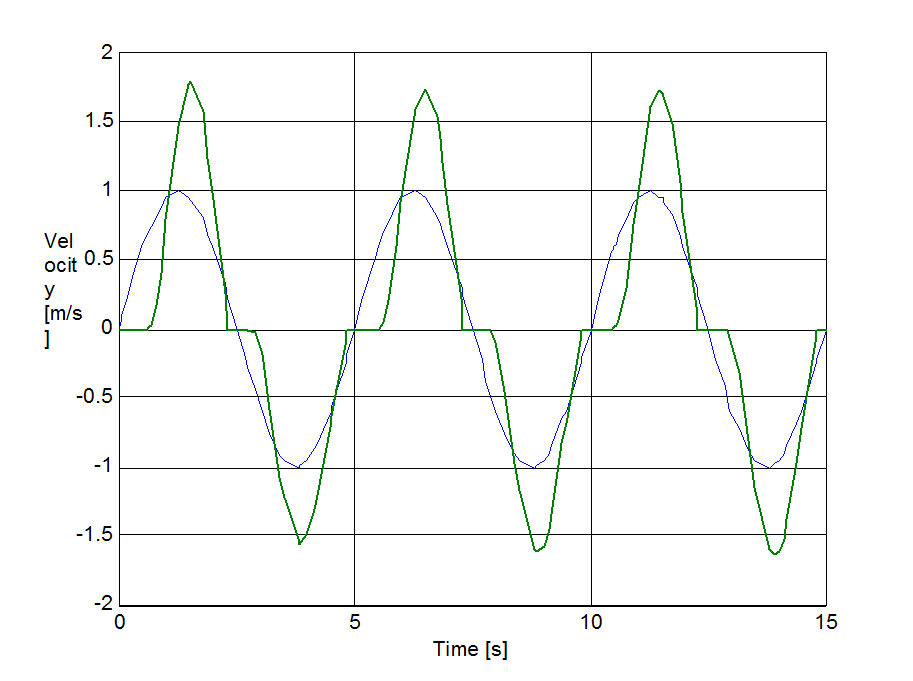

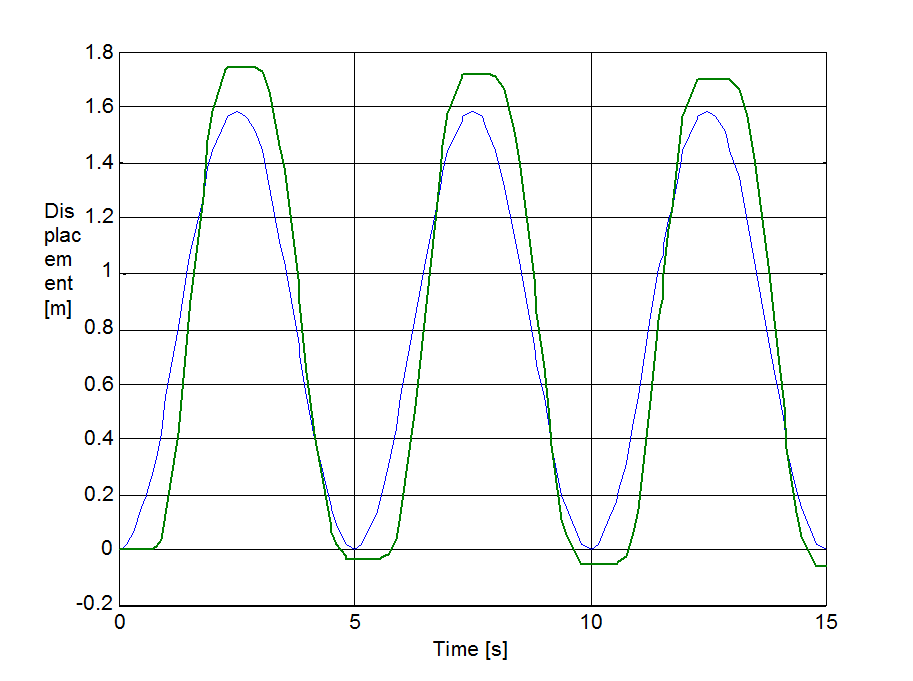

- 4.28. Controller scheme for position control

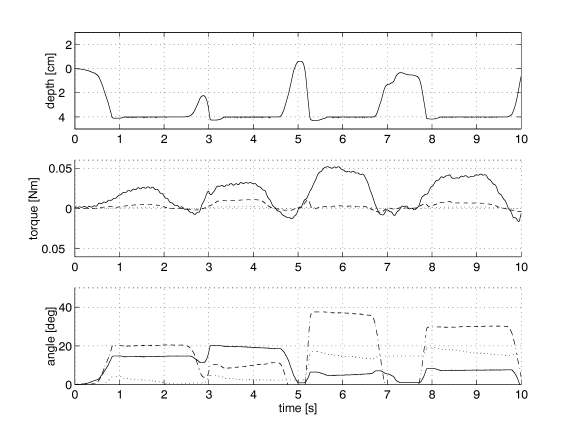

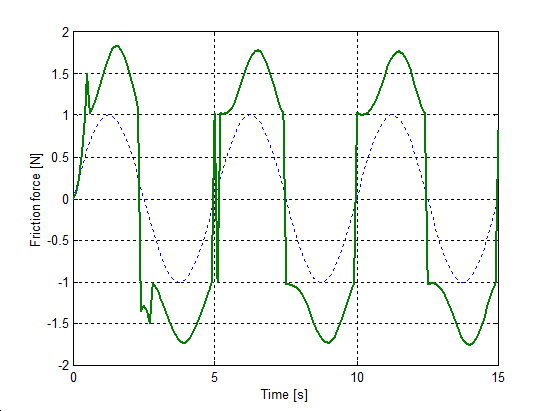

- 4.29. Experimental results: Position control tests

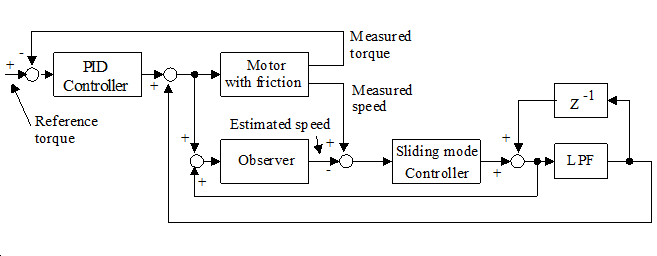

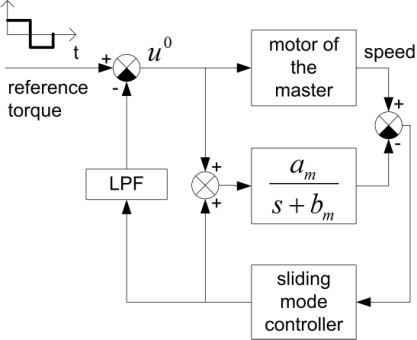

- 4.30. Overall control scheme for force control

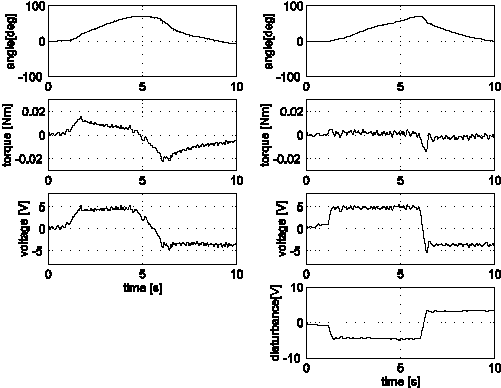

- 4.31. Experimental results for one joint of the glove type device: (left) PID, (right) PID with disturbance observer; angle of motor, torque of human joint, output voltage and estimated disturbance

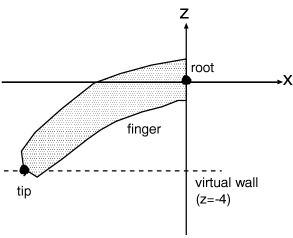

- 4.32. The geometry setup of the virtual wall touching experiment

- 4.33. Measurement results of virtual wall touching, depth from the operator’s palm (upper) and the torque (middle)

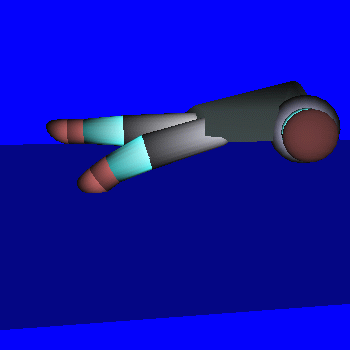

- 4.34. Visual feedback for the operator

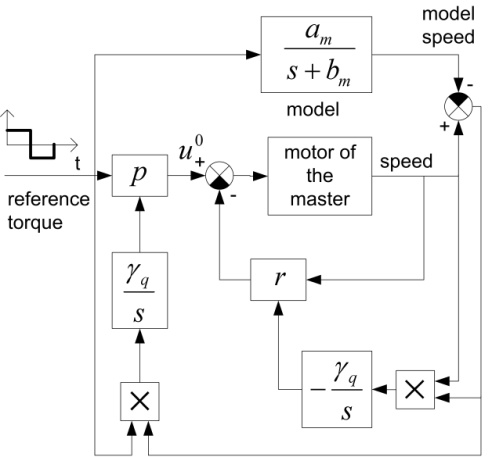

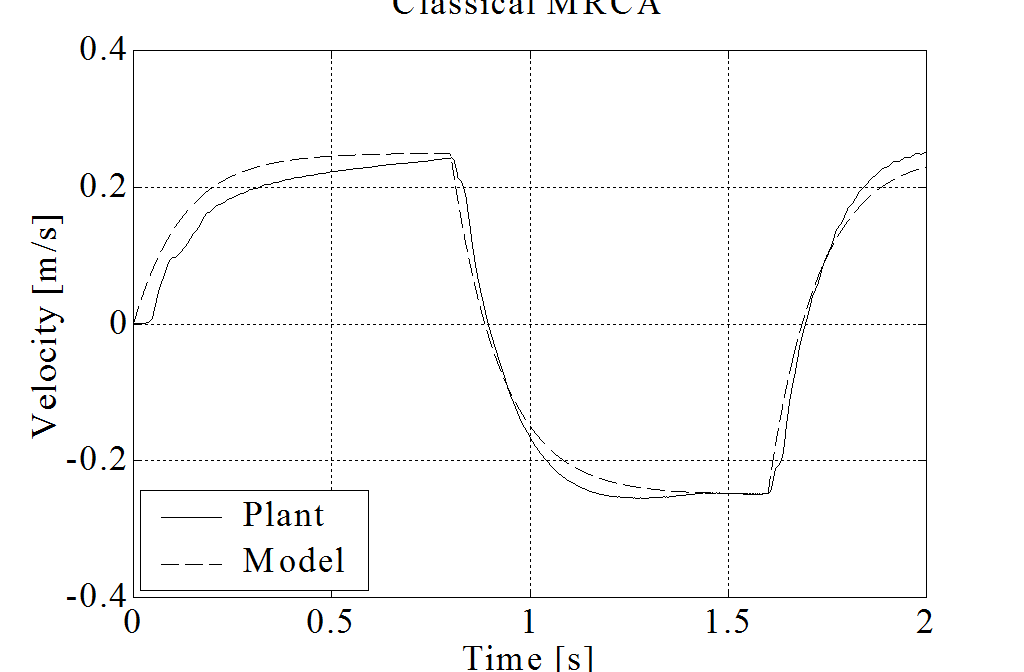

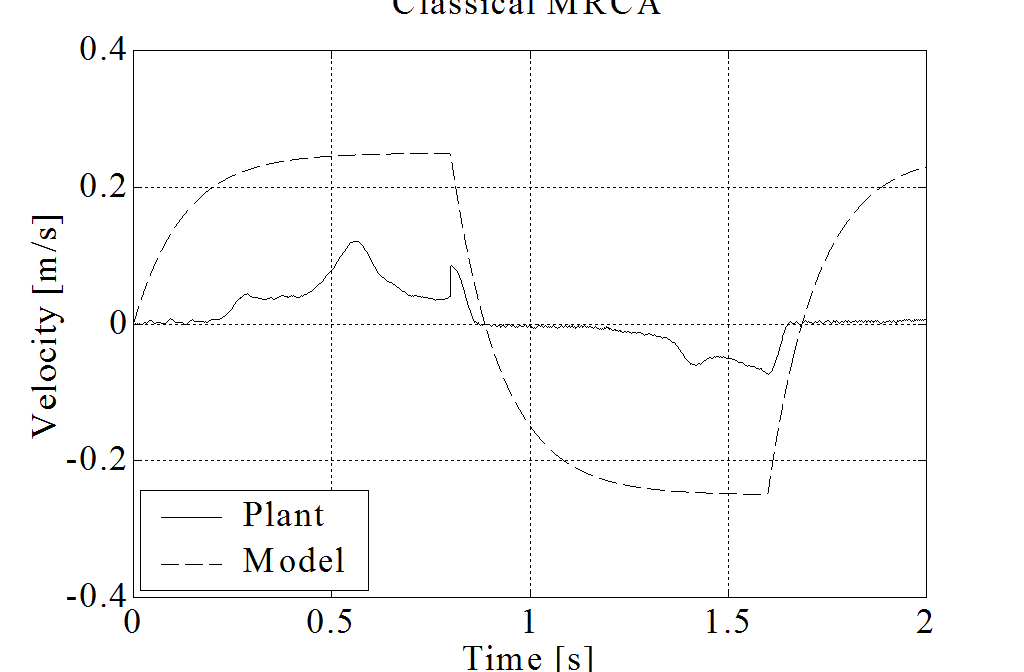

- 4.35. Classical MRAC scheme

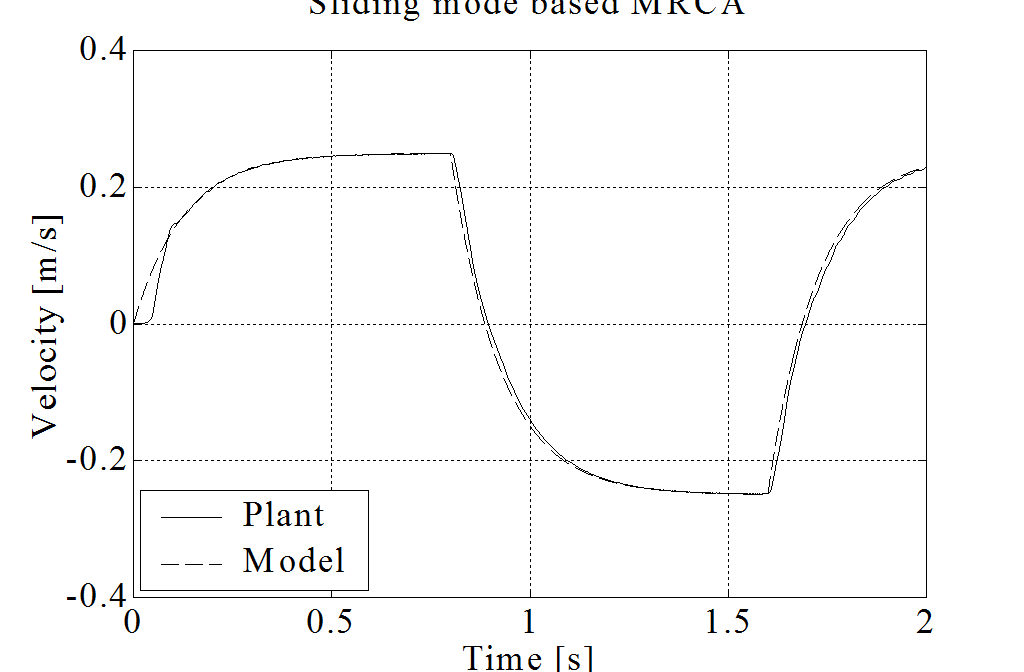

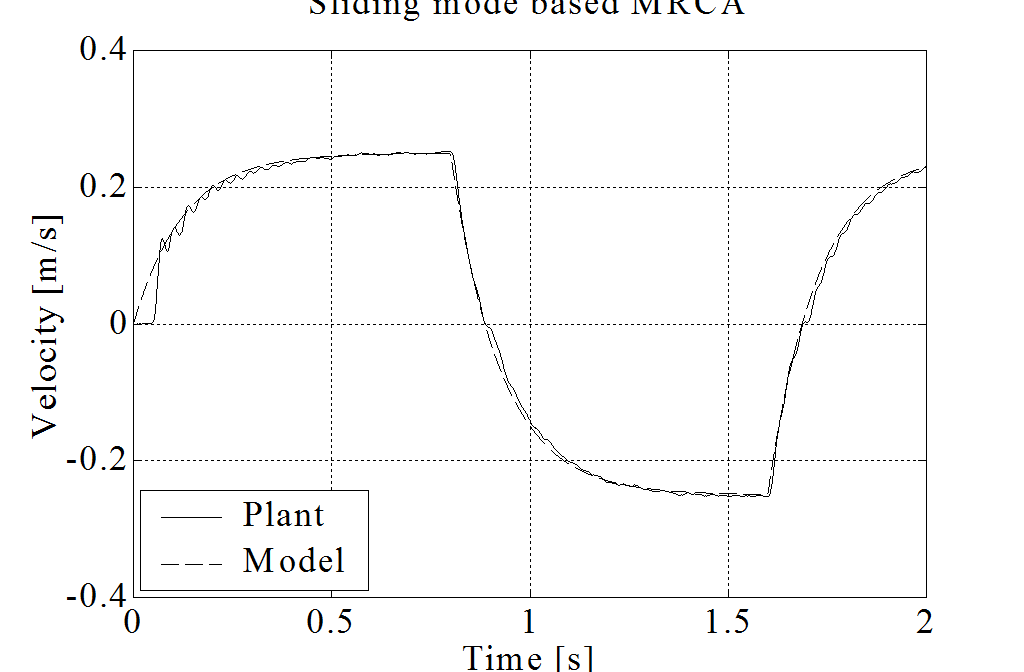

- 4.36. Sliding mode based MRAC scheme

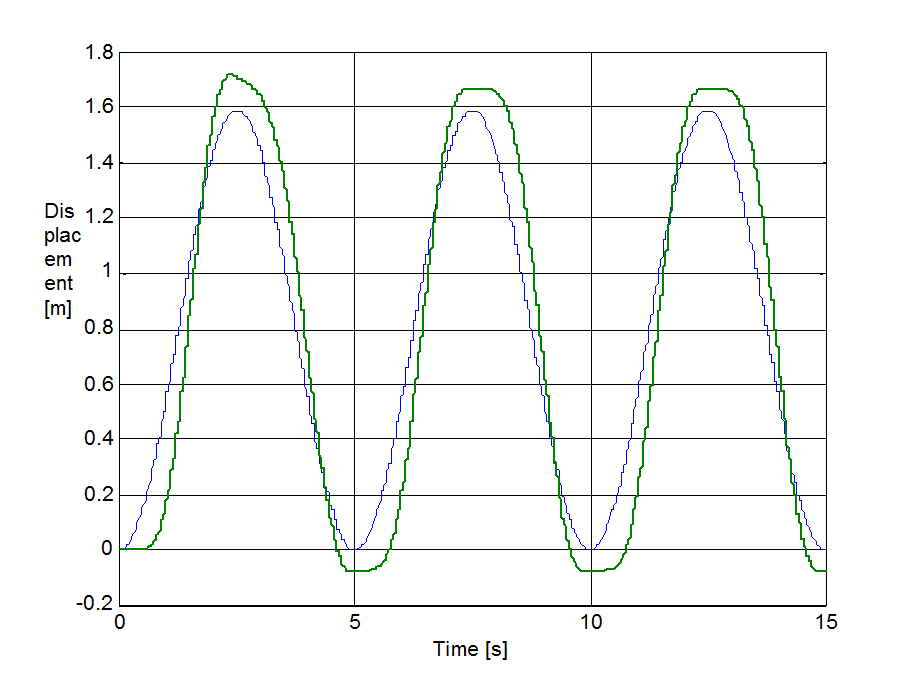

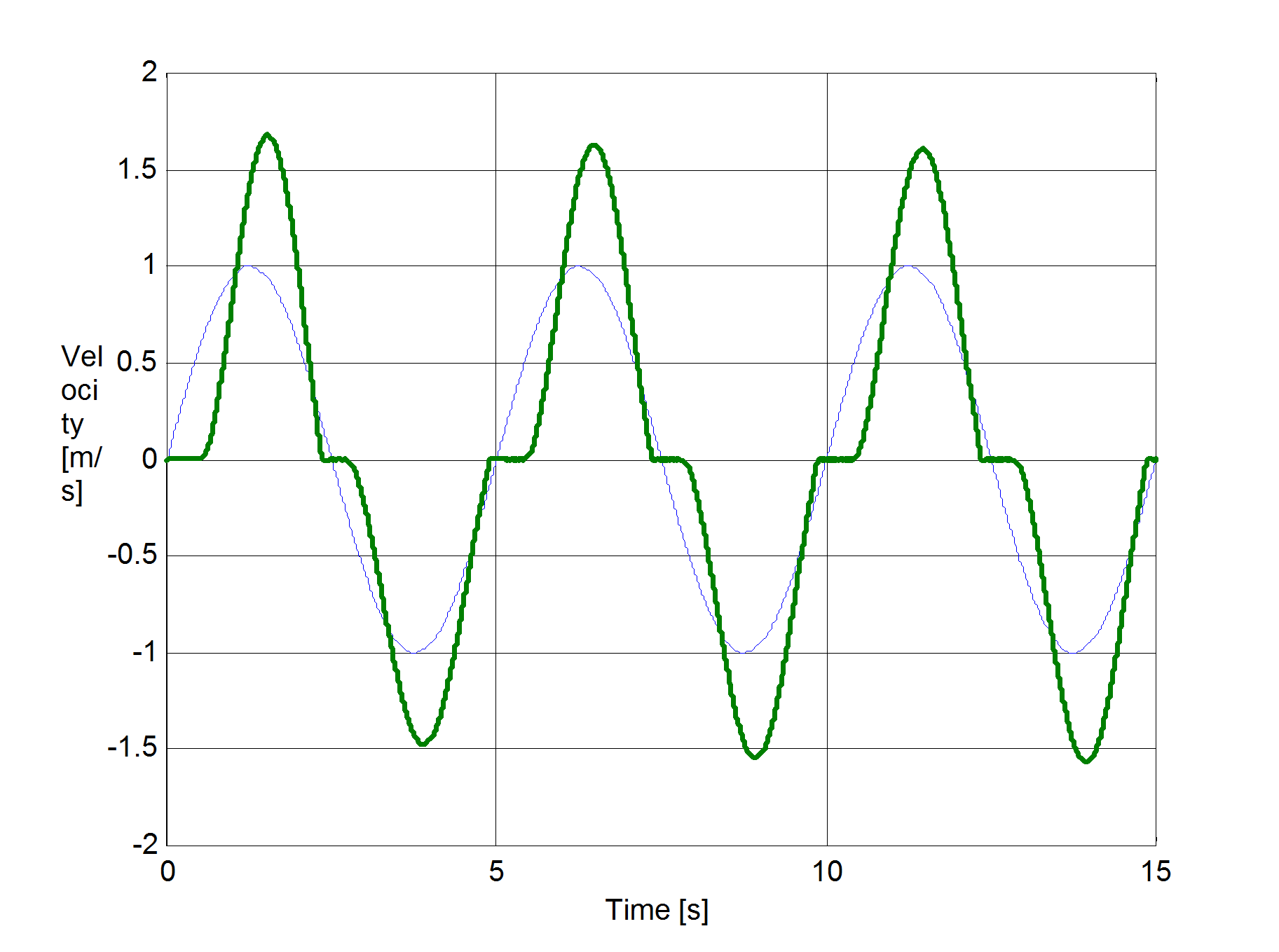

- 4.37. Axis X: Comparison of the response of the reference model and the real plant

- 4.38. Axis Y: Comparison of the response of the reference model and the real plant

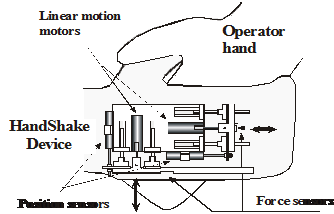

- 4.39. Tele Handshaking Device: (a) Photo (b) Structure

- 4.40. One DOF linear motion manipulator with virtual impedance

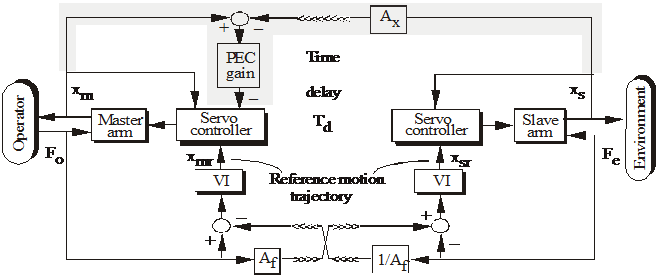

- 4.41. Virtual Impedance with Position Error Correction for a teleoperator system with time delay

- 4.42. Control diagram of the Handshaking device

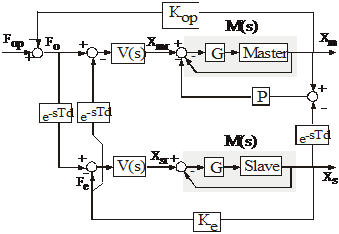

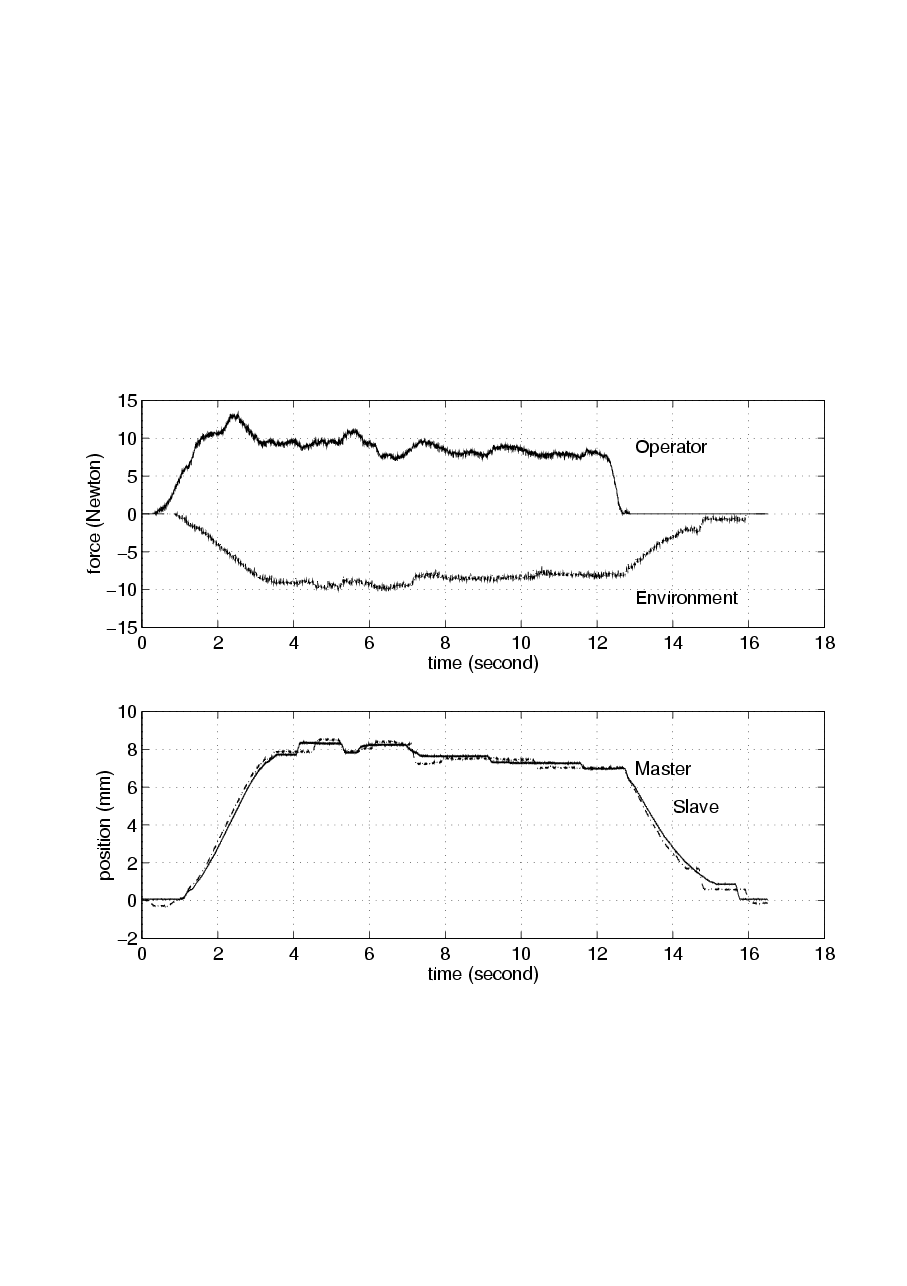

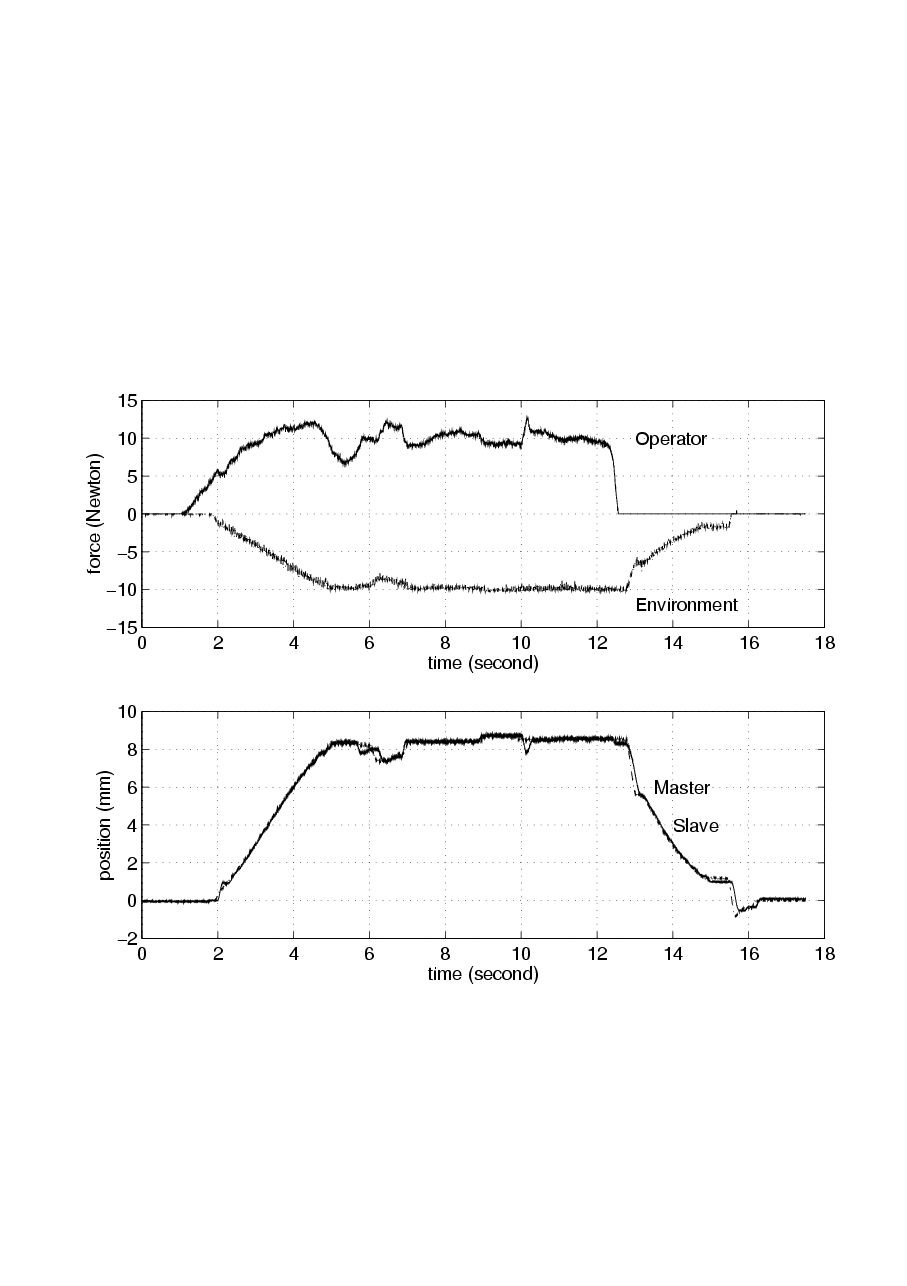

- 4.43. Experimental results of tele handshaking device without time delay (a) Results with VI and without PEC

- 4.44. Experimental results of tele handshaking device without time delay (b) Results with VIPEC

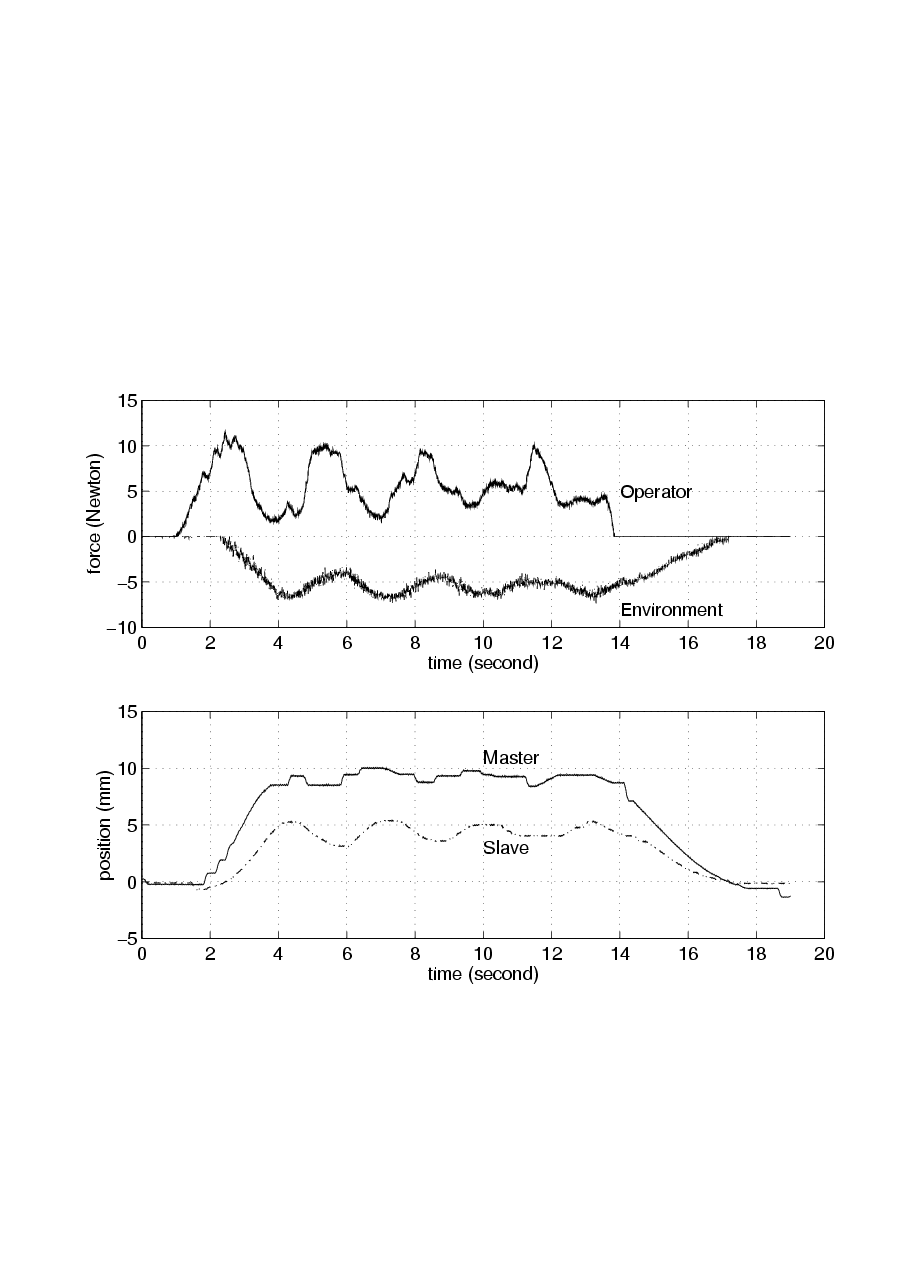

- 4.45. Experimental results of tele handshaking device with 400 ms time delay (a) Results with VI and without PEC

- 4.46. Experimental results of tele handshaking device with 400 ms time delay (b) Results with VIPEC

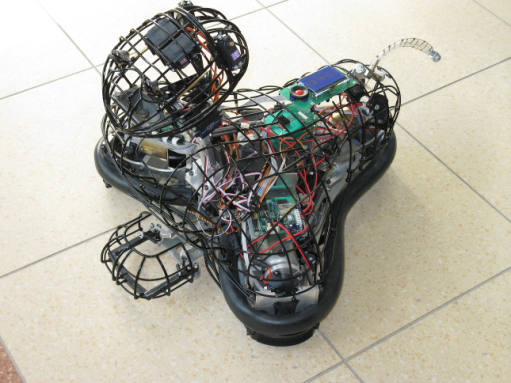

- 5.1. MogiRobi: the holonomic drive based ethological robot

- 5.2. The concept of the iSPACE and the behaviour attitude

- 5.3. MogiRobi expressing sadness

- 5.4. MogiRobi expressing happiness

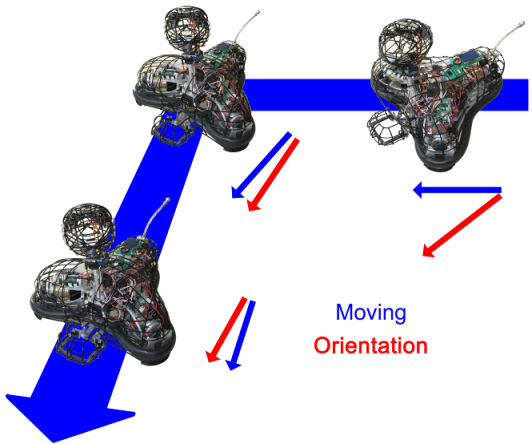

- 5.5. Different direction of moving and looking during holonomic movement.

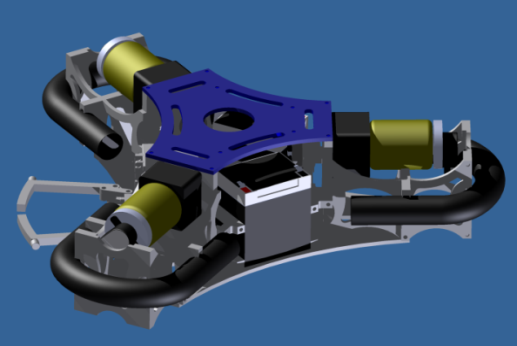

- 5.6. The basement of the robot

- 5.7. The design of the basement

- 5.8. The omnidirectional wheels

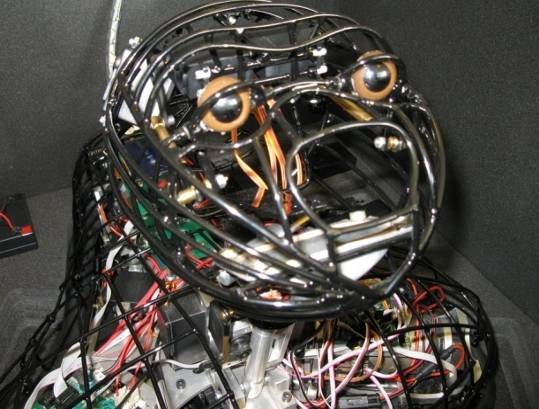

- 5.9. The neck of the robot

- 5.10. The ball joint of the head

- 5.11. The head

- 5.12. The gripper

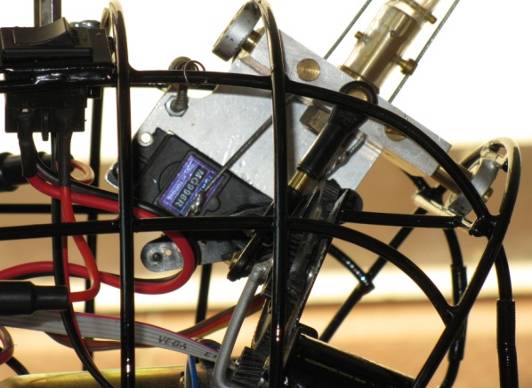

- 5.13. The oscillating mechanical system and the wired servo drive

- 5.14. The tail

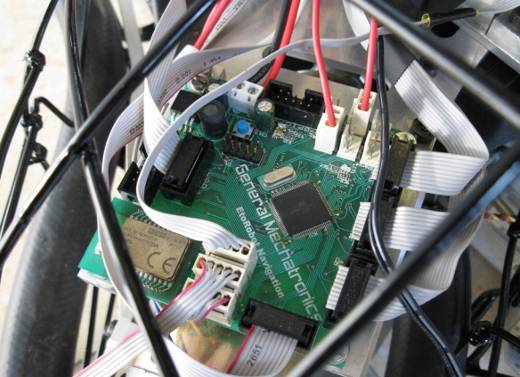

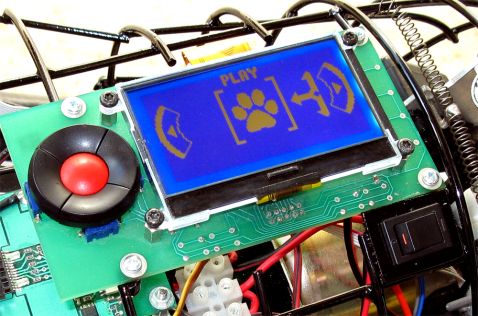

- 5.15. The motion control board

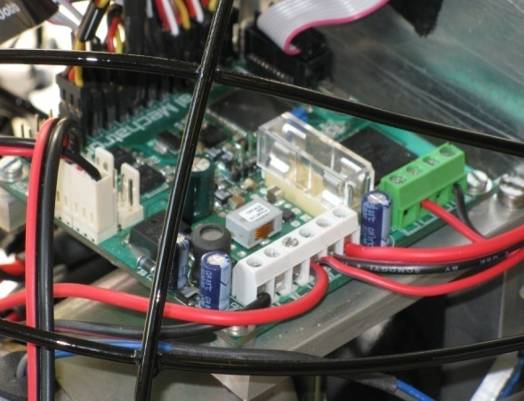

- 5.16. The servo control board

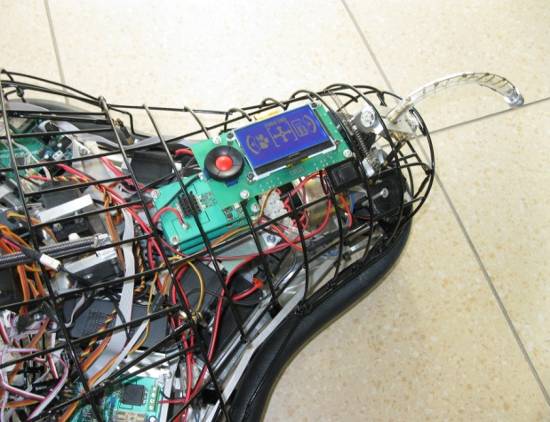

- 5.17. The LCD and the control buttons

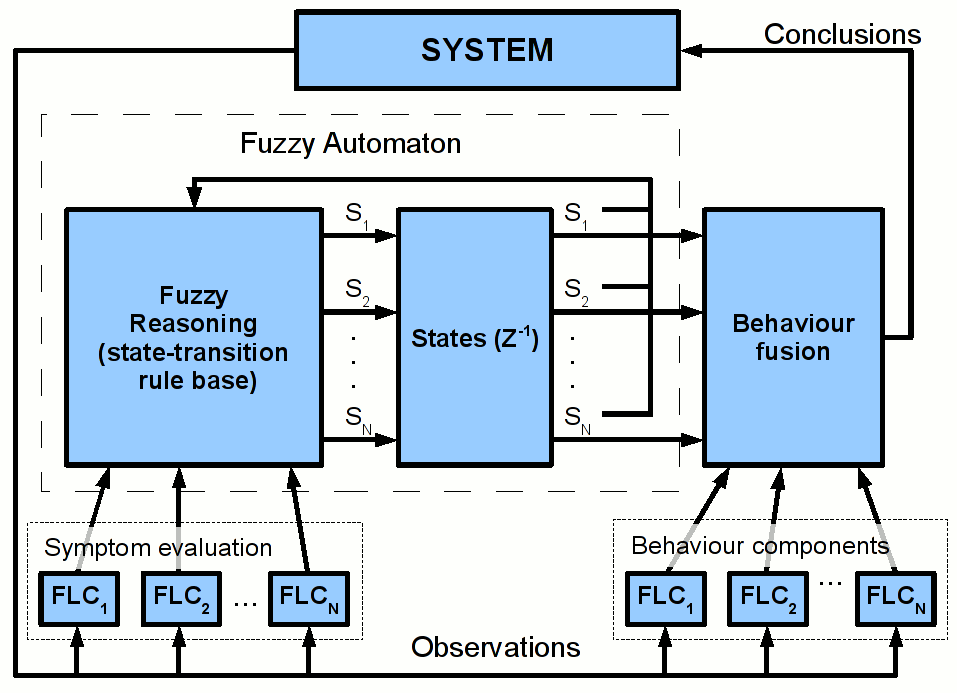

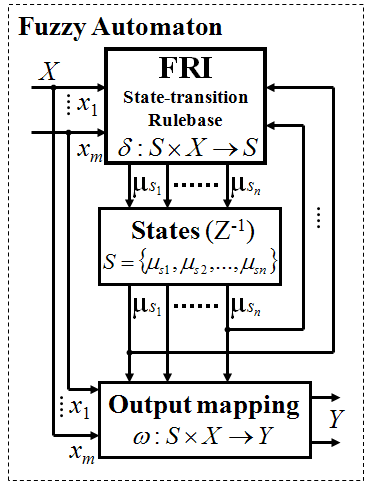

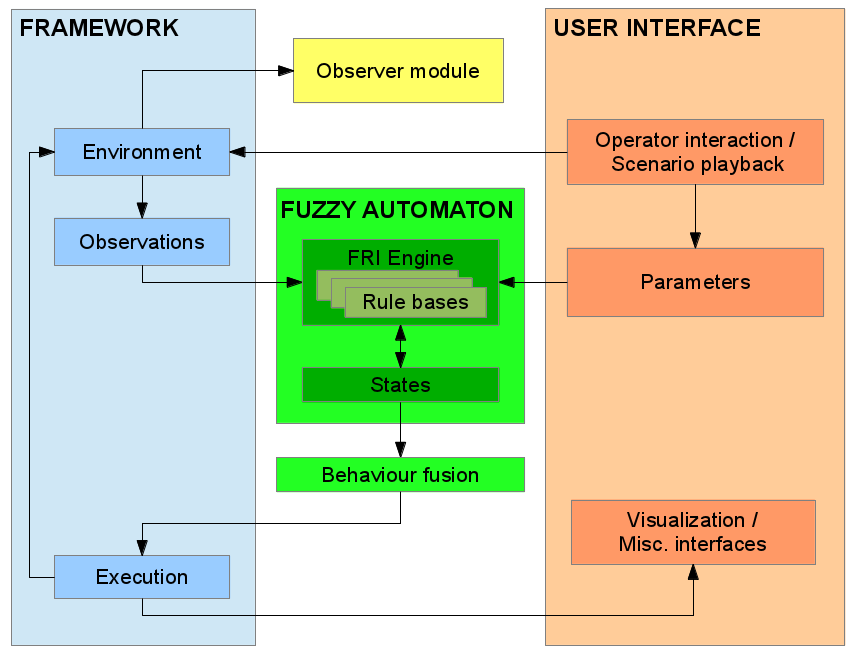

- 6.1. Diagram of the fuzzy automaton

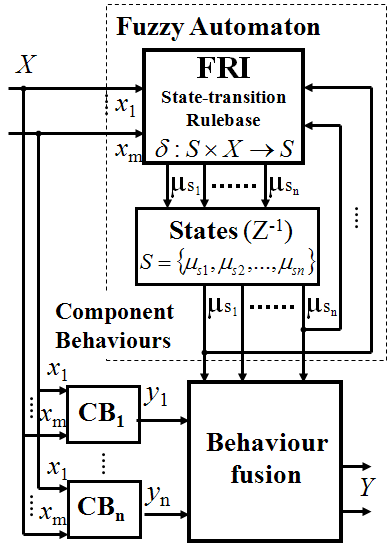

- 6.2. FRI based Fuzzy Automaton.

- 6.3. FRI behaviour-based structure

- 6.4. Structure of the simulation

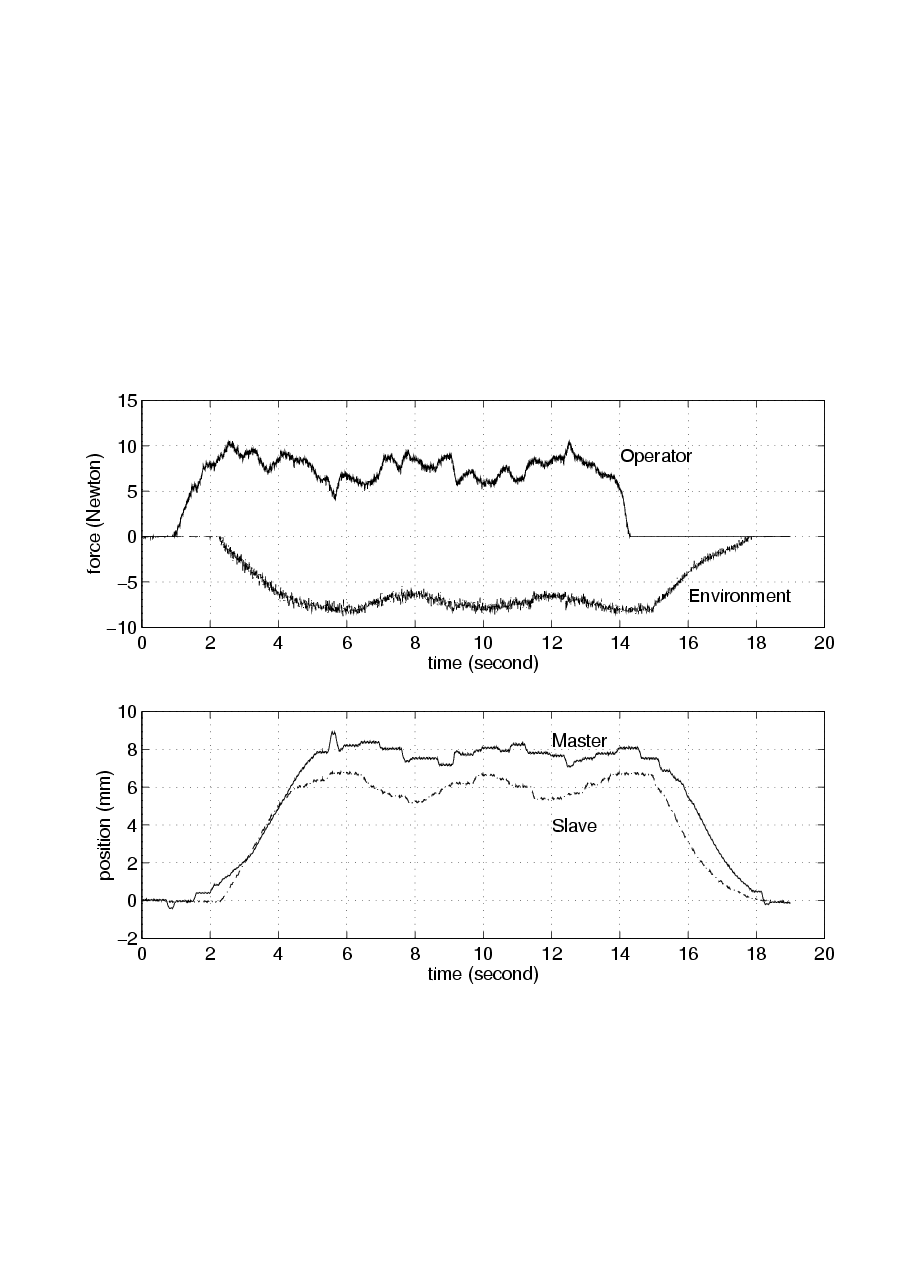

- 6.5. Screenshot of the simulation application

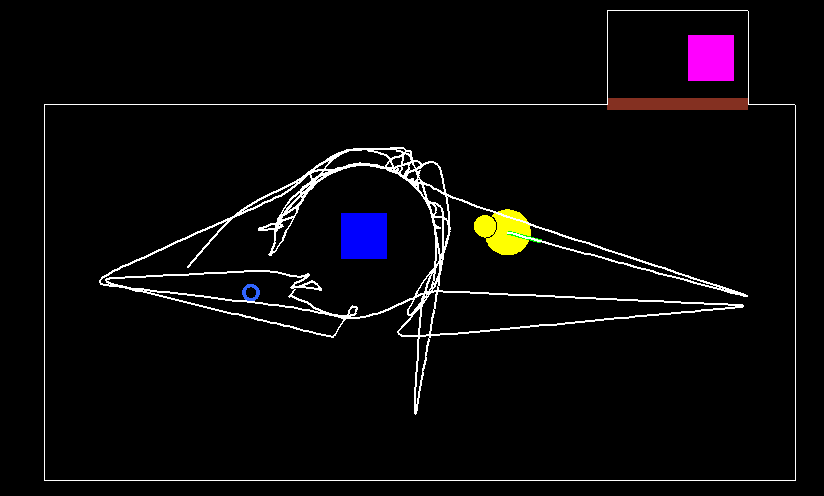

- 6.6. A sample track induced by the exploration behaviour component

- 6.7. A sample track induced by the ‘DogGoesToDoor’ behaviour component

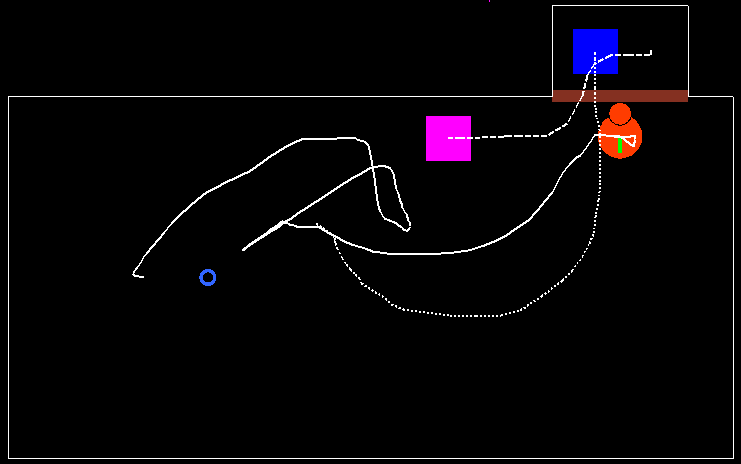

- 6.8. Some of the state changes during the sample run introduced in

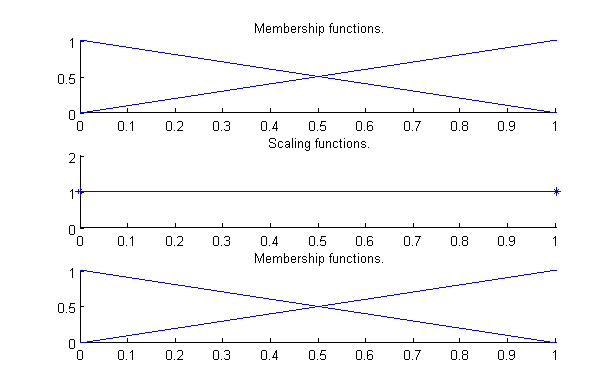

- 6.9. Fuzzy partition of the following terms: dgro - dog greets owner, dpmo - dog’s playing mood with the owner, dpms - dog’s playing mood with the stranger, dgtt - dog goes to toy, dgtd - dog goes to door, oir - owner is inside, ogo - owner is going outside

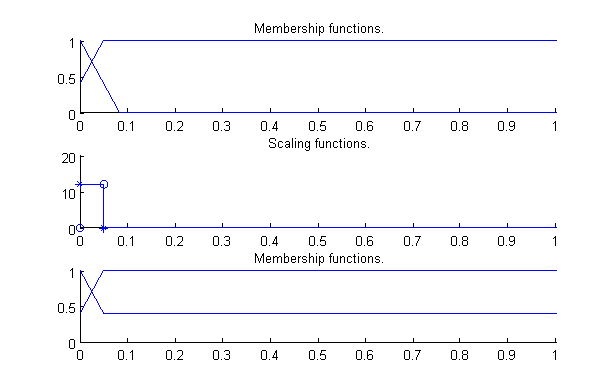

- 6.10. Fuzzy partition of the term ddo (distance between dog and owner)

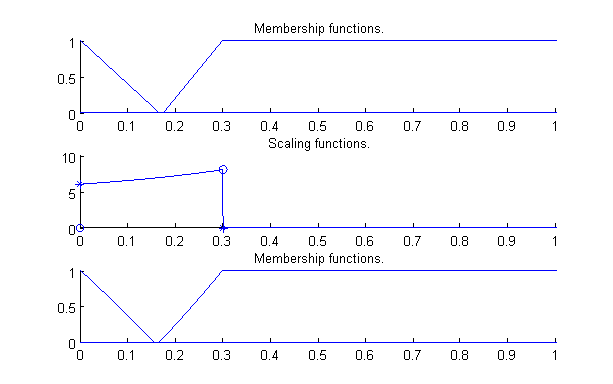

- 6.11. Fuzzy partition of the term danl (dog’s anxiety level)

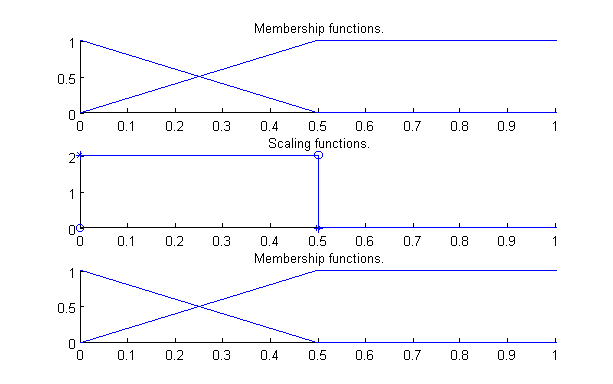

- 6.12. Fuzzy partition of the term dgto (dog is going to owner) and dgtd (dog is going to the door)

- 8.1. Leonardo da Vinci’s studies about the influence of apparent area upon the force of friction.

- 8.2. Amonton’s sketch of his apparatus used for friction measurement in 1699.

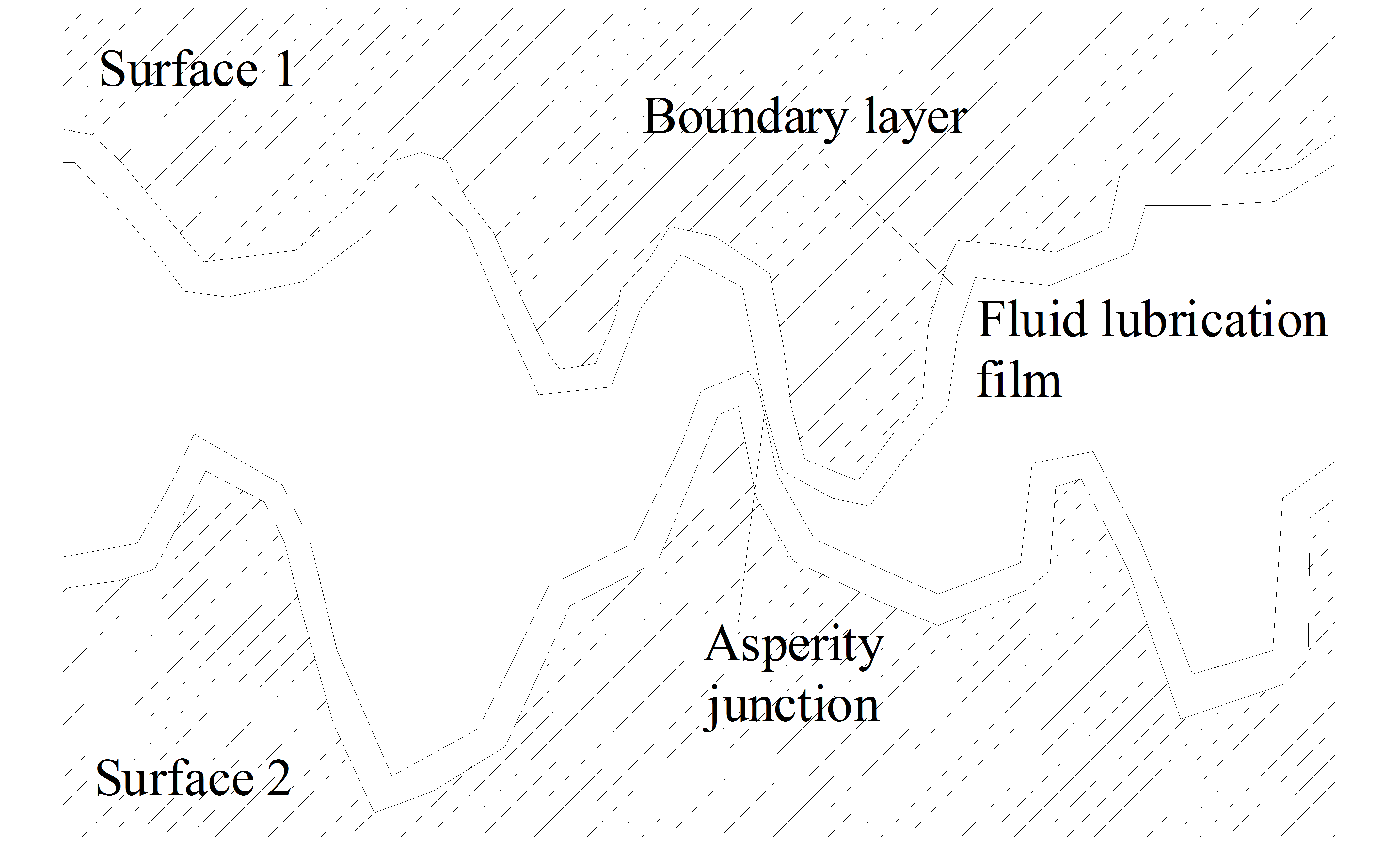

- 8.3. Contact surfaces at microscopic level

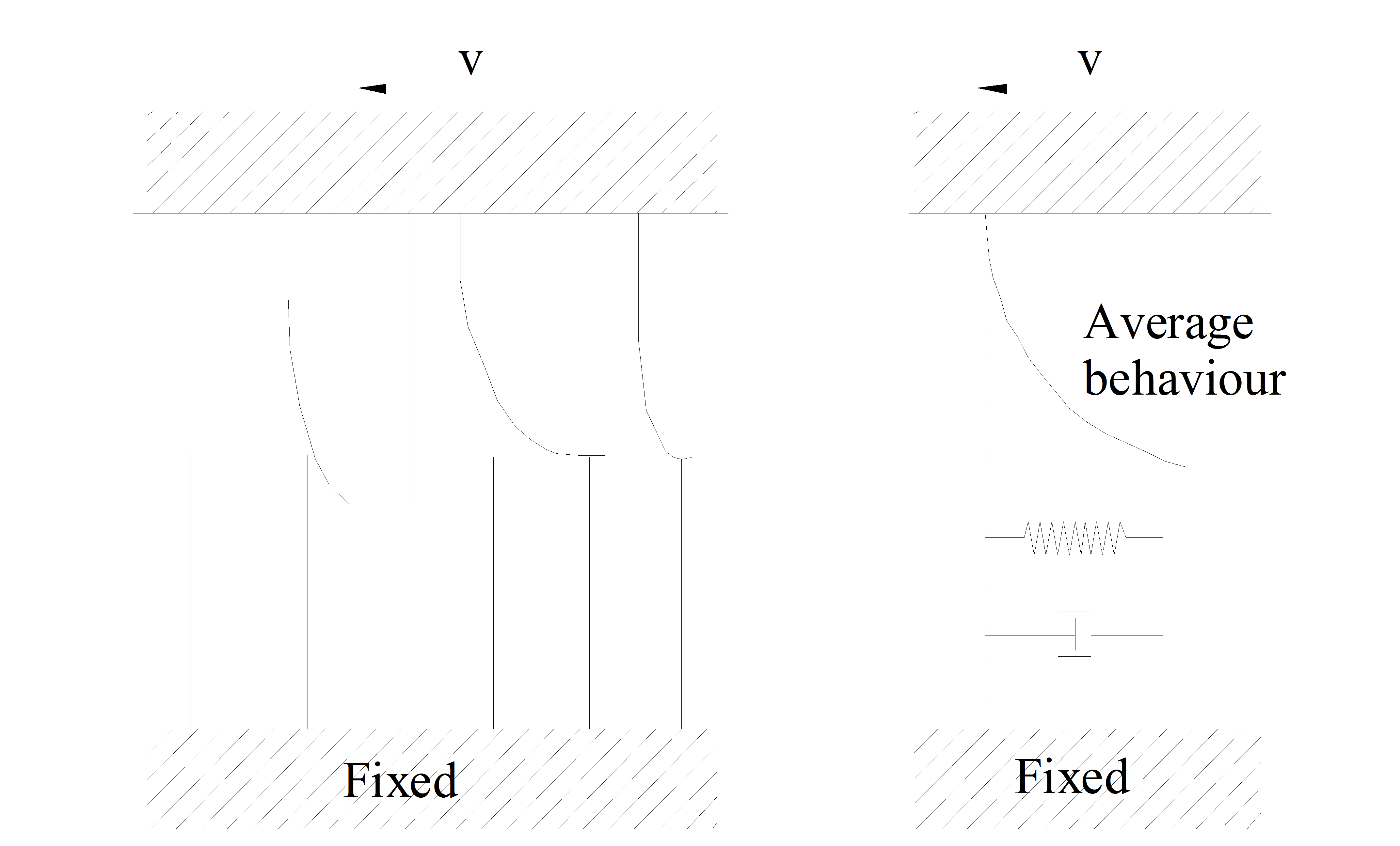

- 8.4. Visualization of rigid bodies in contact

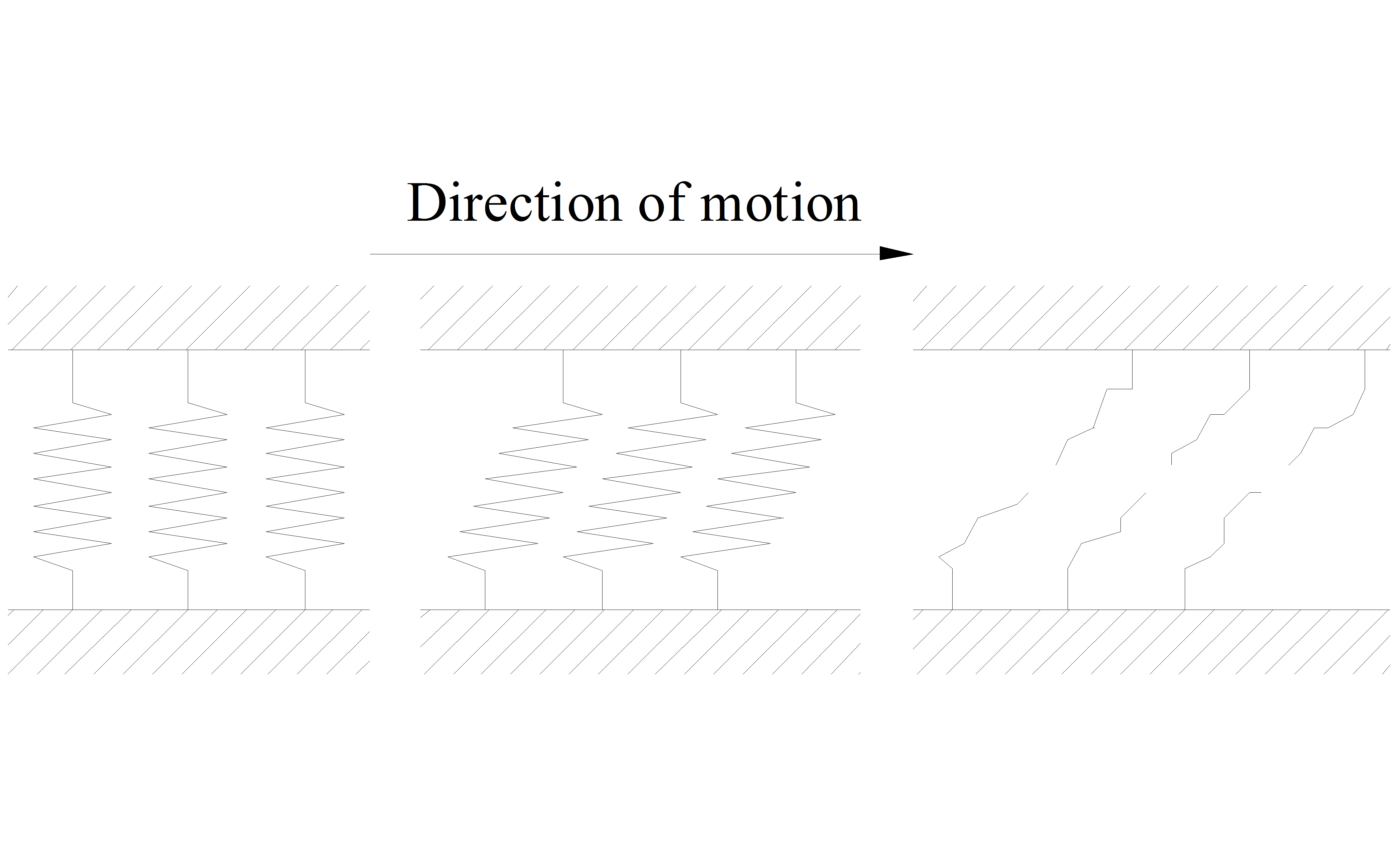

- 8.5. The breaking of bristles

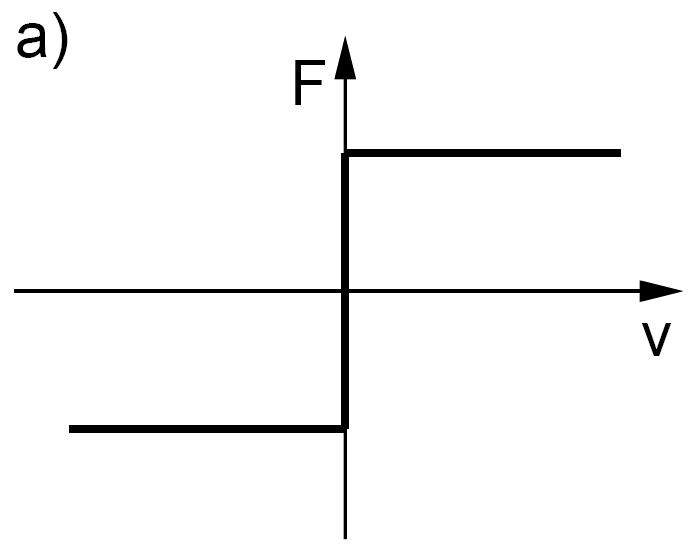

- 8.6. Coulomb friction characteristic

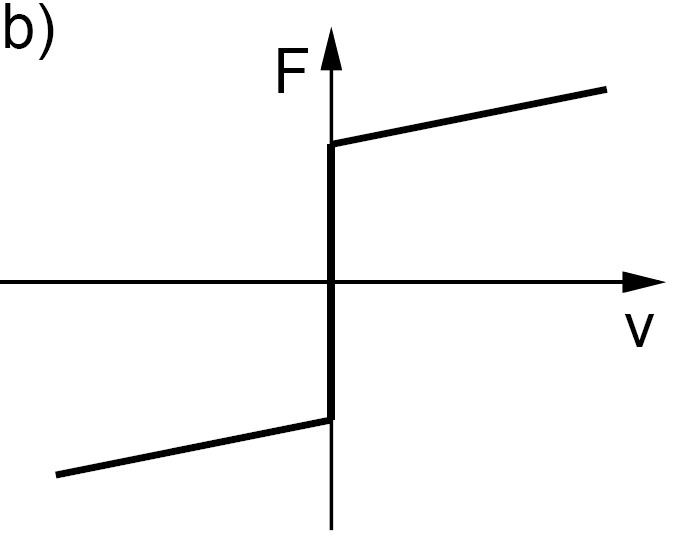

- 8.7. Viscous friction combined with Coulomb friction

- 8.8. Viscous friction combined with Coulomb friction and static friction

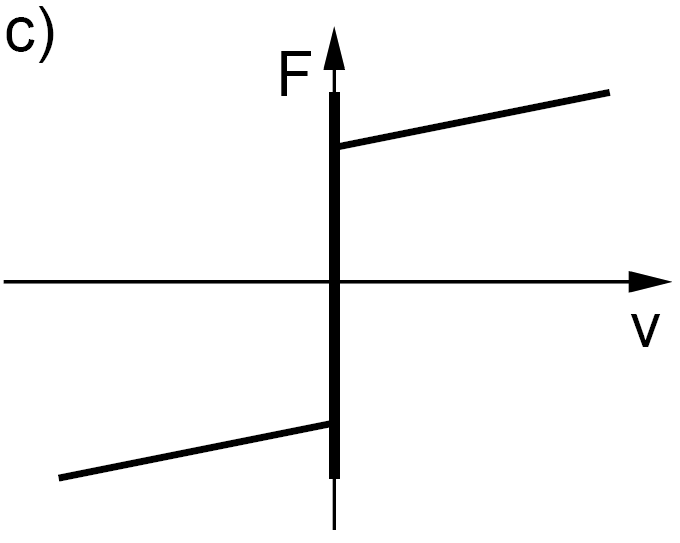

- 8.9. Stribeck friction characteristic

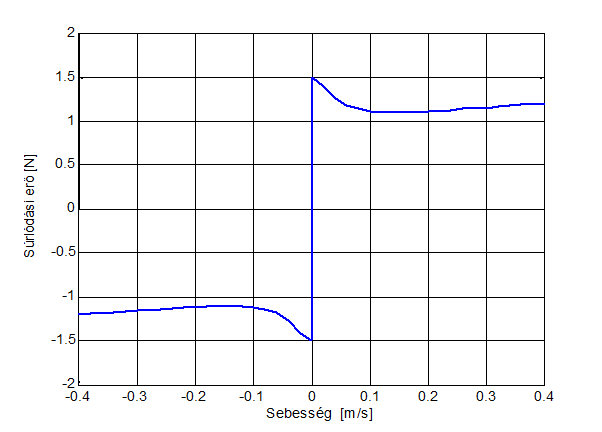

- 8.10. Steady state friction-velocity curve used for simulation

- 8.11. Karnopp model, stick-slip curve

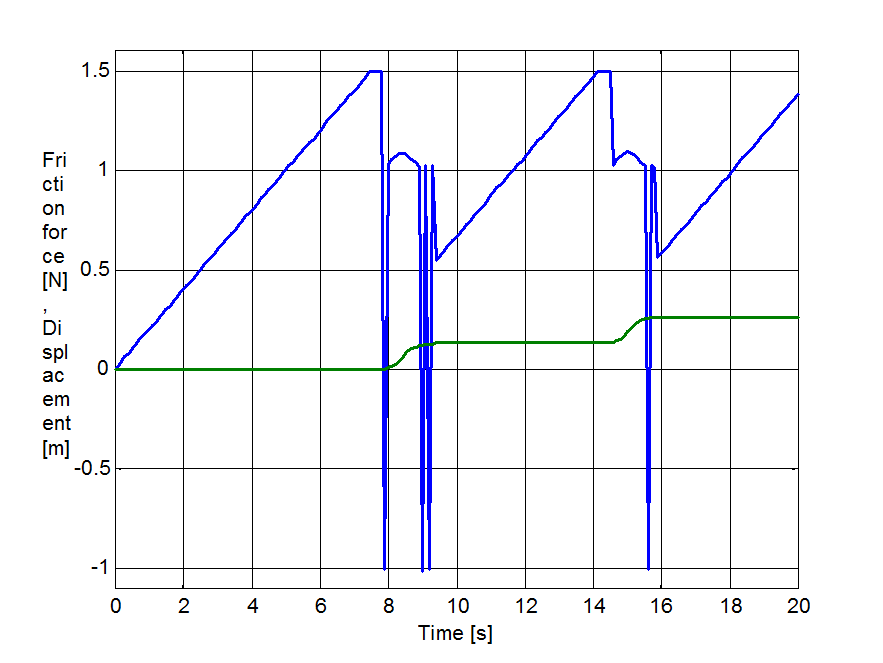

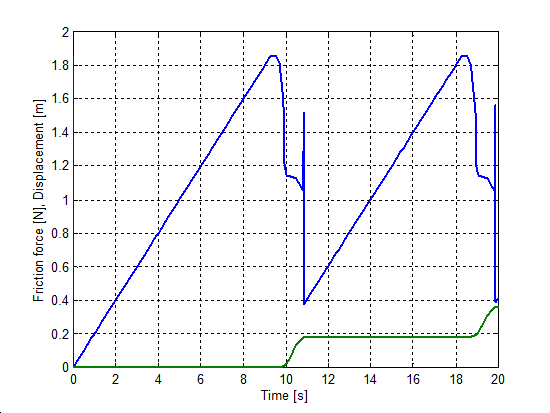

- 8.12. Seven-parameters model, stick-slip curve

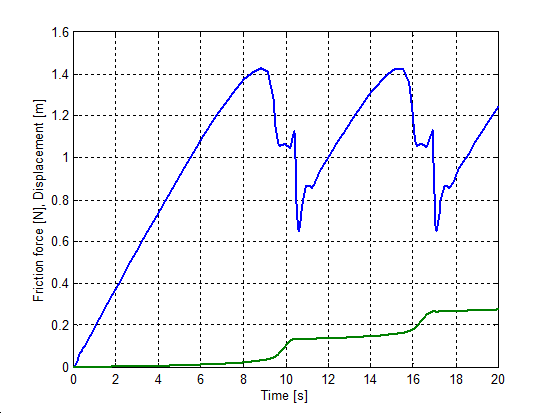

- 8.13. LuGre model, stick-slip curve

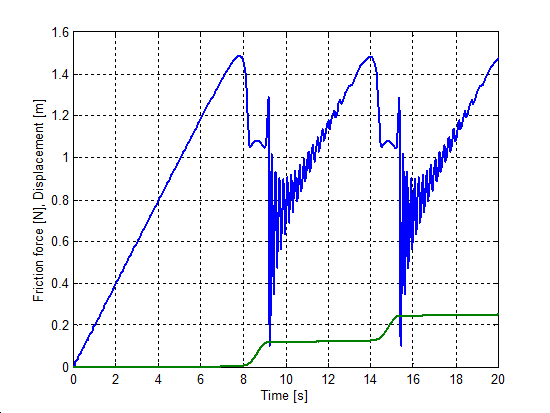

- 8.14. Modified Dahl model, stick-slip curve

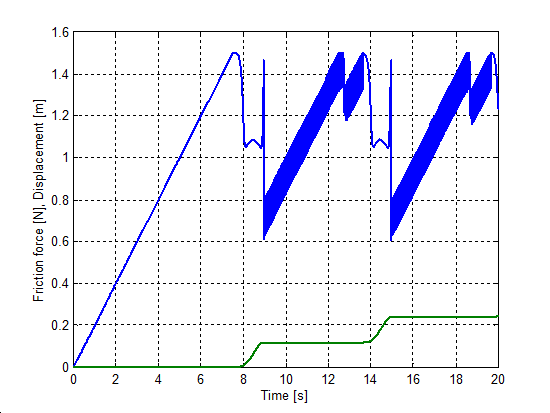

- 8.15. M2 model, stick-slip curve

- 8.16. Seven-parameters model, change of friction force during velocity reversals

- 8.17. Seven-parameters model, change of spring force during velocity reversals

- 8.18. Seven parameter model, change of mass velocity during velocity reversals

- 8.19. Seven parameter model, change of displacement during velocity reversals

- 8.20. Dahl model, change of spring force during velocity reversals

- 8.21. Modified Dahl model, change of mass velocity during velocity reversals

- 8.22. Modified Dahl model, change of displacement during velocity reversals

- 8.23. Modified Dahl model, change of displacement during velocity reve

- 8.24. LuGre model, change of spring force during velocity reversals

- 8.25. LuGre model, change of mass velocity during velocity reversals

- 8.26. LuGre model, change of mass displacement during velocity reversals

- 8.27. LuGre model, change of friction force during velocity reversals

- 8.28. Karnopp model, change of mass velocity during velocity reversals

- 8.29. Karnopp model, change of mass displacement during velocity reversals

- 8.30. Karnopp model, change of friction force during velocity reversals

- 8.31. Karnopp model, change of spring force during velocity reversals

- 8.32. M2 model, change of mass velocity during velocity reversals

- 8.33. M2 model, change of mass displacement during velocity reversals

- 8.34. M2 model, change of friction force during velocity reversals

- 8.35. M2 model, change of spring force during velocity reversals

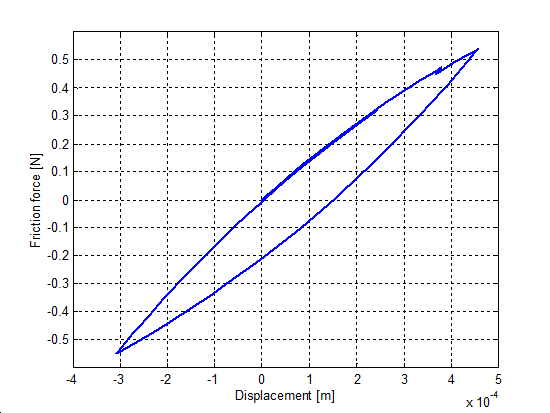

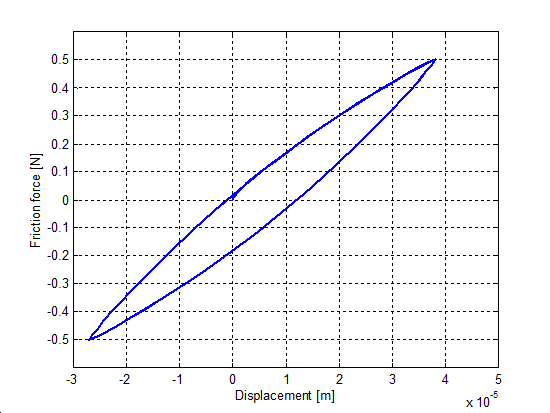

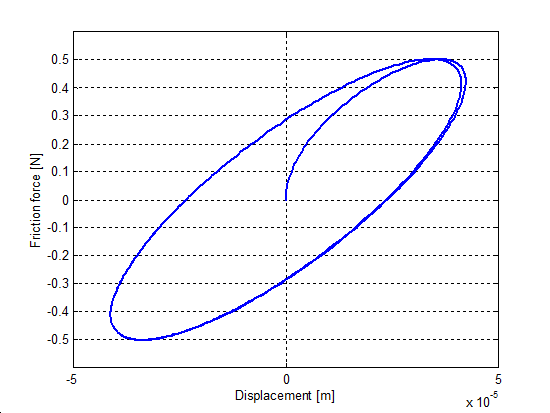

- 8.36. Presliding displacement curve of the seven-parameters friction model

- 8.37. Presliding displacement curve of LuGre model

- 8.38. Presliding displacement curve of Modified Dahl model

- 8.39. Presliding displacement curve of M2 model

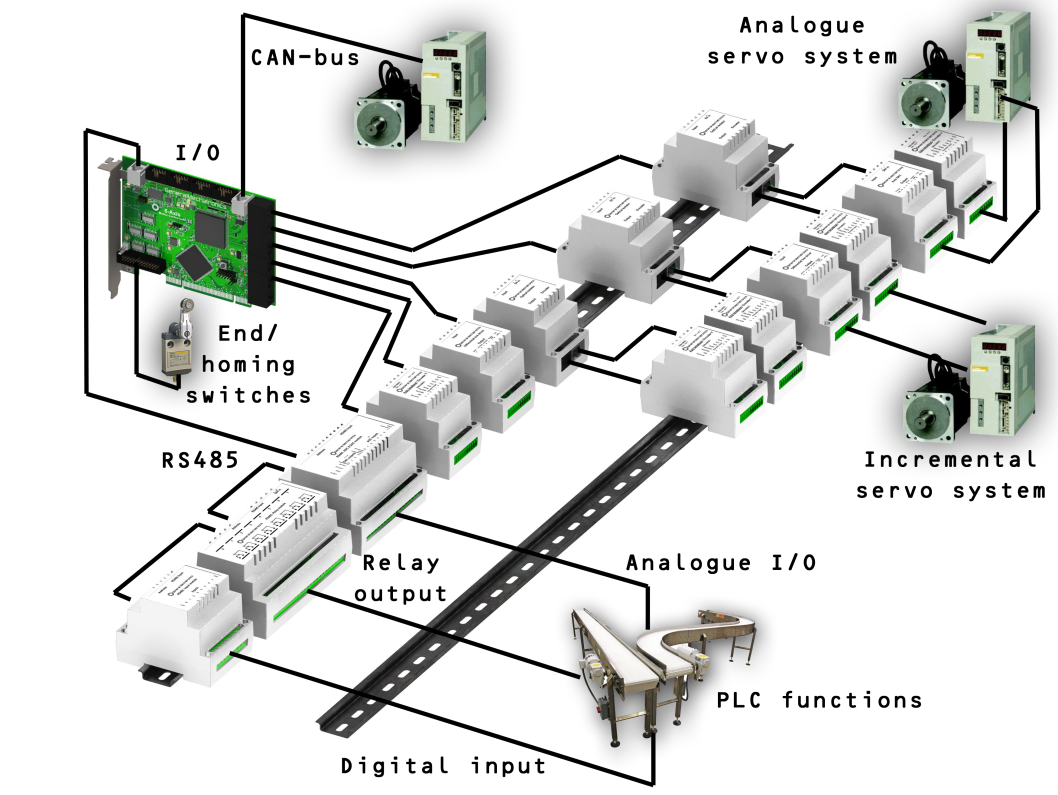

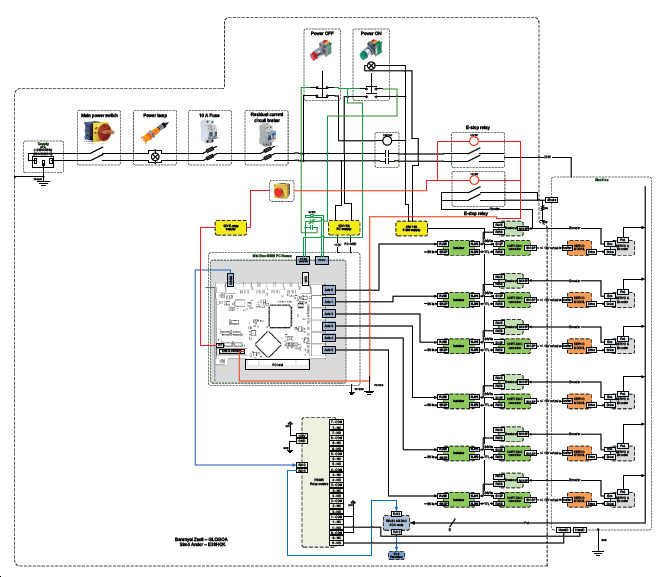

- 9.1. Connection layout of PCI card based motion control system

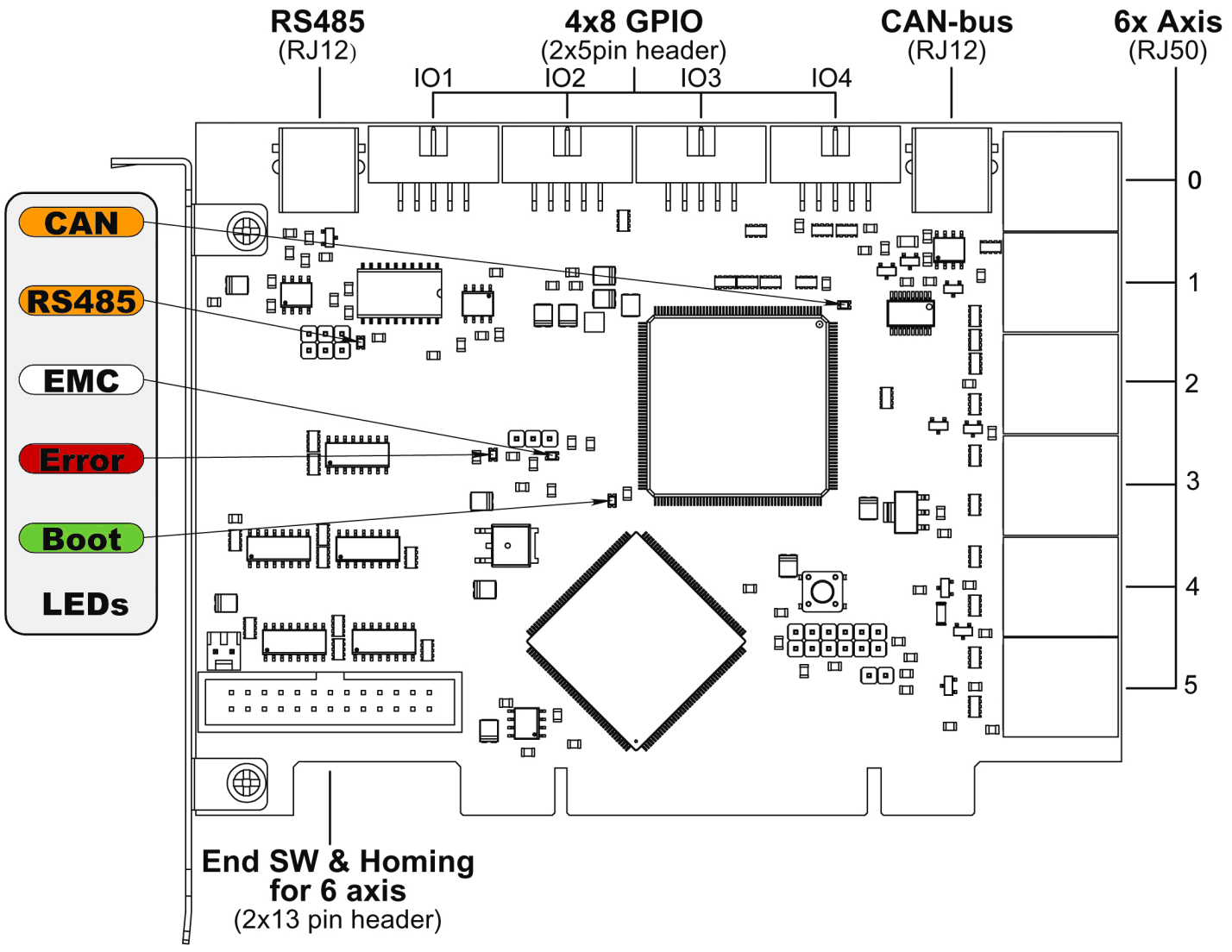

- 10.1. PCI card connectors and LEDs

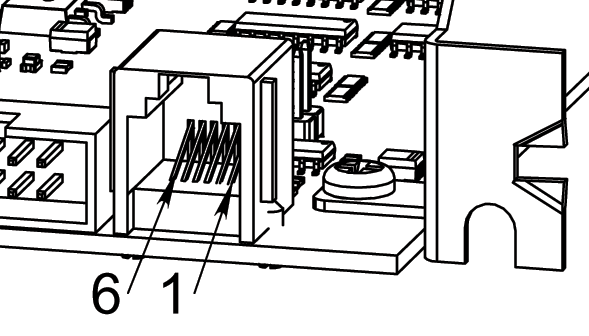

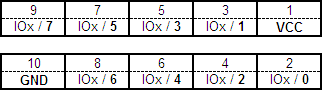

- 10.2. Pin numbering of RS485-bus connector

- 10.3. Pinout of RS485-bus connector

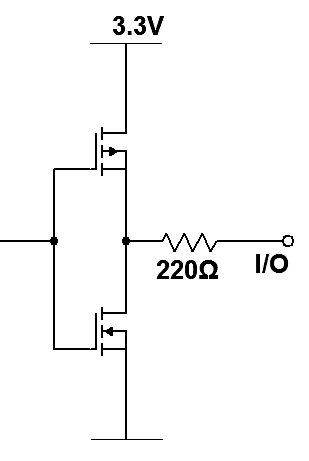

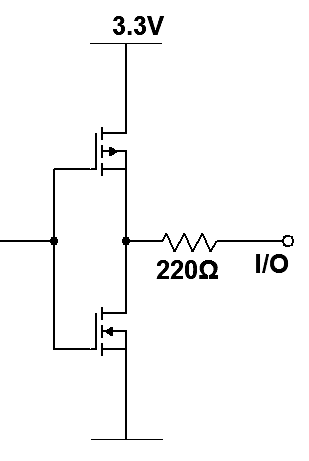

- 10.4. Equivalent circuit of an output pin. Direction of I/O pins depending on configuration see chapter 4.9

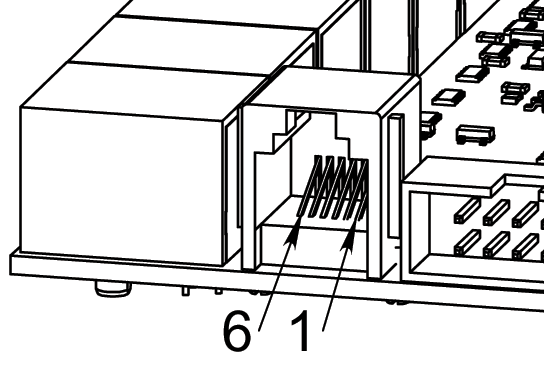

- 10.5. Pin numbering of CAN-bus connector

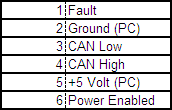

- 10.6. Pinout of CAN-bus connector

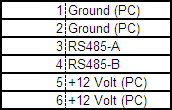

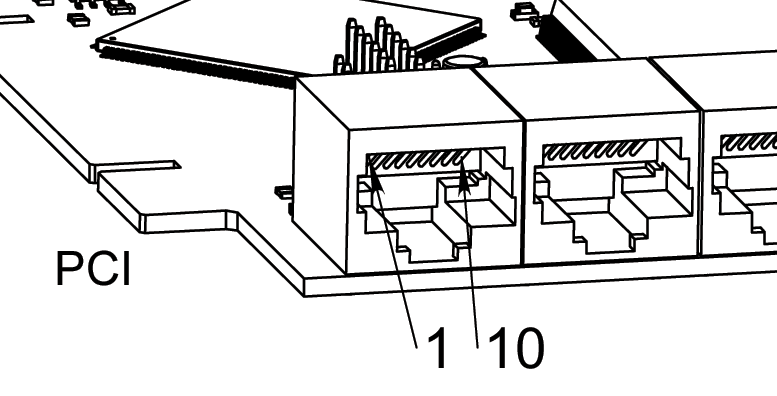

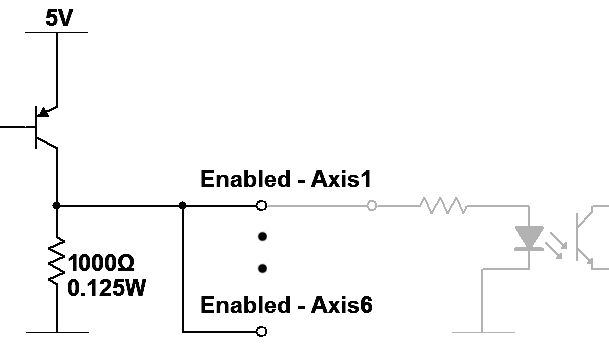

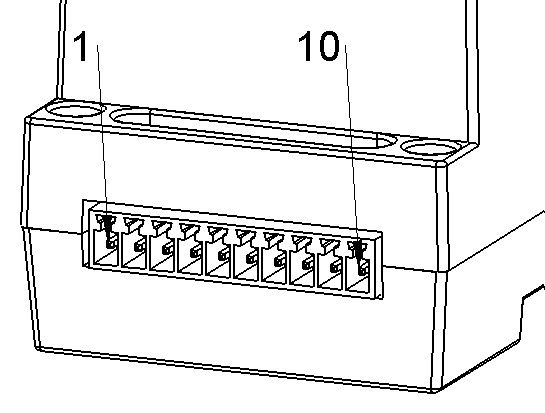

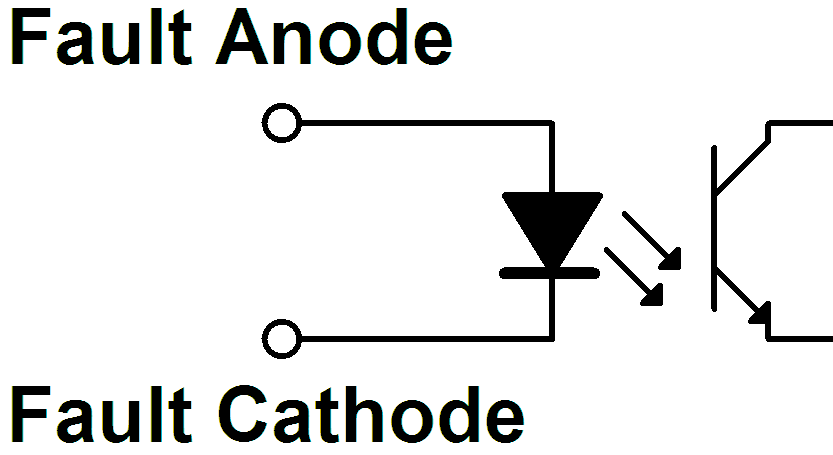

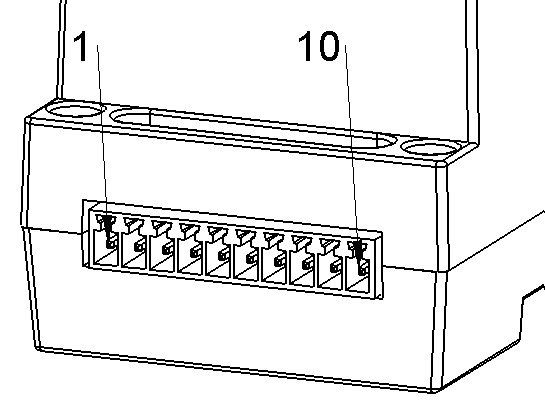

- 10.7. Pin numbering of axis connectors

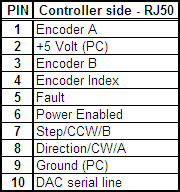

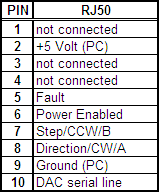

- 10.8. Pinout of axis connectors

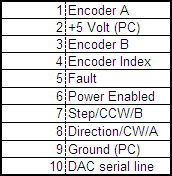

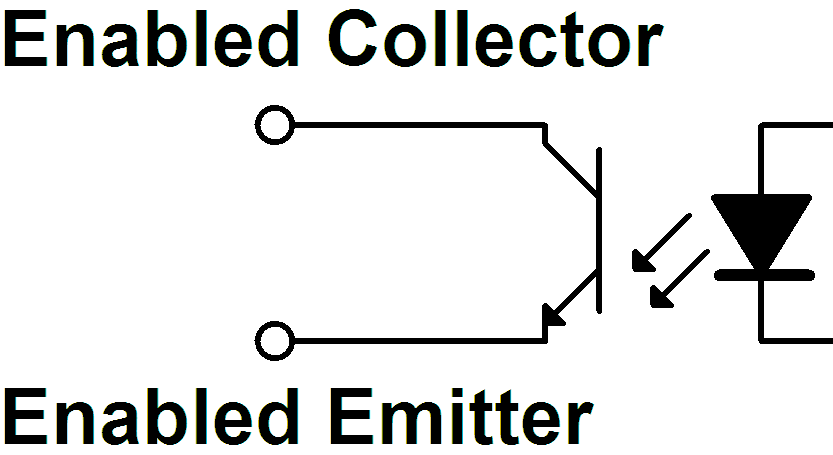

- 10.9. Equivalent circuit of fault input for an axis

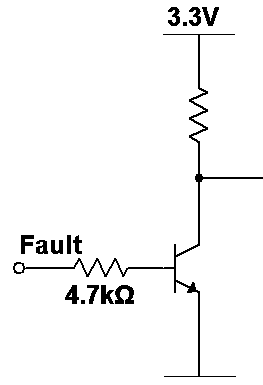

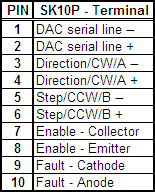

- 10.10. Equivalent circuit of enabled outputs

- 10.11. Equivalent circuit of output pins (Step, Direction, and DAC serial line) on axis connectors

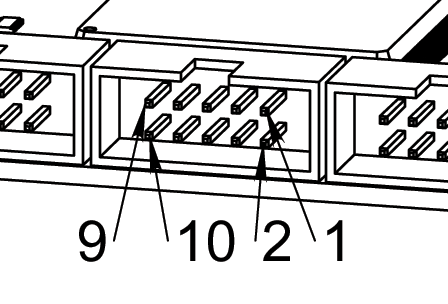

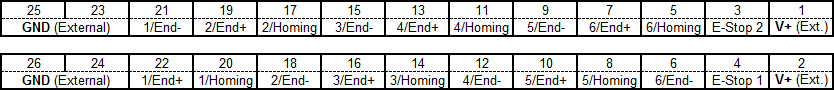

- 10.12. Pin numbering of homing & end switch connector

- 10.13. Pinout of homing & end switch connector

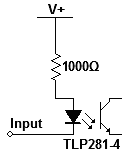

- 10.14. Equivalent circuit of input pins

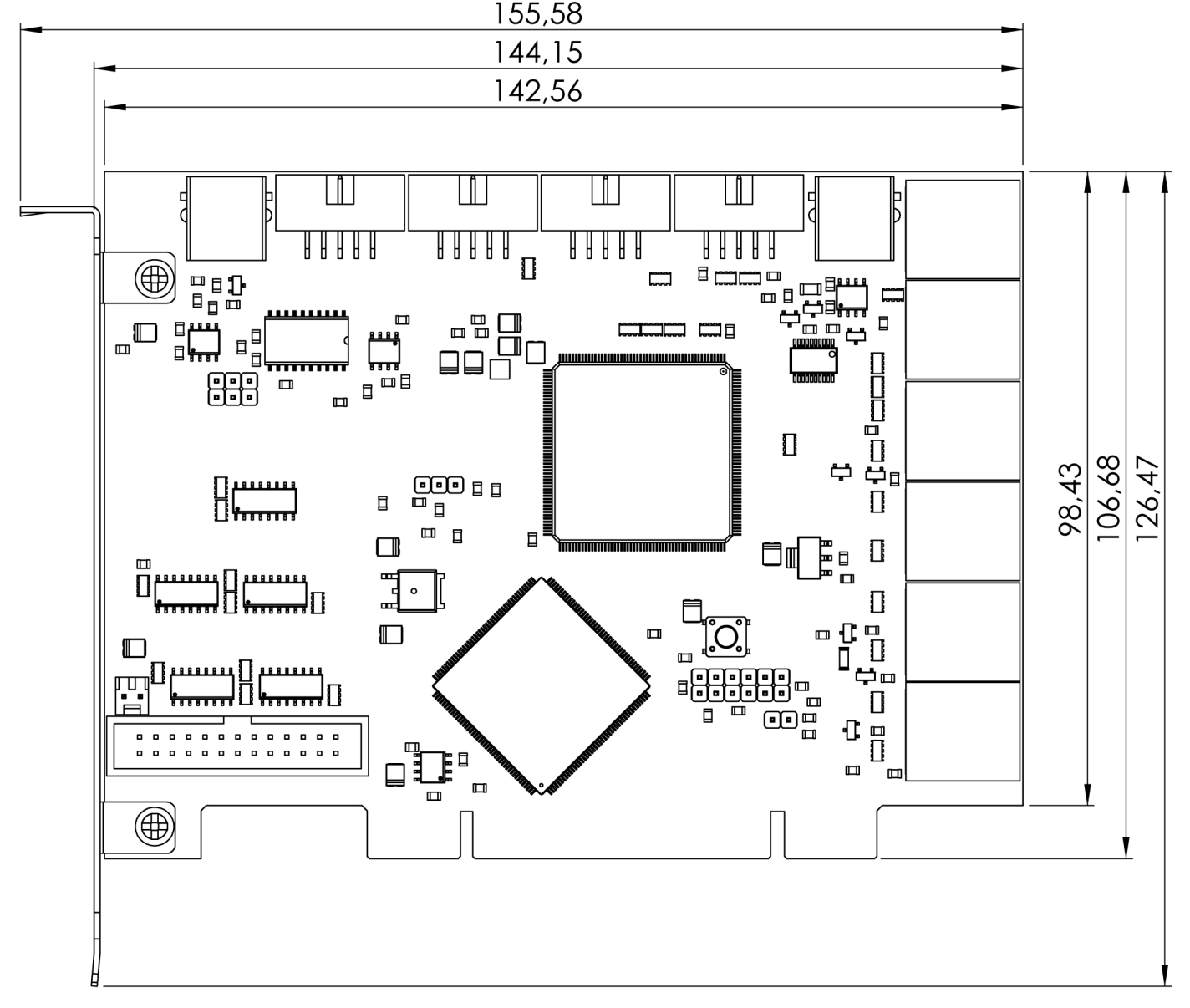

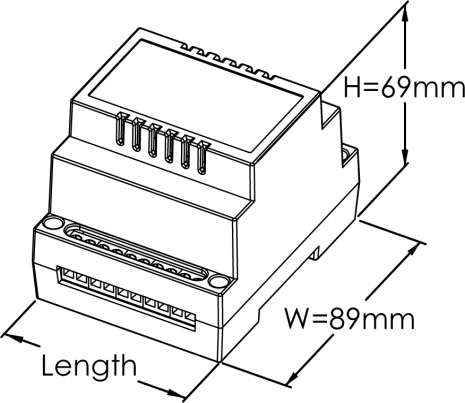

- 10.15. Mechanical dimension

- 10.16. Axis interface modules: Differential line driver, Digital to analogue converter, Optical isolator, Encoder/ reference breakout

- 10.17. Analogue system with encoder feedback

- 10.18. Incremental digital system with encoder feedback and differential output

- 10.19. Incremental digital system with encoder feedback and TTL output

- 10.20. Incremental digital system with differential output

- 10.21. Incremental digital system with TTL output

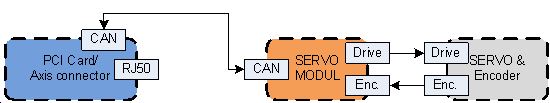

- 10.22. Absolute digital (CAN based) system

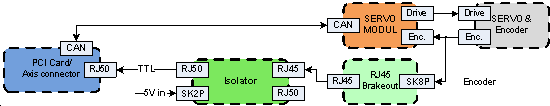

- 10.23. Absolute digital (CAN based) system with conventional (A/B/I) encoder feedback

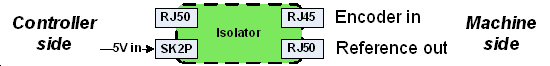

- 10.24. Block diagram of the optical isolator module connection

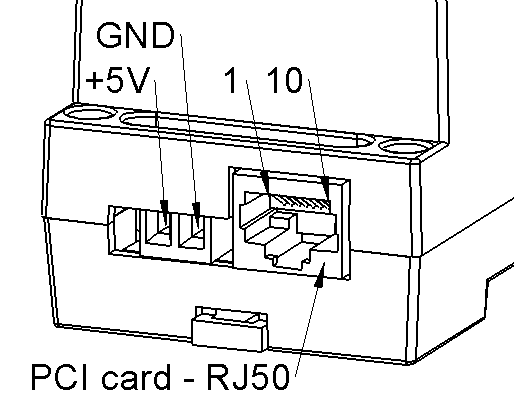

- 10.25. Pinout of PCI card (RJ50) connector and power input terminals

- 10.26. Pinout of reference output and encoder input connectors

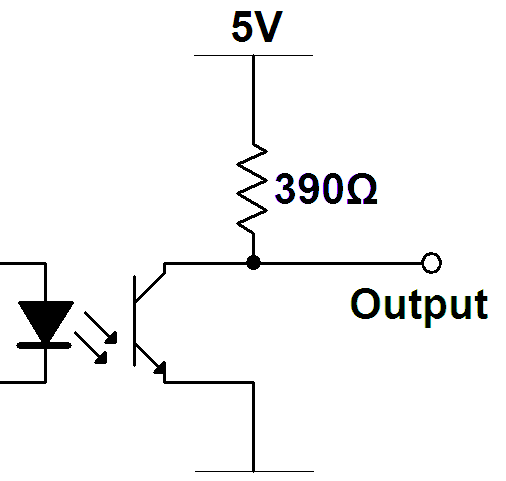

- 10.27. Equivalent circuit of output pins

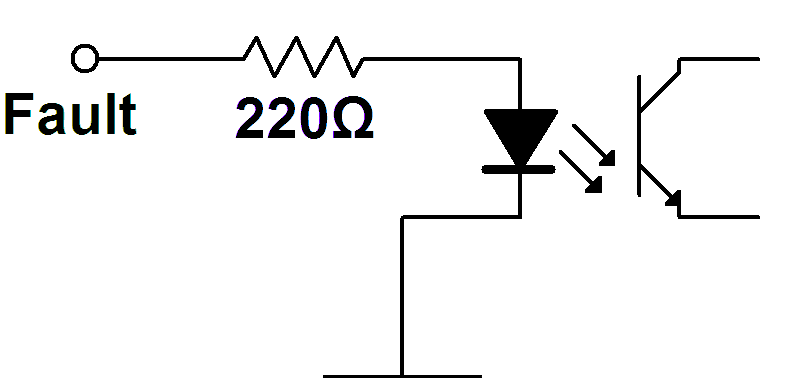

- 10.28. Fault signal input equivalent circuit

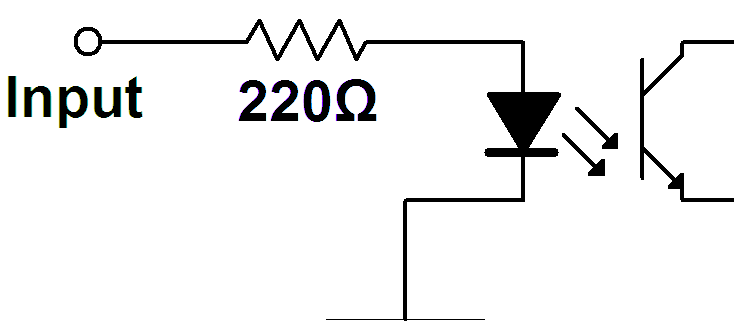

- 10.29. PCI card (RJ50) input equivalent circuit

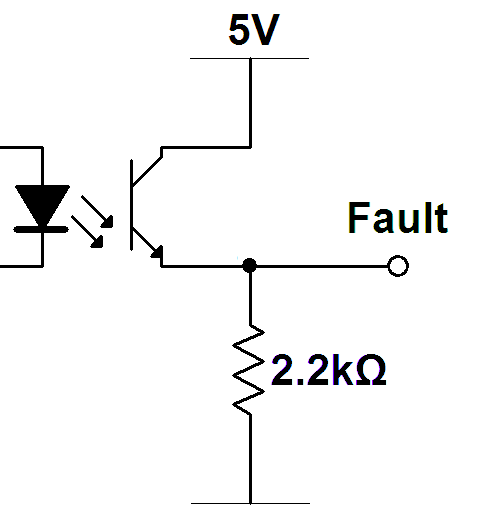

- 10.30. RJ50 to PCI card: Fault output equivalent circuit

- 10.31. Block diagram of the digital to analogue converter module connection

- 10.32. Controller side pinout

- 10.33. Machine side pinout

- 10.34. Equivalent circuit of fault signal output

- 10.35. Enable output

- 10.36. Equivalent circuit of fault input circuit

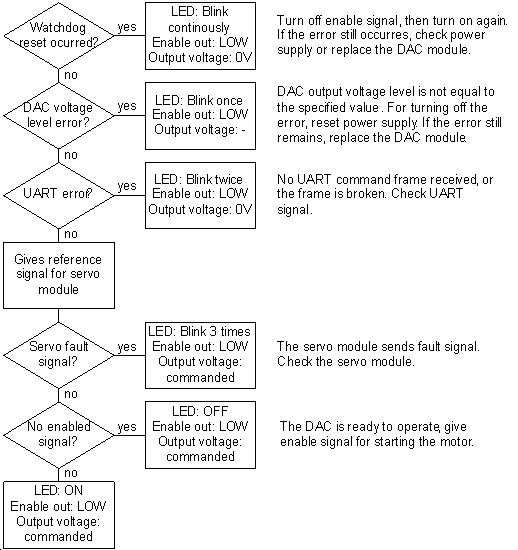

- 10.37. Fault management flowchart

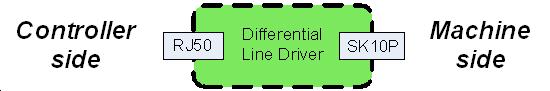

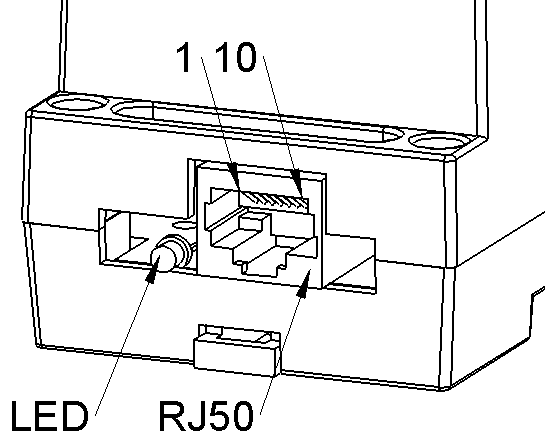

- 10.38. Block diagram of the differential line driver module connection

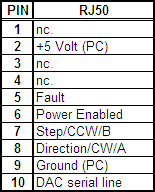

- 10.39. Controller side pinout

- 10.40. Machine side pinout

- 10.41. Optocoupler

- 10.42. Fault input circuit equivalent circuit

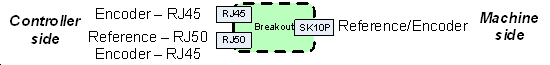

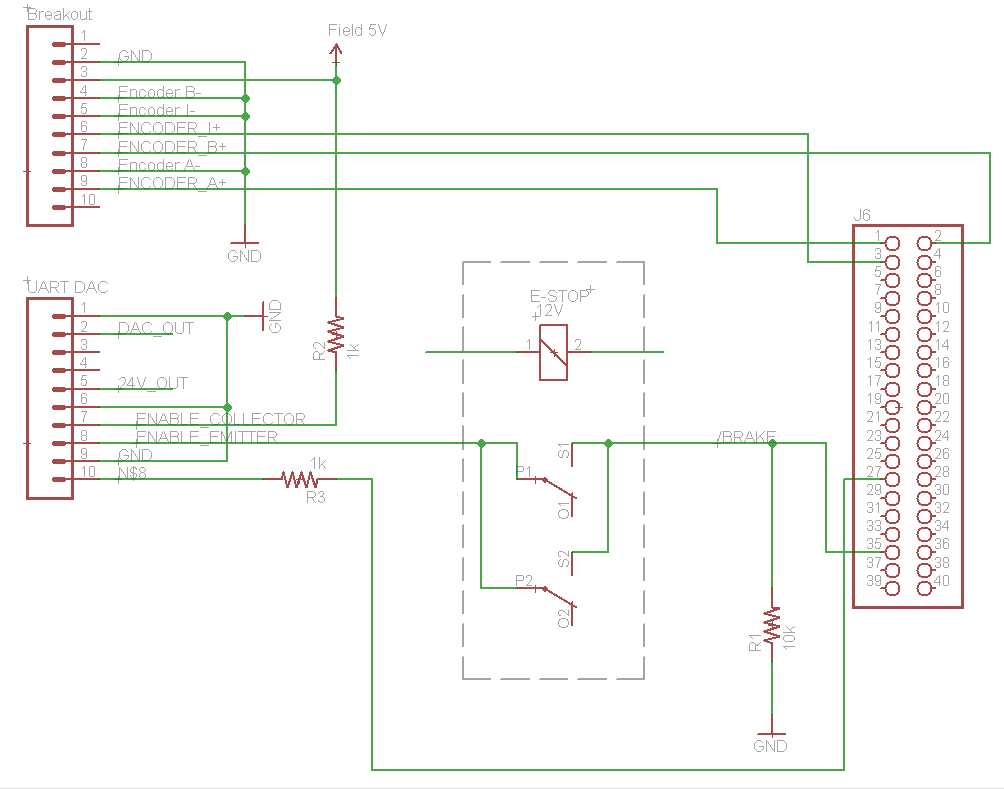

- 10.43. Block diagram of the breakout module connection

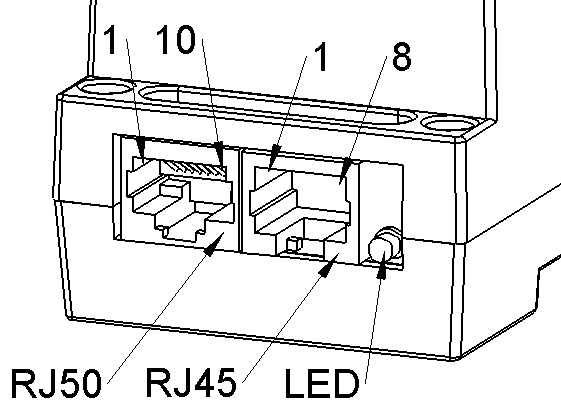

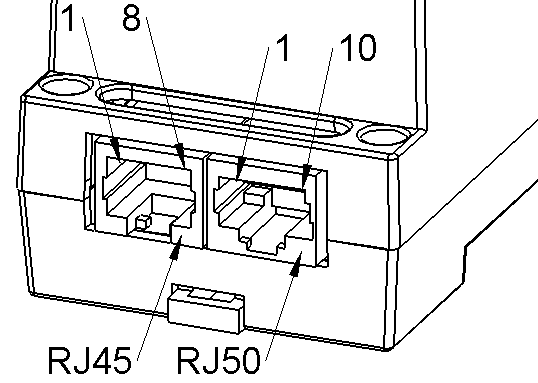

- 10.44. Pin numbering of RJ50 and RJ45 modular connectors

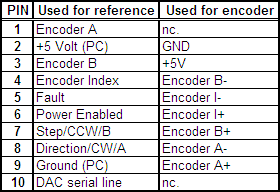

- 10.45. Encoder pinout

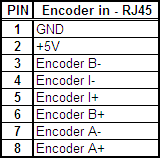

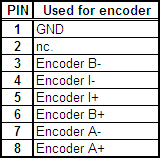

- 10.46. Encoder pinout

- 10.47. Terminal connector pinout

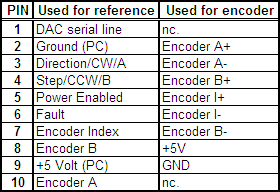

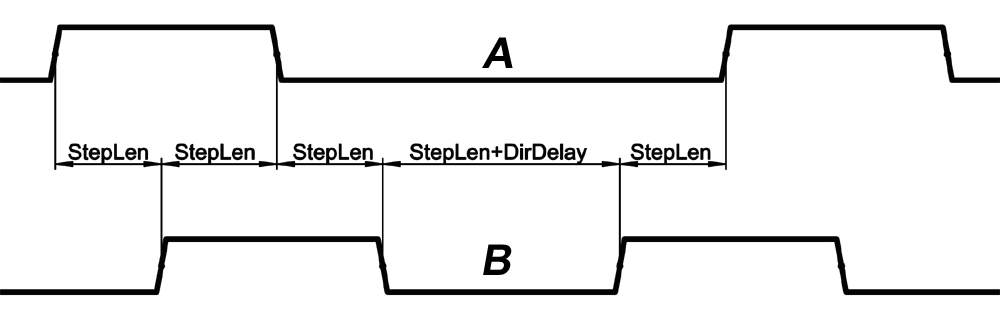

- 12.1. Step/Dir type reference

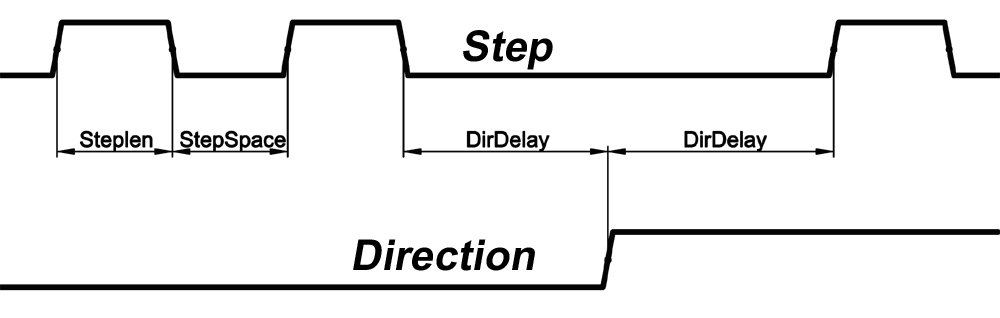

- 12.2. Up/Down count (CW/CCW) reference

- 12.3. Quadrant (A/B) type reference

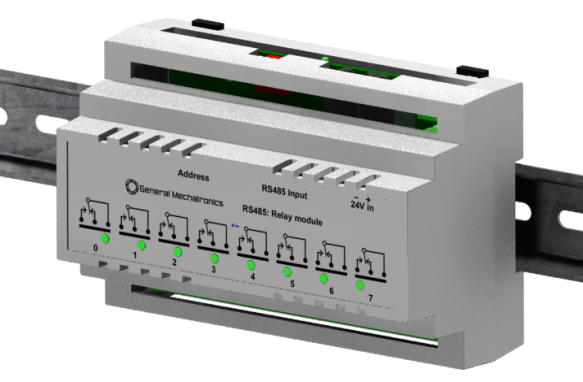

- 13.1. 8-channel relay output module

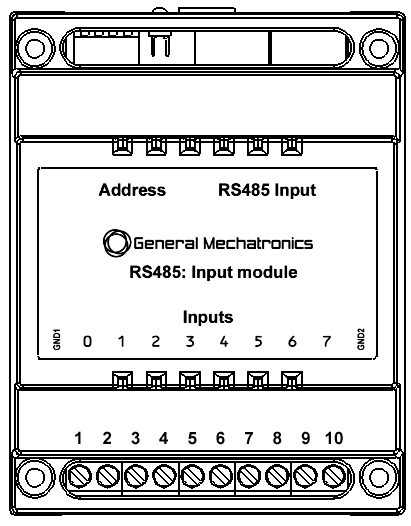

- 13.2. 8-channel digital input module

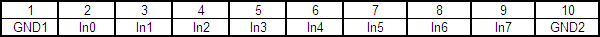

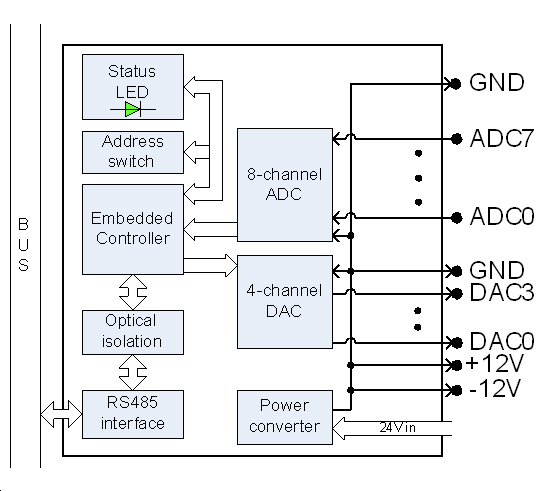

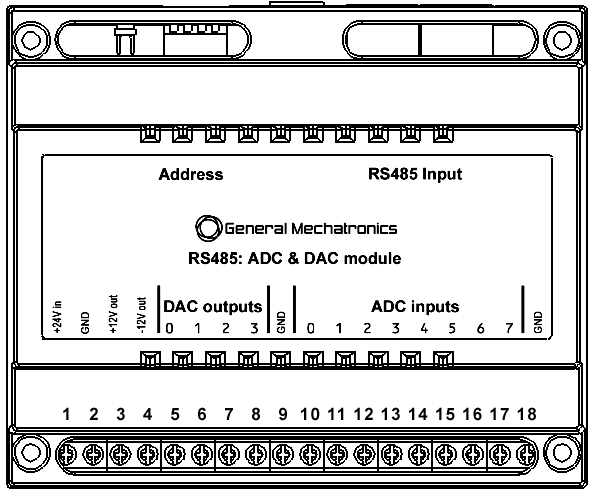

- 13.3. 8 channel ADC and 4-channel DAC module

- 13.4. Teach Pendant module

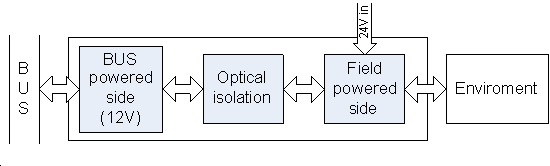

- 13.5. Powering of the nodes

- 13.6. Bus setting

- 13.7. Connecting of the nodes

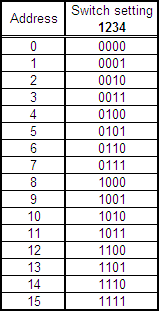

- 13.8. Node NBC addressing

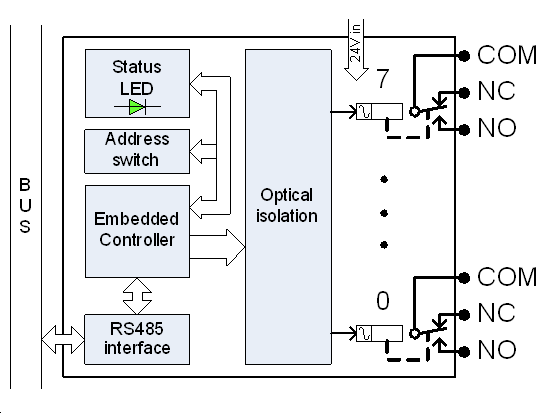

- 13.9. Relay output module

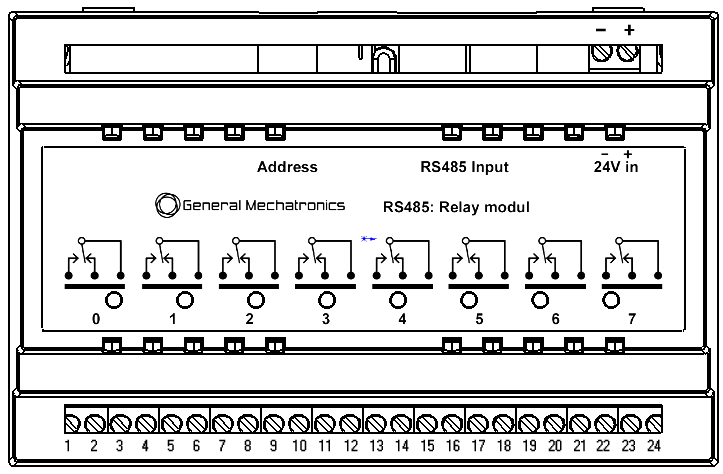

- 13.10. Numbering of output terminal connector and 24 input

- 13.11. Output connection diagram

- 13.12. Pin assignment table: NO: Normally Open, NC: Normally Closed, COM: Common

- 13.13. Digital input module

- 13.14. Equivalent circuit of digital input lines

- 13.15. Numbering of input terminal connector

- 13.16. Pin assignment table

- 13.17. AD & DC module

- 13.18. Numbering of the terminal connector

- 13.19. Pin assignment table

- 13.20. Teach pendant module

- 13.21. Connectors and pin numbering of the teach pendant module

- 13.22. Pin assignment table of the digital input connector

- 13.23. Mechanical dimensions

- 13.24. (http://grabcad.com/library/robot-puma-560) Downloaded: 2 November 2013.

- 13.25. Block diagram of the control

- 13.26. Connection of the robot and of the control

Chapter 1. Introduction: Trends in robotics

According to a report by the Japanese Ministry of Economy, Trade and Industry [1], the robot industry market will be rearranged dramatically (see Figure 1.1). Dominance will shift from the classical industrial robots used in manufacturing to so-called service robots, which already lead in scientific journals and conferences although their market segment has so far been almost negligible. This segment is expected to grow most rapidly in the near future. The projection reflects the increasing use of robots in offices, hospitals and similar settings. (In most cases these are mobile robots.)

![Robot industry market projections [1]](images/image_0.png)

Until now, ordinary people could see robots only on TV and had no real physical contact with them. Robots were purely engineering equipment, used by engineers. Industrial robots are clearly used and programmed by robot specialist engineers. As robots appear in small and medium-sized enterprises, they are handled by engineers who are not specialized in robotics. The efficiency of robot programming methods must therefore be improved in order to avoid the losses caused by frequent changeovers in small-scale production. There is thus an increasing need to make the training of robots more automated and, at the same time, to enable robots to fulfil more and more sophisticated tasks.

For automated material handling an industrial robot is a very handy solution; however, programming its work tasks needs special attention. Offline programming of industrial robots is difficult because it relies on very accurate system setups: the virtual programming environment must be carefully calibrated to capture the real-life setup so that the robot program does not have to be changed on site. These problems could be avoided by using online programming, but during online programming the robot cannot produce anything. This results in a continuous demand for new and effective robot teaching methods. In industrial robotics the most challenging obstacle is that programming an industrial robot for complex operations takes approximately 400 times longer than executing the actual task [2].

In the next step, robots will be used by non-engineers. From the perspective of robot use, people can be divided into four main groups:

- robot specialist engineers

- engineers who are not robot specialists

- non-engineers who are interested in robotics

- elderly people who are reluctant to use robots

For robots in our daily life it is not enough to execute a pre-programmed sequence of actions. They must be able to adapt to a changing environment and make their own decisions, and in addition they have to fit socially into the human environment. This requires a more sophisticated robot control method. Using them must be as simple as (or simpler than) using any office or household device. Service robots are designed for more sophisticated tasks than other devices, so the robot control method and the artificial intelligence must also handle the communication tasks of human-robot interaction. Alongside hardware design and low-level software development, this train of thought raises several additional questions, such as:

- What should a social robot look like?

- How should we communicate with the robot?

- Is it possible for a robot to have emotions?

- What is the definition of emotions?

People have always felt attached to their personal belongings (phone, car, etc.). This attachment can be even stronger towards service robots. Turning this currently one-sided attachment into a mutual relationship can not only bring obvious marketing advantages but also enhance cooperative effectiveness in human-system interactions.

1.1. Human Robot Cooperation on shopfloors

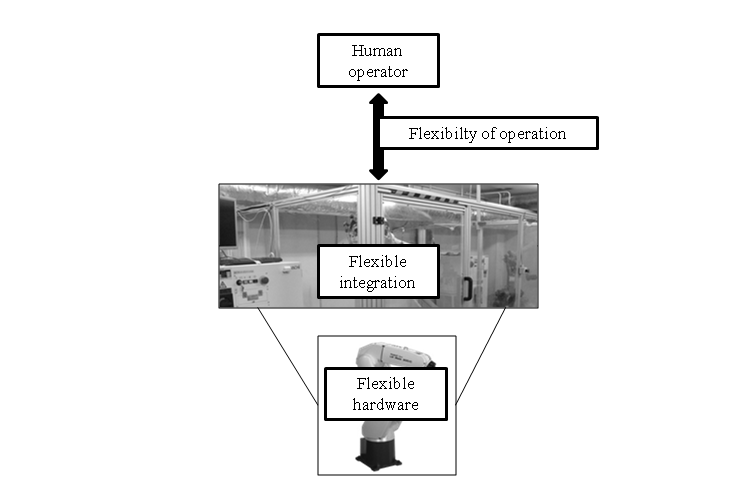

While service robots are about to enter everyday life, significant effort is still devoted to research on robotic manipulators. As service robots become friendlier and more natural, the underdevelopment of the operation and programming possibilities of manipulators becomes clear. Since small and medium enterprises (SMEs) are turning to automation, the need for flexible robot cell integration is rising. Flexibility may depend on several factors:

- Hardware flexibility (robot, CNC machine)

- Integration flexibility (reconfiguration of robot cell)

- Operation flexibility (human robot interaction)

The first two components are addressed by both robot manufacturers and information technology researchers. The main challenge in this field is to provide a standard protocol for the different components that make up the whole production system. The service-oriented architecture (SOA) paradigm [3] offers a scientifically accepted approach and remains in the mainstream of interest [4, 5, 6].

On the other hand, the least flexible point of a system defines its overall flexibility. The problem is well defined in [7]: most flexible robot cells are sold with custom operating software, and as a result the key to operational flexibility remains in the hands of the integrator. To bring more adaptivity into operation, [7] presents a set of robust control software. Furthermore, SMEs are often not in a position to employ highly trained operating personnel, so easy programming and configuration contribute to flexibility (see Figure 1.2). Human-robot cooperation remains in focus because of the goal of simple robot programming.

1.1.1. Robot operation in shared space

The easiest and most natural way of programming a robot is to teach the task by hand. Moving the manipulator by hand implies danger: the robot must be energized, so a robust and safe control system is needed. Any autonomous movement of the manipulator is potentially dangerous when humans must stay or work within reach of the robot. Controlling the human-robot interaction force offers a solution, and implementing impedance control [8] with a force sensor has shown the feasibility of collaboration without fences. Another control scheme is presented in [9], which compares PD and sliding mode controller algorithms.

The other way to overcome this issue is to develop compliant robot systems. The lack of robustness in previous systems prompted research into new technology: [10] presents a control system for a manipulator actuated by pneumatic muscles, while [11] and [12] use direct-drive motors with a wire rope mechanism.
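To make the idea of controlling the interaction force more concrete, the following minimal sketch simulates one axis that reacts to a measured external force like a virtual mass-spring-damper. It is only an illustration of the general impedance principle, not the controller described in [8]; the function names `impedance_step` and `settle`, the parameter values and the integration scheme (semi-implicit Euler) are all assumptions made for this example.

```python
def impedance_step(x, v, f_ext, x_ref, m, b, k, dt):
    """Advance a 1-DoF virtual impedance by one time step.

    Target behaviour: m*a + b*v + k*(x - x_ref) = f_ext,
    where f_ext is the force measured by the (assumed) force sensor.
    """
    a = (f_ext - b * v - k * (x - x_ref)) / m  # acceleration from the impedance law
    v = v + a * dt                             # semi-implicit Euler: velocity first
    x = x + v * dt                             # then position with the new velocity
    return x, v


def settle(f_ext, steps=5000, dt=0.001, m=1.0, b=20.0, k=100.0):
    """Apply a constant external force and return the final position."""
    x, v = 0.0, 0.0
    for _ in range(steps):
        x, v = impedance_step(x, v, f_ext, 0.0, m, b, k, dt)
    return x


if __name__ == "__main__":
    # A constant push displaces the axis until the virtual spring balances it.
    print(settle(10.0))
```

With such a law the axis yields to a human push by an amount set by the virtual stiffness `k` and returns to the reference when released; lower stiffness gives a softer, safer feel at the cost of positioning accuracy.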

The trend in industrial manipulator control research points clearly towards robust but compliant systems to merge accuracy and safety.

1.1.2. Flexible human robot interaction

In the framework of human-robot cooperation the most important aspect is bilateral communication. Physical interaction carries the safety problems mentioned in the previous subsection, and the robot's awareness of its environment is still a significant problem. The more effective and flexible the intended interaction with the user, the more sophisticated the recognition technology required.

An interesting example is presented in [13]. With the traditional approach, an additional channel must be used to communicate information about the object handed to the robot by the user. Using a tactile sensor gives the robot system better potential to understand human intentions, thus expanding the modularity of the interaction.

From the operator's point of view, a better understanding of the system can be achieved through user interfaces adapted to various factors:

- Complexity of the robot cell

- Complexity of the task performed by the robot cell

- Competence of the operator

- Information needed

The key to better flexibility is to transform the traditional and very technical graphical user interfaces between humans and industrial robots (see Figure 1.3). The focus should shift from the practical operation of a robot cell towards cognitive programming and operation. Research on this involves other fields such as psychology, usability and human factors.

Traditionally, industrial robots are machines with as little human interaction as possible. Including the human in the operation not only increases flexibility and efficiency but also improves the understanding of human-robot relations and is a new step toward better overall trust in automation.

1.2. Engineering concepts for service robotics

Robots in the service sector have to fulfil more and more sophisticated tasks. This is the same trend as in the evolution of industrial robots. The first industrial robot was created in 1937 by Griffith P. Taylor [14]. It consisted almost entirely of mechanical parts and had only one electric motor. It could stack wooden blocks in pre-programmed patterns. The program was stored on punched paper, which activated solenoids. Nowadays industrial robots can weld, paint, mill, etc.

From one point of view, the simplest robots at home are washing machines, blenders, dishwashers, etc. These devices were created to help with our daily routine tasks. The next step of this evolution is help with our daily service-type tasks. Because of the nature of service tasks, most robots in the service sector are mobile robots.

Several responsive robot platforms exist to help us in some way, or simply for fun. Roomba [15] and Navibot [16] help to clean the household in a simple and convenient way. There are also dog-like devices such as AIBO [17] and Genibo, but these only try to act like a real dog at a very primitive level. Some human-inspired robots are also available, such as Honda's ASIMO [18] and Sony's QRIO [19], but these complex and technically very advanced platforms also only scratch the surface of real social interaction between machine and human.

For the elderly there are therapy robots such as Paro [20] or Kobie, which can perceive light, sound, temperature and touch, and can thus sense their environment and the people around them. These robots can interact with the user in simple ways. They can learn the behavioural preferences of the elderly user and react to their names. Even “simple” toys like these can reduce the stress level of the user, help to increase socialization among the elderly and make communication with caregivers smoother.

1.2.1. Movement of the robots

Several types of mobile robots exist, such as tracked, wheeled, legged, wheeled-legged, leg-wheeled, segmented, climbing or hopping robots. The control methods of these robots are implemented individually for every construction. In most cases the control algorithms use the virtual centre of motion method, in which the path of the motion describes the path of this point [21], [22]. We can consider the whole mobile robot as a point (the centre of gravity, or virtual centre of motion). The velocity and the angular velocity of the centre of gravity define the motion of the robot (at constant speed). Along the path of the motion we prescribe the velocity and the angular velocity of the robot at every moment.

The most common drive concepts for mobile robots are the differential drive and the steered vehicle. Path planning for these mechanical constructions can be extremely difficult [23], and indoor environments raise further questions (see Figure 1.4).

For differential-drive mobile robots path planning is simpler, because the robot can turn around without changing its position. The orientation of the robot always coincides with the direction of motion. These robots (and steered vehicles as well) have only 2 DoF on the ground plane.
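The point-robot description above can be illustrated with a short sketch that moves a pose (x, y, heading) forward from a prescribed velocity and angular velocity. It assumes a planar robot whose velocity always points along its heading (the usual unicycle idealization) and that both quantities are constant within one time step; the function names `integrate_pose` and `follow_profile` are hypothetical.

```python
import math


def integrate_pose(x, y, theta, v, omega, dt):
    """Advance the pose of the virtual centre of motion by one time step.

    Assumes v (m/s) and omega (rad/s) are constant during dt, so the
    point moves on a straight line (omega == 0) or on a circular arc.
    """
    if abs(omega) < 1e-9:
        # Straight-line motion along the current heading
        x += v * dt * math.cos(theta)
        y += v * dt * math.sin(theta)
    else:
        r = v / omega  # signed arc radius
        x += r * (math.sin(theta + omega * dt) - math.sin(theta))
        y -= r * (math.cos(theta + omega * dt) - math.cos(theta))
        theta += omega * dt
    return x, y, theta


def follow_profile(pose, profile, dt):
    """Apply a sequence of (v, omega) commands, one per time step."""
    for v, omega in profile:
        pose = integrate_pose(*pose, v, omega, dt)
    return pose


if __name__ == "__main__":
    # Drive straight for 1 s, then along a left-hand arc for 1 s.
    print(follow_profile((0.0, 0.0, 0.0), [(1.0, 0.0), (1.0, math.pi / 2)], 1.0))
```

Prescribing the pair (v, omega) at every moment therefore fixes the whole path once the starting pose is known.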
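The ability of a differential-drive robot to turn on the spot follows directly from its kinematics, as the sketch below shows. It assumes two wheels on a common axis separated by `track_width`, with wheel rim speeds given in m/s; the function name `diff_drive_twist` is hypothetical.

```python
def diff_drive_twist(v_left, v_right, track_width):
    """Convert left/right wheel rim speeds into body velocity (v, omega).

    v is the forward speed of the midpoint between the wheels (m/s),
    omega the rotation rate about the vertical axis (rad/s).
    """
    v = 0.5 * (v_right + v_left)
    omega = (v_right - v_left) / track_width
    return v, omega


if __name__ == "__main__":
    # Equal and opposite wheel speeds: rotation without translation.
    print(diff_drive_twist(-0.2, 0.2, 0.4))
    # Equal wheel speeds: straight motion without rotation.
    print(diff_drive_twist(0.3, 0.3, 0.4))
```

Only two quantities (v and omega) can be commanded, which is the 2 DoF limitation mentioned above: the robot cannot translate sideways.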

In 1994 Stephen Killough invented omni wheels [24]. These are wheels with small discs around the circumference, which are perpendicular to the rolling direction. This type of wheel has no geometrical constraint perpendicular to the rolling direction. With this type of configuration we can obtain a 3 DoF holonomic system (x, y, rot z). Most land animals can move in 3 DoF: turning and moving in one direction at the same time. Ordinary drive systems (steered wheels, differential drive) cannot do this.
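As an illustration of the 3 DoF holonomic motion, the following sketch computes wheel rim speeds for a commanded body velocity (vx, vy, omega). The layout is an assumption for this example only: three omni wheels mounted at 0°, 120° and 240° around the centre, each at distance `radius` and driving tangentially. The text above does not prescribe a particular wheel arrangement, and the name `omni_wheel_speeds` is hypothetical.

```python
import math

# Assumed mounting angles of the three wheels, measured from the robot x axis
WHEEL_ANGLES = [math.radians(a) for a in (0.0, 120.0, 240.0)]


def omni_wheel_speeds(vx, vy, omega, radius):
    """Inverse kinematics of a three-omni-wheel base.

    vx, vy: body translation velocity in the robot frame (m/s)
    omega:  rotation rate about the vertical axis (rad/s)
    radius: distance of each wheel from the robot centre (m)
    Returns the rim speed each wheel must produce (m/s).
    """
    return [
        -math.sin(a) * vx + math.cos(a) * vy + radius * omega
        for a in WHEEL_ANGLES
    ]


if __name__ == "__main__":
    # Translate along x while rotating: impossible for a differential drive.
    print(omni_wheel_speeds(0.3, 0.0, 1.0, 0.1))
```

Because translation and rotation enter the wheel speeds independently, the robot can look in one direction while moving in another.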

1.2.2. Informatics concepts

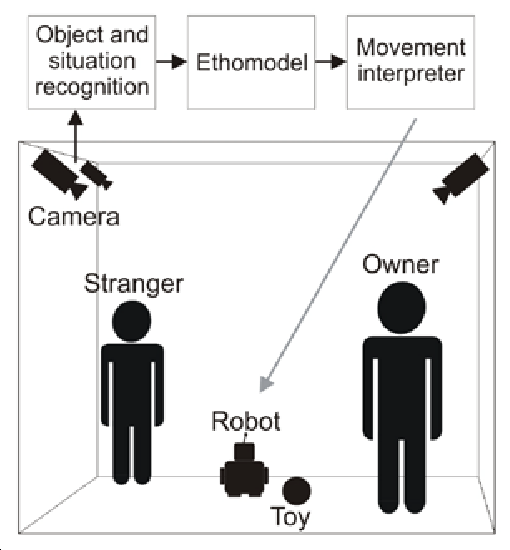

The artificial intelligence of a service robot system can be implemented on the robot, or distributed between the robot and the external environment (Intelligent Space). The Intelligent Space (iSpace) is an intelligent environment which provides both information and physical supports to humans and robots in order to enhance their performances. In order to observe the dynamic environment, many intelligent devices, which are called Distributed Intelligent Network Devices, are installed in the iSpace. [25, 26]

At the current state of the art, the greatest problem is the self-localization of robots (together with orientation, obstacle avoidance, path planning, etc.). Several sophisticated methods exist for this problem [27].

Could they be moved without requiring our presence? [28] Optical markers are commonly used for this kind of positioning [29] (Figure 15). The most widely known optical marker is the QR code, which was designed solely with visual recognition and redundant information storage in mind (Figure 16).

1.3. Etho-Robotics

Ethology will play a major role in future development of social robotics because the emergence of new robotic agents does not only pose technological questions [30]. Only a broad synergic view of biological causality (ultimate and proximate), which has been practiced in ethology for at least half a century [31], can provide the necessary theoretical network which is able to handle future challenges.

Perhaps a recent analogy helps to reveal our intentions with regard to social robotics. Yoon and Park [32] investigated the head structure of woodpeckers in order to find out how the woodpecker’s head is protected against the vibration caused by drumming behaviour. The motion of a biological being also carries communicative meaning.

As a result of human evolution, we can usually recognize the purpose, the behavior and the mood of an animal. The goal is to give artificial devices this natural interoperability, creating a form of human-machine interaction that serves human needs. Instead of specifying an improvised implementation based on individual intuitions, the characteristics of etho-inspired machine behavior have to be defined with a standardized methodology. These definitions rely on organized observations of natural behaviors, together with a collection of the observers' perceptions. When the tests are prepared, the behavioral variables to be observed and analyzed have to be defined; these are the variables that carry meaning for a human witness in a given scene and situation. As in many disciplines, the tests have to be performed with many subjective individuals in order to obtain an objective result. The analysis consists of two simple steps. First, the impressions and any lateral information evoked by the main character of the test situation are collected. Then the impressions and information are associated with the behavioral variables in a systematic way that allows a weighted overlap between the nodes. The weight factors are derived from the statistics of the many observations performed.
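
The second step, deriving weight factors from observation statistics, might be sketched as a simple relative-frequency count. This is only one possible reading of the method; the record format, the function name `association_weights` and the sample records are invented for illustration and are not measured data.

```python
from collections import Counter, defaultdict


def association_weights(observations):
    """Map each impression to weighted behavioral variables.

    observations: iterable of (impression, behavioral_variable) pairs, one
    pair per observer report. The weight of a variable is the fraction of
    reports of that impression that were associated with it.
    """
    counts = defaultdict(Counter)
    for impression, variable in observations:
        counts[impression][variable] += 1

    weights = {}
    for impression, per_variable in counts.items():
        total = sum(per_variable.values())
        weights[impression] = {var: n / total for var, n in per_variable.items()}
    return weights


# Hypothetical reports from four observers (illustration only).
reports = [
    ("heads for the target", "looking direction"),
    ("heads for the target", "looking direction"),
    ("heads for the target", "looking direction"),
    ("heads for the target", "moving direction"),
]
weights = association_weights(reports)
print(weights)
```

Because one impression may be linked to several variables with different weights, the overlap between the nodes is preserved rather than forced into a single assignment.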

An illustrative example is a predator that runs toward a specified target (position) along a non-straight trajectory because an obstacle is in the way. Most observers can infer the goal position even though the direction of the run does not point at the target. Here the essential behavioral variable is the looking direction, which carries the lateral information.

Using these analyzed measurements, a fuzzy model can be created that implements the initial concept. In many cases the implementation requires new technologies. Animal-like locomotion with distinct looking direction, body orientation and moving direction motivated the use of holonomic robot platforms. Figure 17 shows the example scene above, where the implemented solution can be validated by repeating the observation tests with the robot and confirming that the lateral information is reflected in its behavior.
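
To make the idea of decoupled looking and moving directions concrete, the following is a deliberately small two-rule fuzzy sketch. It is not the model used by the authors: the membership bounds (0.5 m and 2.0 m), the function names and the weighted-average blending of bearings (valid only for small angle differences without wrap-around) are all assumptions.

```python
def ramp_down(x, lo, hi):
    """Membership that is 1 below lo, 0 above hi and linear in between."""
    if x <= lo:
        return 1.0
    if x >= hi:
        return 0.0
    return (hi - x) / (hi - lo)


def decoupled_directions(target_bearing, detour_bearing, obstacle_distance):
    """Return (moving direction, looking direction) in radians."""
    near = ramp_down(obstacle_distance, 0.5, 2.0)  # fuzzy set "obstacle is near"
    far = 1.0 - near                               # fuzzy set "obstacle is far"

    # Rule 1: IF obstacle is near THEN move along the detour bearing.
    # Rule 2: IF obstacle is far  THEN move along the target bearing.
    # The rule outputs are combined by a weighted average.
    moving = near * detour_bearing + far * target_bearing

    # The gaze keeps pointing at the goal, carrying the lateral information.
    looking = target_bearing
    return moving, looking


print(decoupled_directions(target_bearing=0.0, detour_bearing=0.6, obstacle_distance=1.25))
```

A holonomic platform can execute such an output directly, since its moving direction does not have to coincide with the direction its body or head is facing.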

1.3.1. Informatics concepts with etho-inspiration

The best concept for the artificial intelligence is to use the abstract ethological model of the 20,000-year-old human-dog relationship as the basis for the interaction between humans and computing systems [6]. The mathematical description of the dog’s attachment behavior towards its owner is an essential aspect of setting up the ethological communication model. Such an approach requires interaction among various scientific disciplines, including psychology, cognitive science, social sciences, artificial intelligence, computer science and robotics. The main goal is to find ways in which humans can interact with these systems in a “natural” way.

These systems do not have emotions, but they can act in a way that makes us believe that they are actually fond of us. While a mathematical model of the system is needed, the complexity of the applied solution must fit the complexity of the problem. For neural networks this concerns the number of neurons and connections; for fuzzy logic systems there is a minimal number of fuzzy sets and fuzzy rules. Since elderly people are naturally mistrustful toward new technologies, a solution like this could make modern devices easier to use and make the interactions feel more natural. Such a device could substitute for assistance dogs in some special situations, or could cooperate with pets to serve elderly people in need.

As mentioned in the previous section, markers are used for robot localization. Existing markers were designed to be practical rather than aesthetic, so people do not welcome them in their homes. Who would want the walls of their home or workplace to be covered with QR codes? Aesthetic appearance depends on the proportional harmonization of composition, tone, pattern and rhythm. The goal of aesthetic marker design is to create harmony between human visual recognition and the image processing of robots [29] (see Figure 18). Without appropriate engineering methods and devices, information science alone would not be sufficient to express emotions. As mentioned in section 1.2.1, holonomic movement is one of these etho-inspired engineering methods. The eyes of the robot are especially important in the expression of emotions (see Figure 19). Basic emotions can be made recognizable using basic geometrical shapes, colors and arrangements.

1.4. Conclusion for the introduction

Machines can often replace humans for more effective manufacturing, because machines outperform humans in terms of strength, precision and endurance. Humans, however, perform better than machines when flexibility and intelligence are required.

![Aesthetic markers, [10]](images/image_8.png)

This paper shows efficient methods and processes for teaching and optimizing complex robot tasks by introducing etho-robotics and flexible robotics. Intelligent user interfaces that combine information from several sensors in the manufacturing system will give the operator direct knowledge of the state of the manufacturing operation, so the operator will be able to determine the system state more quickly. The sensory system calculates and proposes the optimal process settings. The key elements are the new human-machine communication channels that help the human to comprehend the information from the sensory systems.

1.5. References for Robotics trends

[1] Ministry of Economics and Trade (METI). (2010). 2035nen ni muketa robotto sangyou no shourai shijou yosoku (Market forecast of robot industry in 2035). Retrieved: http://www.meti.go.jp/press/20100423003/20100423003-2.pdf

[2] T. Thomessen, P. K. Sannæs, and T. K. Lien, “Intuitive Robot Programming,” in Proc. 35th International Symposium on Robotics, 2004.

[3] F. Jammes, H. Smit, "Service-oriented Paradigms in Industrial Automation" IEEE Trans. on Industrial Informatics, vol. 1, no. 1, pp. , Feb 2005.,

[4] G. Candido, A.W. Colombo, J. Barata, F. Jammes, "Service-Oriented Infrastructure to Support the Deployment of Evolvable Production Systems" IEEE Trans. on Industrial Informatics, vol. 7, no. 4, pp. 759 - 767 , Nov 2011.

[5] R. Kyusakov, J. Eliasson, J. Delsing, J. van Deventer, J. Gustafsson, "Integration of Wireless Sensor and Actuator Nodes With IT Infrastructure Using Service-Oriented Architecture" IEEE Trans. on Industrial Informatics, vol. 9, no. 1, pp. 43-51, Feb 2013.

[6] M. GarciaValls, I.R. Lopez, L.F. Villar, "iLAND: An Enhanced Middleware for Real-Time Reconfiguration of Service Oriented Distributed Real-Time Systems" IEEE Trans. on Industrial Informatics, vol. 9, no. 1, pp. 228-236, Feb 2013.

[7] A. Ferrolho, M. Crisostomo, "Intelligent Control and Integration Software for Flexible Manufacturing Cells ," IEEE Trans. on Industrial Informatics, vol. 3, no. 1, pp. 3-11, Feb 2007.

[8] G. Ferretti, G. Magnani, and P. Rocco, “Impedance Control for Elastic Joints Industrial Manipulators,” IEEE Trans. Robot. Autom., vol. 20, no. 3, pp. 488–498, Jun. 2004.

[9] L.M. Capisani, A. Ferrara, "Trajectory Planning and Second-Order Sliding Mode Motion/Interaction Control for Robot Manipulators in Unknown Environments" IEEE Trans. on Industrial Electronics, vol. 59, no. 8, pp. 3189-3198, Aug 2012.

[10] Tae-Yong Choi, Ju-Jang Lee, "Control of Manipulator Using Pneumatic Muscles for Enhanced Safety ," IEEE Trans. on Industrial Electronics, vol. 57, no. 8, pp. 2815 - 2825 , August 2010.

[11] C. Mitsantisuk, S. Katsura, K. Ohishi, "Force Control of Human–Robot Interaction Using Twin Direct-Drive Motor System Based on Modal Space Design" IEEE Trans. on Industrial Electronics, vol. 57, no. 4, pp. 1383 - 1392, April 2010.

[12] C. Mitsantisuk, K. Ohishi, S. Katsura, "Control of Interaction Force of Twin Direct-Drive Motor System Using Variable Wire Rope Tension With Multisensor Integration" IEEE Trans. on Industrial Electronics, vol. 59, no. 1, pp. 498-510, Jan 2012.

[13] K. Suwanratchatamanee, M. Matsumoto, S. Hashimoto, "Robotic Tactile Sensor System and Applications ," IEEE Trans. on Industrial Electronics, vol. 57, no. 3, pp. 1074 - 1087 , March 2010.

[14] Griffith P. Taylor, "An Automatic Block-Setting Crane". Meccano Magazine (Liverpool UK: Meccano) 23 (3): 172. March 1938.

[15] Jones J.L., “Robots at the tipping point: the road to iRobot Roomba”, IEEE Robotics & Automation Magazine, Vol. 13, Issue 1, pp. 76-78, Mar. 2006.

[16] Eric Guizzo, “Robot Vacuums That Empty Themselves”, IEEE Spectrum, 16. Jan. 2012.

[17] Fujita M. “On activating human communications with pet-type robot AIBO”, Proceedings of the IEEE, Vol. 92, Issue 11, Nov. 2004.

[18] Sakagami Y, Watanabe R, Aoyama C, Matsunaga S, Higaki N, Fujimura K, “The intelligent ASIMO: system overview and integration”, Intelligent Robots and Systems, Vol. 3, pp. 2478-2483, 2002.

[19] Tanaka F, Suzuki H, “Dance interaction with QRIO: a case study for non-boring interaction using an entrainment ensemble model”, Robot and Human Interactive communication, pp. 519-424, 2004.

[20] Shibata T, Kawaguchi Y, Wada K, “Investigation on living people with Paro at home”, Robot and Human Interactive Communication, pp. 1131-1136, Toyama, 2009.

[21] Martin Udengaard, Karl Iagnemma, “Kinematic Analysis and Control of an Omnidirectional Mobile Robot in Rough Terrain” in Intelligent Robots and Systems, San Diego, pp. 795-800, 2007.

[22] H. Adachi, N. Koyachi, T. Arai, A. Shimizu, Y. Nogami, “Mechanism and Control of a Leg-Wheel Hybrid Mobile Robot” in International Conference on Intelligent Robots and Systems, Kyongju, pp. 1792-1797, 1999.

[23] S.A. Arogeti, Danwei Wang, Chang Boon Low, Ming Yu, " Fault Detection Isolation and Estimation in a Vehicle Steering System ," IEEE Trans. on Industrial Electronics, vol. 59, no. 12, pp. 4810-4820, Dec 2012.

[24] Stephen Killough, "1997 Discover Awards". Discover Magazine, Retrieved 22, September 2011.

[25] Kovács B., Szayer G., Tajti F., Korondi P. Nagy I., "Robot with Dog Type Behaviour", EDPE 2011, pp. 4, 5

[26] Qin Zhang, L. Lapierre, Xianbo Xiang, "Distributed Control of Coordinated Path Tracking for Networked Nonholonomic Mobile Vehicles," IEEE Trans. on Industrial Informatics, vol. 9, no. 1, pp. 472-484, Feb 2013.

[27] D. Lee, W. Chung, "Discrete-Status-Based Localization for Indoor Service Robots," IEEE Trans. on Industrial Electronics, vol. 53, no. 5, pp. 1737-1746, Oct 2006.

[28] W. Chung, S. Kim, M. Choi, J. Choi, H. Kim, C. Moon, J.-B. Song, "Safe Navigation of a Mobile Robot Considering Visibility of Environm," IEEE Trans. on Industrial Electronics, vol. 56, no. 10, pp. 3941-3950, Oct 2009.

[29] Korondi Péter, Farkas Zita, Fodor Lóránt, Illy Dániel, "Aesthetic Marker Design for Home Robot Localization". In: 38th Annual Conference of the IEEE Industrial Electronics Society (IECON 2012). Montreal, Canada, 2012.10.25-2012.10.28. pp. 5510-5515.

[30] Miklósi, Á., Gácsi, M. 2012. On the utilization of social animals as a model for social robotics. Frontiers in Psychology, 3: 75

[31] Tinbergen, N (1963). On aims and methods of ethology. Zeitschrift für Tierpsychologie, 20, 410- 433.

[32] Yoon, S-H, Park S. (2011). A mechanical analysis of woodpecker drumming and its application to shock-absorbing systems. Bioinsp. Biomim. 6, 1-12.

Chapter 2. Robot middleware

The primary goal of this chapter is to find a robot framework in this environment that is easy to use, reliable and also easy to extend. After the different robot middleware concepts are described, the most popular ones are compared.

2.1. Introduction

RT-middleware (Robot Technology Middleware) is a technology that implements several key concepts needed for the development of complex robot systems, even in geographically distributed environments. Through a useful API, reusable standardized components and communication channels, and some degree of automation, RT-middleware helps the user to build easily reconfigurable systems. The behavior of the components and the manner of interaction among them are standardized by the Robot Technology Component (RTC) specification. Until now there have been two implementations of the specification: one, OpenRTM.NET, is written in .NET, while the other is called OpenRTM-aist.

Within the robotics area, the task of robot systems can change quickly. If the job and the environmental circumstances change frequently, reusable and reconfigurable components are needed, in addition to a framework which can handle these changes. The effort needed to develop such components depends on the programming language, the development environment and the other frameworks used. Many programming languages can be applied for this process; developing a brand new framework, however, is a difficult task. By applying an existing framework as a base system, such as OpenRTM-aist in the case of this chapter, the associated development tasks can be dramatically simplified.

Frameworks and middleware are gaining popularity through their rich set of features which support the development of complex systems. Joining together any robot framework and robot drivers can form a complex and efficient system with relatively small effort. In many cases, the task of the system designer is reduced to the configuration of an already existing framework.

On the other hand, when an existing framework lacks only a set of new features, it can simply be extended with them. Improving an existing framework is easier than developing a new one, because the design and implementation of such a system requires specialized knowledge and skills (design and implementation patterns). In most cases the missing functionality can simply be embedded into the existing framework, although in some cases this is hard to achieve: an existing framework can be improved easily only if it is well designed and implemented. Even so, building a brand new system from scratch is a much longer process that requires much more effort.

In the area of robotics, there are many robot parts that share similar features, so the concept of robot middleware as a common framework for complex robot systems is obvious. Probably there is no framework which can fulfill all of the above requirements entirely.

2.2. Requirements of the robot middleware

In this section, I explore the requirements of robot middleware technologies in general and examine some existing robot approaches such as YARP, OpenRDK, OpenRTM-aist and ROS.

Robot middleware is a software middleware that extends communication middleware such as CORBA or ICE. It provides tools, libraries, APIs and guidelines to support the creation and operation of both robot components and robot systems. Robot middleware also acts as a glue that establishes connections among robot parts in a transparent way.

First of all, we should define the requirements and the actors of robot middleware. Generally, two actors use such technology: end-users and developers. Every robot middleware has to provide tools and mechanisms to facilitate the work of these actors.

The main use cases of robot middleware are shown in Figure 21. After analyzing these use cases we can define some functional requirements. Component developers design and implement components. For this development procedure, many programming languages and operating systems should ideally be supported. Tools are needed for generating skeletons and semi-working components to speed up development. The main activities of the developer actor should be generating skeleton code, filling in the business logic, compiling the binary code and executing test cases. Developers also create robot parts as components using their knowledge of programming languages, robot middleware and the specification of the given robot system. Moreover, the person who develops a component has to validate it as an end-user. In summary, from the developer's point of view, the main requirements for robot middleware are as follows:

Support for a variety of programming languages: in most cases the members of research and development teams who develop various robot parts will be familiar with different languages depending on the trend at any given time.

Support for a variety of operating systems: nowadays Windows and Linux operating system support is mandatory, as heterogeneous systems may rely on other libraries as well that are specific to these systems.

Skeleton generation and other tools for components: the framework should be able to generate skeleton code for component development in order to speed up the development process. For this reason, robot middleware technology should include templates and tools (preferably graphical tools) to aid component development. Although developers will have to learn how to use the tools to generate source code, this requires less effort than starting component development from scratch.

In contrast to the developer actor, an end-user is someone who has no knowledge of programming languages and is not interested in developing components. End-users simply want to use components for their own work. In their case only a minimum number of system parameters should be visible, namely those that are sufficient for the creation and operation of the robot system. Good graphical interfaces and visual aids are useful to end-users because they allow straightforward operation of the robot system. End-users have two main tasks. First, they often create complex robot systems using existing robot parts, and second, they often want to run previously created robot systems. The creation of a robot system can include a search for available robot parts, during which the user obtains information about the accessibility of available components. This accessibility information can depend on the specific robot middleware applied for the task, and may include IP addresses, internal identifiers or symbolic names. Unfortunately, most robot middleware systems only support online components. For this reason, robot parts have to be available during the robot system creation process, i.e. they have to be already running and registered.

End-users want to register their components in order to share functionalities with other end-users. After registration, the component can be found by all other participants. Usually end-users create custom robot systems using available components, so the second task is to find out which of the components are running. Once the robot systems are running, users will want to control them, i.e. activate/deactivate any or all of the components, and run them with various different parameter sets.

In summary, from the end-user point of view, the main requirements for robot middleware are as follows:

The overall change in the applications and general software components running on workstations in a research group should be minimal. In most cases the operation of any system that is relied on by end-users on a daily basis requires a special software environment. The installation of new software components may result in outage periods in terms of existing services.

User-friendly graphical interface: the graphical interface of robot middleware should be as user-friendly as possible.

Like developers, end-users would like to use Windows or Linux systems, therefore Windows and Linux operating system support is a strong requirement. End-users will not replace their familiar workstation environment with a new one just to accommodate the requirements of a new robot middleware.

In summary, the key consideration for a robotics middleware system to be useful is that it should provide an efficient API and as much automation as possible. Additionally, middleware systems have to support as many operating systems and programming languages as possible. Whenever a framework is applied to a specific task, some effort will always be needed to understand the relevant concepts and application details.

2.3. Existing robot middleware

One distributed environment for robot cooperation is OpenRDK. The concept is detailed by Calisi in [1]. The user’s robot system can be developed from a set of Agents through a simple process. A Module is a single thread inside an agent process. Every module has a repository in which a set of internal properties is published. Inter-agent (i.e. inter-process) communication is accomplished by two methods: property sharing and message sending. RConsole is a graphical tool for remote inspection and management of modules. It can be used both as the main control interface of the robot and for debugging while developing software. It is just an agent that happens to have a module that displays a GUI. The framework can be downloaded from [2].

Another important robot middleware platform is Yet Another Robot Platform (YARP). Communication in YARP generally follows the Observer design pattern (for more details see [3]). Every YARP connection has a specific type of carrier associated with it (e.g. TCP, UDP, MCAST (multicast), shared memory, within-process). Ports can be protected by SHA256-based authentication. Each port is assigned a unique name and is registered with a name server. The YARP name server, which is itself a special YARP port, maintains a set of records whose keys are text strings (the names of the ports). The remainder of each record contains whatever information is needed to make an initial connection to the given port.
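
The combination of named ports, a name server and the Observer pattern can be sketched in a few lines of in-process Python. This is a conceptual model only and does not use or imitate the YARP API; the classes `NameServer` and `Port`, their methods and the record layout are hypothetical.

```python
class NameServer:
    """Keeps one record per port name (hypothetical, in-process only)."""

    def __init__(self):
        self._records = {}

    def register(self, name, record):
        if name in self._records:
            raise ValueError(f"port name already registered: {name}")
        self._records[name] = record

    def query(self, name):
        return self._records[name]


class Port:
    """A named output port that notifies every connected observer."""

    def __init__(self, name, server, carrier="tcp"):
        self.name = name
        self._observers = []
        # The record holds what a peer needs for an initial connection.
        server.register(name, {"carrier": carrier, "port": self})

    def connect(self, callback):
        self._observers.append(callback)

    def write(self, message):
        for callback in self._observers:
            callback(message)


server = NameServer()
camera = Port("/camera/out", server, carrier="udp")

frames = []
# A reader looks the port up by name and attaches itself as an observer.
server.query("/camera/out")["port"].connect(frames.append)
camera.write("frame-1")
print(frames)
```

The reader never holds a direct reference to the writer at start-up; it only needs the unique port name, which is the role the name server plays.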

The third important robot middleware technology is OpenRTM-aist (documentation can be found in [4]), a convenient modular system also built on the Common Object Request Broker Architecture (CORBA). It follows the distributed components approach, in which the components have to be registered with a central naming service (OpenRTM-aist uses the name server of the CORBA system). The whole concept is detailed by Ando et al. in [5], [6] and [7]. Components can contain modules that can be loaded at any time. The framework is a robust software system implemented using design patterns, and it provides a larger feature set than the previously mentioned ones. The components of a robot system have equal rights; there is no central logic (except the naming service). Each RT-Component has an interface (a "port") for communication with other components. An RT system is constructed by connecting the ports of multiple components to each other in order to aggregate RT-Component functions. The advantage of OpenRTM-aist is that it provides a simple way to create various robot parts and make them cooperate. Two kinds of port can be used: the service port and the data port. Data ports carry asynchronous messages, while service ports establish synchronous communication. Using a service port is complicated because it requires knowledge of CORBA, which is an outdated technology. The connections among components have to be established at runtime by the end-user through a graphical interface. A convenient state machine concept is provided, i.e. the developer only has to override the appropriate methods. Several programming languages and the most widely used operating systems are supported. This is the second most popular robot framework in the world (and the most popular in Japan).
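
The "override the appropriate methods" style of component development can be illustrated with a minimal state machine base class. The class and hook names below (`Component`, `on_activated`, `on_execute`, `on_deactivated`) are hypothetical stand-ins chosen for this sketch; they are not the OpenRTM-aist classes, and the real framework has more states and callbacks.

```python
class Component:
    """Base class owning the state machine; subclasses override the hooks."""

    def __init__(self):
        self.state = "INACTIVE"

    # Hooks with empty default behavior.
    def on_activated(self):
        pass

    def on_execute(self):
        pass

    def on_deactivated(self):
        pass

    # State transitions are handled by the base class only.
    def activate(self):
        if self.state == "INACTIVE":
            self.on_activated()
            self.state = "ACTIVE"

    def deactivate(self):
        if self.state == "ACTIVE":
            self.on_deactivated()
            self.state = "INACTIVE"

    def tick(self):
        """Called periodically by the framework; runs logic only when active."""
        if self.state == "ACTIVE":
            self.on_execute()


class StepCounter(Component):
    """Example component: the developer writes business logic only."""

    def __init__(self):
        super().__init__()
        self.count = 0

    def on_activated(self):
        self.count = 0

    def on_execute(self):
        self.count += 1


counter = StepCounter()
counter.tick()          # ignored, component is still inactive
counter.activate()
for _ in range(3):
    counter.tick()
counter.deactivate()
print(counter.count, counter.state)
```

The developer never touches the transition logic, which is what makes the generated skeletons usable without understanding the whole framework.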

The final robot framework investigated is ROS (Robot Operating System), whose name suggests an operating system, but which provides features similar to the frameworks mentioned above (available at [8]). The concept is detailed by Quigley in [9]. The elements of a robot system again have to be registered with a central server; here the element is called a ROS node. A node is the basic unit and cannot be divided further in official ROS, but a third-party component called nodelet makes it possible to run several algorithms in one process (node). A central process has to be running (roscore, which starts a ROS master and the rosout node), providing central logging and a distributed parameter set. Connections among nodes are established automatically: if one running component advertises a topic and another subscribes to it, the two nodes learn about each other through the roscore API. The developer's work is supported thoroughly, but only a few tools are available for the end-user. This is the most popular robot middleware worldwide.
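
The automatic connection of nodes through a central master can be modelled with a small in-process registry. This sketch does not use rospy or roscpp and simplifies the real mechanism considerably; `Master`, `Publisher` and the topic name are hypothetical.

```python
class Publisher:
    """Handle returned to an advertising node."""

    def __init__(self, topic):
        self.topic = topic
        self.callbacks = []

    def publish(self, message):
        for callback in self.callbacks:
            callback(message)


class Master:
    """Central registry that matches publishers and subscribers by topic name."""

    def __init__(self):
        self._publishers = {}
        self._subscribers = {}

    def advertise(self, topic):
        publisher = Publisher(topic)
        self._publishers.setdefault(topic, []).append(publisher)
        # Connect subscribers that registered before this publisher existed.
        for callback in self._subscribers.get(topic, []):
            publisher.callbacks.append(callback)
        return publisher

    def subscribe(self, topic, callback):
        self._subscribers.setdefault(topic, []).append(callback)
        # Connect to publishers that are already running.
        for publisher in self._publishers.get(topic, []):
            publisher.callbacks.append(callback)


master = Master()
early, late = [], []

master.subscribe("/scan", early.append)   # subscriber starts first
scan_pub = master.advertise("/scan")      # publisher appears afterwards
scan_pub.publish("range=1.2")

master.subscribe("/scan", late.append)    # another subscriber joins later
scan_pub.publish("range=0.9")
print(early, late)
```

Neither side has to know the other's address or start order; agreeing on the topic name is enough for the connection to be made.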

In all of the investigated robot frameworks the distributed entity is a central concept, whether it is called an agent, a port, a component or a node. YARP offers more communication options, but it has a poor set of tools. Unfortunately, the development of YARP and OpenRDK has stalled. Only two robot frameworks are in active use and development today, OpenRTM-aist and ROS, so these two are compared in detail in the following sections.

2.4. Comparison of robot middleware from the point of view of developers

In this section the ROS and OpenRTM-aist robot middleware are examined from the developer's point of view. The complexity of the significant procedures and the available helper tools are compared.

2.4.1. Creating a new project

In the case of ROS a new package can be generated with a tool named catkin_create_pkg, which creates a folder containing a CMakeLists.txt and a package.xml. These files have to be modified if another package is needed as a dependency or a new executable has to be built. If only simple message sending or receiving is needed, the official tutorial can be adapted easily and the business logic can be placed into a single function. The tool does not generate any source file or class, but none is necessary for using ROS. The structure of a starting project is very simple and easy to understand. The basic features of ROS can be accessed through only three classes, and implementing the business logic does not require deep knowledge of the ROS system. C++ and Python are officially supported. A custom build tool called catkin_make is provided, which is an extension of the platform-independent CMake build system. Several Integrated Development Environments (IDEs) can be used, because CMake can export project files for them (at the moment CodeBlocks, Eclipse, Xcode, KDevelop and Visual Studio).

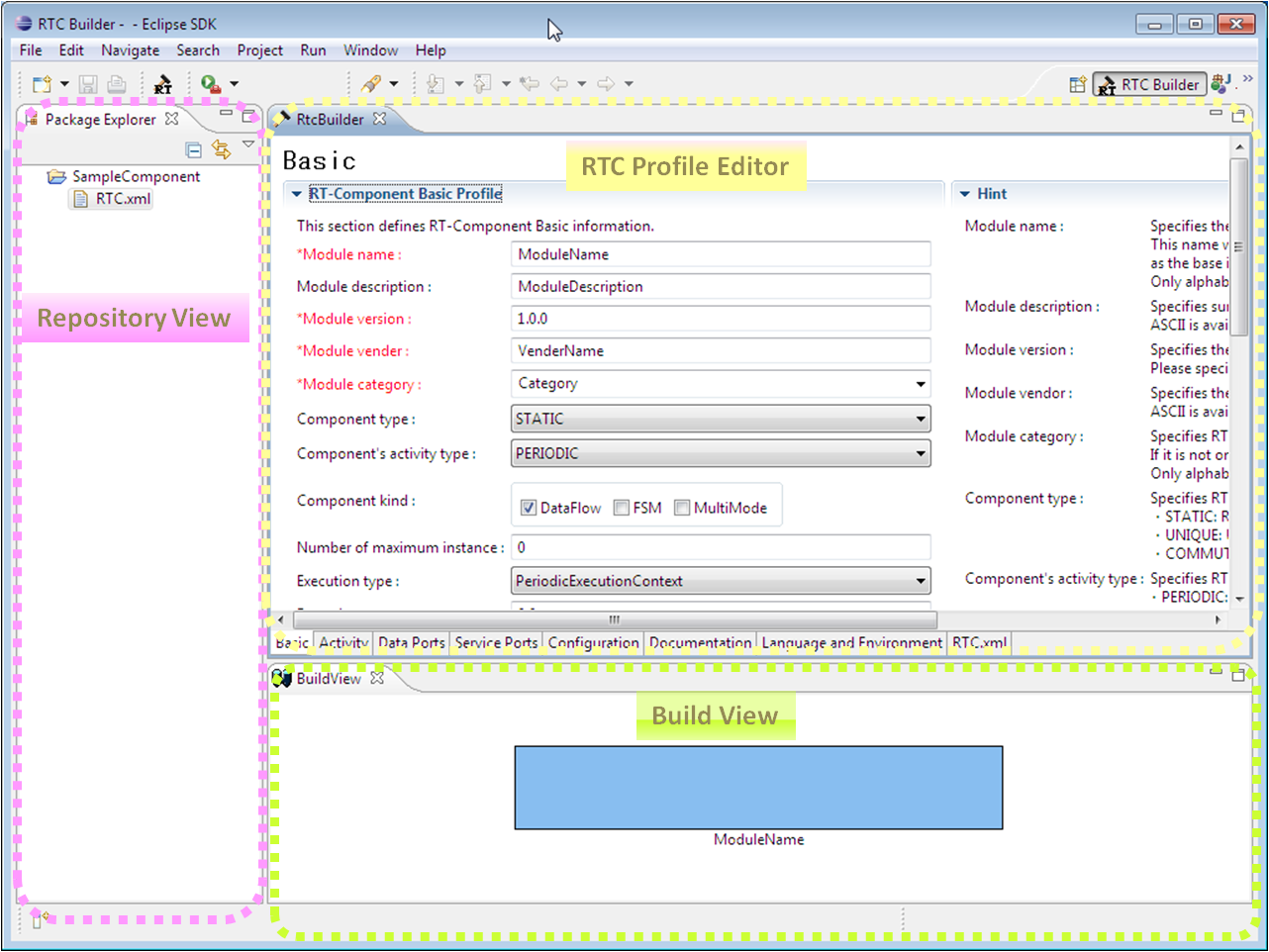

For project creation, the OpenRTM-aist middleware also contains a command line tool (rtc-template) and a graphical editor called RTC Builder (the graphical interface is shown in Figure 22).

The definition of each RTC component is stored in a programming-language-independent XML file, and the source code is generated automatically by the robot middleware framework from this XML file. Four files are created: one header and one C++ file for the class definition, one C++ source file containing the main function, and a makefile for the build process. The developer only has to fill in the body of each skeleton member function and add any external classes. Building the generated sources yields a minimal working system, but it is hard to understand and modify because substantial knowledge of the OpenRTM-aist system and the RTC specification is needed. In the generated files the component class is a subclass that contains overriding methods and design-pattern code. Beginners need to know 5-10 classes. Generating a beginner project requires at least 10 parameters, and a complicated case can need 20-30. The C++, Java and Python programming languages and the Eclipse and Visual Studio IDEs are supported.
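
Skeleton generation from a language-independent description can be sketched as follows. The XML layout below is invented for this illustration and is not the schema used by RTC Builder, and the sketch emits a Python class rather than the C++ files described above; `SPEC` and `generate_skeleton` are hypothetical names.

```python
import xml.etree.ElementTree as ET

# Hypothetical component description (not the real RTC Builder format).
SPEC = """<component name="Gripper">
  <callback name="on_activated"/>
  <callback name="on_execute"/>
</component>"""


def generate_skeleton(xml_text):
    """Return source code of a class with one empty method per callback."""
    root = ET.fromstring(xml_text)
    lines = [f"class {root.get('name')}:"]
    callbacks = root.findall("callback")
    if not callbacks:
        lines.append("    pass")
    for callback in callbacks:
        lines.append(f"    def {callback.get('name')}(self):")
        lines.append("        pass  # TODO: fill in the business logic")
    return "\n".join(lines) + "\n"


source = generate_skeleton(SPEC)
print(source)
```

The generated text compiles as it stands, so the developer starts from a minimal working component and only replaces the method bodies.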

2.4.2. Component development

More than 2000 third-party components are available for ROS and can be used freely in one's own robot system. Using these components, developing a new robot system can be quick and easy. If the system is well designed, i.e. the workflow of the components and the interfaces among them are specified precisely, the components can be developed in parallel thanks to the available ROS tools. From the command line, rostopic can send a message to a topic or listen for messages arriving on a specified topic, so the construction of one component does not block the development of another. Sharing a new functionality needs only a new method and variable definition and a simple function call.

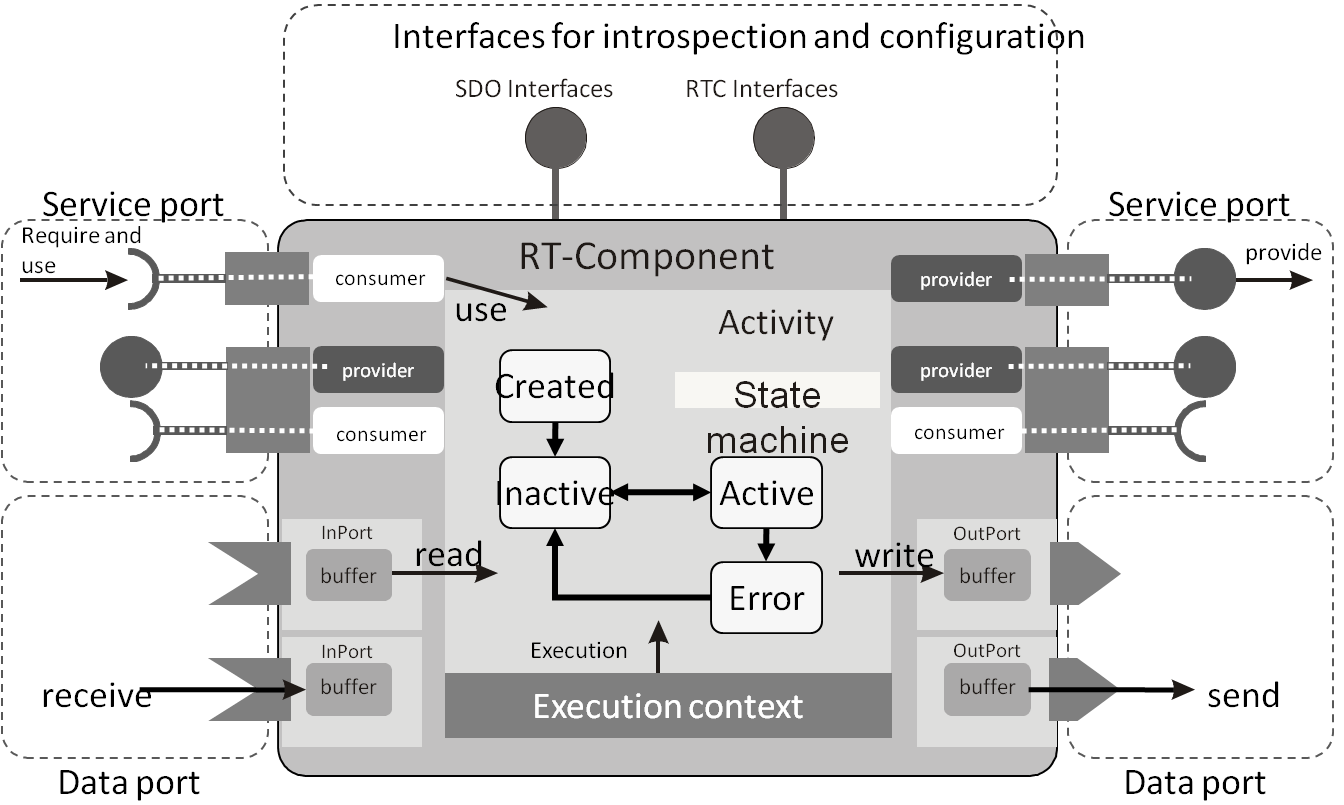

For OpenRTM-aist approximately 1000 components are available. Some of them can be used only on Windows, while others are restricted to Linux. Unfortunately, the documentation of most components is written in Japanese only, so we have to rely on machine translation. Debugging and testing tools that would help the developer are missing, so dummy components have to be implemented for this purpose. In most cases the correct use of the system services requires deep knowledge of CORBA, which increases the total development time. If a behaviour of a component is to be used remotely, additional steps are needed on both the provider and the consumer side, and in this case the use of CORBA cannot be avoided. The developer has to create a class containing the state machine methods and the business logic. The architecture of the component is shown in Figure 23.
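
A dummy component used for testing can be as simple as a canned data source and a recording sink placed around the component under test. The sketch below is framework-independent; `DummySource`, `DummySink` and the example `scale_component` are hypothetical and do not correspond to OpenRTM-aist classes.

```python
class DummySource:
    """Stands in for an upstream component and replays fixed samples."""

    def __init__(self, samples):
        self._samples = list(samples)

    def read(self):
        return self._samples.pop(0) if self._samples else None


class DummySink:
    """Stands in for a downstream component and records what it receives."""

    def __init__(self):
        self.received = []

    def write(self, value):
        self.received.append(value)


def scale_component(source, sink, gain):
    """Component under test: forwards every input multiplied by gain."""
    while (value := source.read()) is not None:
        sink.write(gain * value)


source = DummySource([1, 2, 3])
sink = DummySink()
scale_component(source, sink, gain=2)
print(sink.received)
```

With such stand-ins the component can be exercised before its real neighbours exist, at the cost of writing and maintaining the dummies by hand.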

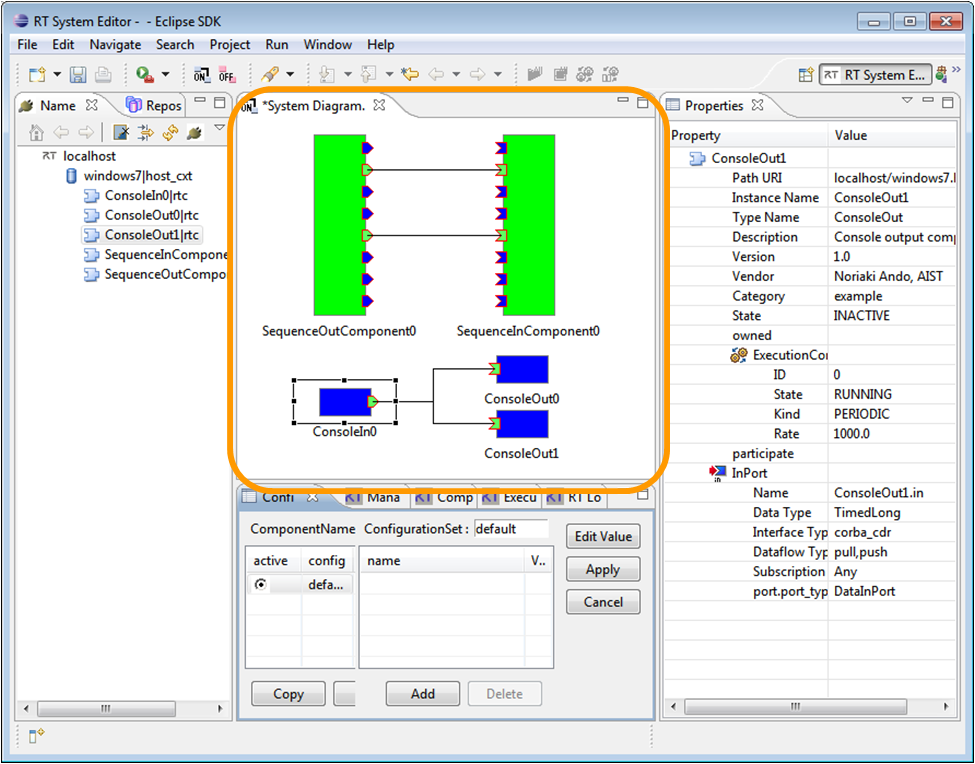

2.5. Services for end-users

The OpenRTM-aist robot framework offers more services to the end-user than the others. It supports the substitution of components, the creation of robot systems, changing the parameters of the robot and controlling the components. Some command line tools, which are Python scripts, are provided in the rtc_shell package. In addition, a graphical system editor is shipped as an Eclipse plugin. This system editor (and Eclipse itself) is large and its graphical capabilities are limited, but it is an effective supervisory interface for the system (the graphical interface is shown in Figure 24).

On the left side of the window the end-user can browse the online components; in the middle the actual robot system is shown; on the right side the main parameters of the component are shown. The end-user can start or stop the robot system or any of its components at any time. If this GUI is not sufficient, then a new tool has to be created. Unfortunately, detailed documentation of the OpenRTM-aist system is missing, so the source code has to be studied.

Only a few tools in ROS aid the end-user. Some graphical tools implemented in Qt help to view the log messages and to draw the structure of the robot system. Moreover, we should mention a promising browser-based tool called Robot Web Tools. This is a collection of JavaScript libraries that communicate with a Python server application (a bridge to ROS), but additional development is necessary.

2.6. Summary for robot middleware

OpenRTM-aist offers more features to end-users through its graphical interface, but it demands more knowledge from the developer. When the researcher would like to try several solutions, i.e. to vary the components, OpenRTM-aist is a better choice than ROS.

It also supports more programming languages and operating systems, so it is easy to integrate into an existing topology and to develop a new system. Unfortunately, the documentation is poor and part of it exists in Japanese only, a language that is not widely spoken outside Japan. The support of several operating systems was mentioned as an advantage, but it is also a drawback, because it can lead to a complicated and heterogeneous robot topology. If the robot system requires components that are available but run only on different operating systems, additional PCs are needed. OpenRTM-aist provides more tools and features to developers, but more knowledge and experience are needed to use them. Useful code can be found in the sample projects, which partly makes up for the missing documentation; however, the developer has to search for it and try it with different parameters. An active OpenRTM-aist community exists in Japan, but in most cases the questions and replies are written in Japanese only.

ROS is recommended for autonomous systems in which the connection of the robot parts is mandatory. In this case the graphical editor is not necessary. Because of the huge number of existing components, there is probably one or more that fulfils our requirements; these components are small, so understanding and modifying them is an easy task. It is easy to create a new project from scratch (easier than with OpenRTM-aist) because the creation of classes is optional: we only have to use existing classes and methods. The ROS core is continuously developed by a community; the seventh version is in progress. A lot of information about it, together with many questions and answers, is available on its web page.

2.7. References for robot middleware

[1] D. Calisi, A. Censi, L. Iocchi and D. Nardi, "OpenRDK: a modular framework for robotic software development," in Intelligent Robots and Systems, 2008. IROS 2008. IEEE/RSJ International Conference on, 2008.

[2]

[3]

[4] "OpenRTM-aist robot middleware official web page," [Online].

[5] N. Ando, S. Kurihara, G. Biggs, T. Sakamoto, H. Nakamoto and T. Kotoku, "Software deployment infrastructure for component based RT-systems," Journal of Robotics and Mechatronics, vol. 23, no. 3, 2011.

[6] N. Ando, T. Suehiro and T. Kotoku, "A software platform for component based RT-system development: OpenRTM-aist," in Simulation, Modeling, and Programming for Autonomous Robots, Springer, 2008.

[7] N. Ando, T. Suehiro, K. Kitagaki, T. Kotoku and W.-K. Yoon, "RT-middleware: distributed component middleware for RT (robot technology)," in Intelligent Robots and Systems, 2005 (IROS 2005). 2005 IEEE/RSJ International Conference on, 2005.

[8]

[9] M. Quigley, K. Conley, B. Gerkey, J. Faust, T. Foote, J. Leibs, R. Wheeler and A. Y. Ng, "ROS: an open-source Robot Operating System," in ICRA Workshop on Open Source Software, 2009.

[10] K. Saito, K. Kamiyama, T. Ohmae and T. Matsuda, "A microprocessor-controlled speed regulator with instantaneous speed estimation for motor drives," IEEE Trans. Ind. Electron., vol. 35, no. 1, pp. 95-99, Feb. 1988.

[11] A. G. Filippov, "Application of the theory of differential equations with discontinuous right-hand sides to non-linear problems in automatic control," in 1st IFAC Congress, 1960, pp. 923-925.

[12] A. G. Filippov, "Differential equations with discontinuous right-hand side," Ann. Math Soc. Transl., vol. 42, pp. 199-231, 1964.

[13] V. J. VanDoren, "Loop Tuning Fundamentals," Control Engineering, Reed Business Information, July 1, 2003.

[14] C. Chan, S. Hua and Z. Hong-Yue, "Application of fully decoupled parity equation in fault detection and identification of DC motors," IEEE Trans. Ind. Electron., vol. 53, no. 4, pp. 1277-1284, June 2006.

[15] F. Betin, A. Sivert, A. Yazidi and G.-A. Capolino, "Determination of scaling factors for fuzzy logic control using the sliding-mode approach: Application to control of a DC machine drive," IEEE Trans. Ind. Electron., vol. 54, no. 1, pp. 296-309, Feb. 2007.

[16] J. Moreno, M. Ortuzar and J. Dixon, "Energy-management system for a hybrid electric vehicle, using ultracapacitors and neural networks," IEEE Trans. Ind. Electron., vol. 53, no. 2, pp. 614-623, Apr. 2006.

[17] R.-E. Precup, S. Preitl and P. Korondi, "Fuzzy controllers with maximum sensitivity for servosystems," IEEE Trans. Ind. Electron., vol. 54, no. 3, pp. 1298-1310, Apr. 2007.

[18] V. Utkin and K. Young, "Methods for constructing discontinuous planes in multidimensional variable structure systems," Automat. Remote Control, vol. 31, no. 10, pp. 1466-1470, Oct. 1978.

[19] K. Abidi and A. Sabanovic, "Sliding-mode control for high precision motion of a piezostage," IEEE Trans. Ind. Electron., vol. 54, no. 1, pp. 629-637, Feb. 2007.

[20] F.-J. Lin and P.-H. Shen, "Robust fuzzy neural network sliding-mode control for two-axis motion control system," IEEE Trans. Ind. Electron., vol. 53, no. 4, pp. 1209-1225, June 2006.

[21] C.-L. Hwang, L.-J. Chang and Y.-S. Yu, "Network-based fuzzy decentralized sliding-mode control for cat-like mobile robots," IEEE Trans. Ind. Electron., vol. 54, no. 1, pp. 574-585, Feb. 2007.

[22] M. Boussak and K. Jarray, "A high-performance sensorless indirect stator flux orientation control of induction motor drive," IEEE Trans. Ind. Electron., vol. 53, no. 1, pp. 614-623, Feb. 2006.

[23] D. C. Biles and P. A. Binding, "On Carathéodory's Conditions for the Initial Value Problem," Proceedings of the American Mathematical Society, vol. 125, no. 5, pp. 1371-1376, PII S 0002-9939(97)03942-7, 1997.

[24] Filippov, A. G., "Application of the Theory of Differential Equations with Discontinuous Right-hand Sides to Non-linear Problems in Automatic Control," in 1st IFAC Congr., pp. 923-925, Moscow, 1960.

[25] Filippov, A. G., "Differential Equations with Discontinuous Right-hand Side," Ann. Math Soc. Transl., vol. 42, pp. 199-231, 1964.

[26] Harashima, F.; Ueshiba, T.; Hashimoto, H., "Sliding Mode Control for Robotic Manipulators," in 2nd Eur. Conf. on Power Electronics, Proc., pp. 251-256, Grenoble, 1987.

[27] P. Korondi, L. Nagy, G. Németh, "Control of a Three Phase UPS Inverter with Unbalanced and Nonlinear Load," in EPE'91 4th European Conference on Power Electronics, Proceedings vol. 3, pp. 3-180-184, Firenze, 1991.

[28] P. Korondi, H. Hashimoto, "Park Vector Based Sliding Mode Control," in K. D. Young, Ü. Özgüner (eds.), Variable Structure System, Robust and Nonlinear Control, Springer-Verlag, ISBN 1-85233-197-6, 1999.

[29] P. Korondi, H. Hashimoto, "Sliding Mode Design for Motion Control," in Studies in Applied Electromagnetics and Mechanics, vol. 16, IOS Press, ISBN 90 5199 487 7, 2000.

[30] Satoshi Suzuki, Yaodong Pan, Katsuhisa Furuta and Shoshiro Hatakeyama, "Invariant Sliding Sector for Variable Structure Control," Asian Journal of Control, vol. 7, no. 2, pp. 124-134, 2005.

[31] P. Korondi, J.-X. Xu, H. Hashimoto, "Sector Sliding Mode Controller for Motion Control," in 8th Conference on Power Electronics and Motion Control, vol. 5, pp. 5-254-5-259, 1998.

[32] Xu, J. X.; Lee, T. H.; Wang, M., "Design of variable structure controllers with continuous switching control," International Journal of Control, vol. 65, no. 3, pp. 409-431, 1996.

[33] Utkin, V. I., "Variable Structure Control Optimization," Springer-Verlag, 1992.

[34] Young, K. D.; Kokotovič, P. V.; Utkin, V. I., "A Singular Perturbation Analysis of High-Gain Feedback Systems," IEEE Trans. on Automatic Control, vol. AC-22, no. 6, pp. 931-938, 1977.

[35] Furuta, K., "Sliding Mode Control of a Discrete System," System Control Letters, vol. 14, pp. 145-152, 1990.

[36] Drakunov, S. V.; Utkin, V. I., "Sliding Mode in Dynamics Systems," International Journal of Control, vol. 55, pp. 1029-1037, 1992.

[37] Young, K. D., "Controller Design for Manipulator using Theory of Variable Structure Systems," IEEE Trans. on System, Man, and Cybernetics, vol. SMC-8, pp. 101-109, 1978.

[38] Hashimoto, H.; Maruyama, K.; Harashima, F., "Microprocessor Based Robot Manipulator Control with Sliding Mode," IEEE Trans. on Industrial Electronics, vol. 34, no. 1, pp. 11-18, 1987.

[39] Sabanovic, A.; Izosimov, D. B., "Application of Sliding Modes to Induction Motor," IEEE Trans. on Industrial Appl., vol. 17, no. 1, pp. 41-49, 1981.

[40] Vittek, J.; Dodds, S. J., "Forced Dynamics Control of Electric Drive," EDIS - Publishing Centre of Zilina University, ISBN 80-8070-087-7, Zilina, 2003.

[41] Utkin, V. I.; Sabanovic, A., "Sliding modes applications in power electronics and motion control systems," Proceedings of the IEEE International Symposium Industrial Electronics, Volume of tutorials, pp. TU22-TU31, 1999.

[42] Sabanovic, A., "Sliding modes in power electronics and motion control systems," in The 29th Annual Conference of the IEEE Industrial Electronics Society, IECON '03, vol. 1, pp. 997-1002, 2003.

[43] Siew-Chong Tan; Lai, Y. M.; Tse, C. K., "An Evaluation of the Practicality of Sliding Mode Controllers in DC-DC Converters and Their General Design Issues," in 37th IEEE Power Electronics Specialists Conference, PESC '06, pp. 1-7, 2006.

[44] Slotine, J. J., "Sliding Controller Design for Non-Linear Systems," Int. Journal of Control, vol. 40, no. 2, pp. 421-434, 1984.

[45] Sabanovic, A.; Sabanovic, N.; Jezernik, K.; Wada, K., "Chattering Free Sliding Modes," The Third Workshop on Variable Structure Systems and Lyapunov Design, Naples, Italy, 1994.

[46] P. Korondi, H. Hashimoto, V. Utkin, "Direct Torsion Control of Flexible Shaft based on an Observer Based Discrete-time Sliding Mode," IEEE Trans. on Industrial Electronics, no. 2, pp. 291-296, 1998.

[47] Boiko, I.; Fridman, L., "Frequency Domain Input-Output Analysis of Sliding-Mode Observers," IEEE Transactions on Automatic Control, vol. 51, no. 11, pp. 1798-1803, 2006.

[48] Comanescu, M.; Xu, L., "Sliding-mode MRAS speed estimators for sensorless vector control of induction machine," IEEE Transactions on Industrial Electronics, vol. 53, no. 1, pp. 146-153, 2005.

[49] Furuta, K.; Pan, Y., "Variable structure control with sliding sector," Automatica, vol. 36, pp. 211-228, 2000.

[50] Suzuki, S.; Pan, Y.; Furuta, K., "VS-control with time-varying sliding sector - Design and application to pendulum," Asian Journal of Control, vol. 6, no. 3, pp. 307-316, 2004.

[51] Korondi Péter, "Tensor Product Model Transformation-based Sliding Surface Design," Acta Polytechnica Hungarica, vol. 3, no. 4, pp. 23-36, 2006.

[52] Vadim Utkin, Hoon Lee, "The Chattering Analysis," EPE-PEMC Proceedings 36, 2006.

[53] Koshkouei, A. J.; Zinober, A. S. I., "Robust frequency shaping sliding mode control," IEE Proceedings - Control Theory and Applications, vol. 147, no. 3, pp. 312-320, 2000.

[54] Hashimoto, H.; Konno, Y., "Sliding surface design in the frequency domain," in Zinober, A. S. I. (ed.), Variable Structure and Lyapunov Control, Springer-Verlag, Berlin, pp. 75-84, 1994.

[55] Koshkouei, A. J.; Zinober, A. S. I., "Adaptive backstepping control of nonlinear systems with unmatched uncertainty," in Proceedings of the 39th IEEE Conference on Decision and Control, pp. 4765-4770, 2000.

[56] Kaynak, O.; Erbatur, K.; Ertugrul, M., "The fusion of computationally intelligent methodologies and sliding-mode control - A survey," IEEE Transactions on Industrial Electronics, vol. 48, no. 1, pp. 4-17, 2001.

[57] Lin, F.-J.; Shen, P.-H., "Robust Fuzzy Neural Network Sliding-Mode Control for Two-Axis Motion Control System," IEEE Transactions on Industrial Electronics, vol. 53, no. 4, pp. 1209-1225, 2006.

Chapter 3. Universal robot controller

3.1. Introduction

In the past decades, industrial robots and NC machines have become available for smaller companies. Industrial robots are programmed somewhat differently from other industrial machines such as CNC machines and lathes. The programming of NC machines is more standardized (G-code), while different programming languages and interfaces exist for different robots, even from the same manufacturer. Following standards is important in production; however, such standardization is absent in industrial robotics. Despite the standard G-code, NC machines usually do not have an appropriate port (and sometimes lack I/O capabilities) for high-level communication in a manufacturing cell. Due to these limitations of existing NC and robot controllers, many research projects have been started to develop new, open-source, flexible middleware software and hardware architectures to replace outdated controllers [1, 13].

We are the first in the field of universal controller development to combine the following two popular existing concepts in order to merge their benefits: the reliable LinuxCNC and the flexible RT-Middleware technology.

The chapter is organized as follows: Section 3.2 describes the origin of the LinuxCNC software system and Section 3.3 introduces the RT-Middleware technology. The subsequent sections present three different concepts for the hardware architecture, give an example solution with experimental results, and conclude the chapter.

3.2. RS274NGC G-code standard and LinuxCNC

The US government-sponsored public domain software systems for the numerical control of milling machines were among the very first projects developed with the first digital computers in the 1950s. In fact, the need for and the concept of universal motion controllers are not novel in industry. Universality was already described in terms of modularity, portability, extensibility, and scalability requirements two decades ago, when the development of LinuxCNC started [2]. The project was originally launched by the National Institute of Standards and Technology (NIST) in 1989 [2, 3], and the software was released into the public domain in 2000, allowing external contributors to make changes and reuse the code. The characteristics of universality remain a current topic as the available hardware elements improve and control architectures evolve. Today, LinuxCNC is a very reliable and popular open-source software system for numerical control that can be used under the General Public License.
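As a brief illustration of why G-code lends itself to standardized processing, the sketch below splits one program line (a block) into its words, each consisting of a letter and a number. It is a strongly simplified assumption of the RS274NGC syntax: expressions, parameters, and modal groups are ignored, and the function name `parse_block` is hypothetical. It is not the LinuxCNC interpreter.

```python
import re

# A word is one letter followed by a (possibly signed, possibly decimal) number.
WORD = re.compile(r"([A-Za-z])\s*([-+]?(?:\d+\.?\d*|\.\d+))")
# Comments: text in parentheses, or everything after a semicolon.
COMMENT = re.compile(r"\([^)]*\)|;.*$")


def parse_block(line):
    """Return the words of one G-code block as (letter, value) pairs."""
    text = COMMENT.sub("", line)
    return [(letter.upper(), float(value)) for letter, value in WORD.findall(text)]


if __name__ == "__main__":
    for letter, value in parse_block("G1 X10.5 Y-2 F300 (feed move)"):
        print(letter, value)
```

Because every word has the same letter-plus-number shape, the same small parser handles motion commands, coordinates, and feed rates alike, which is not the case for the vendor-specific robot languages mentioned in the introduction.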

3.3. RT-Middleware framework

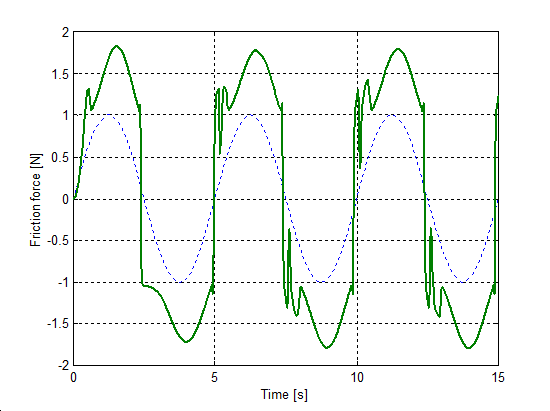

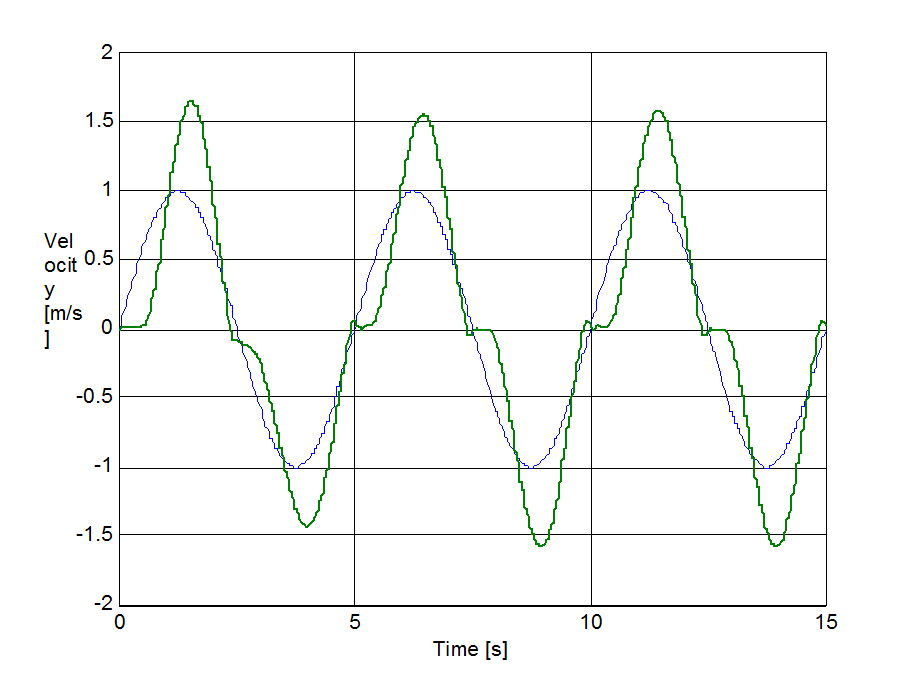

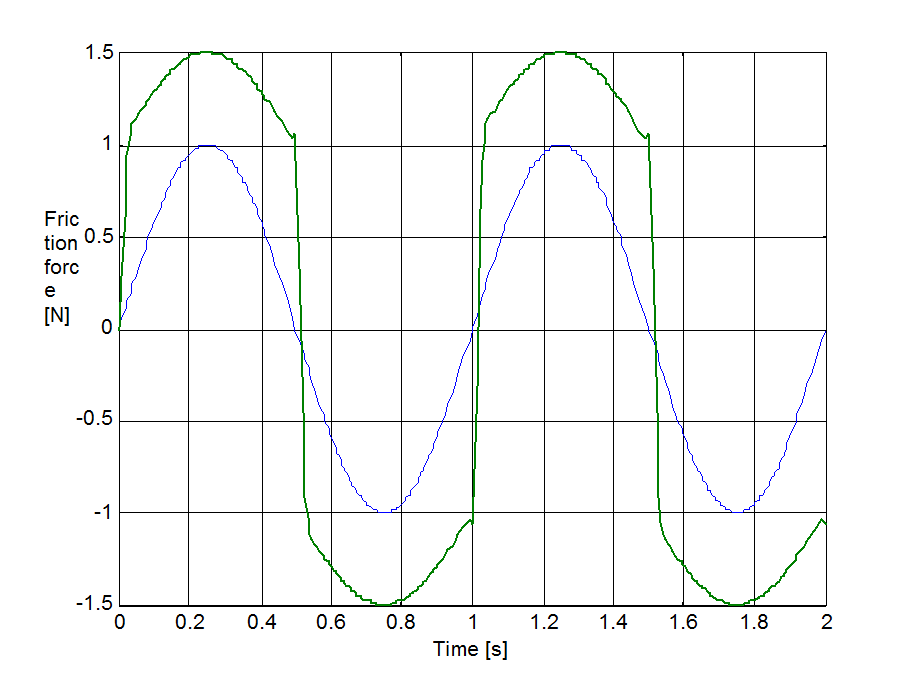

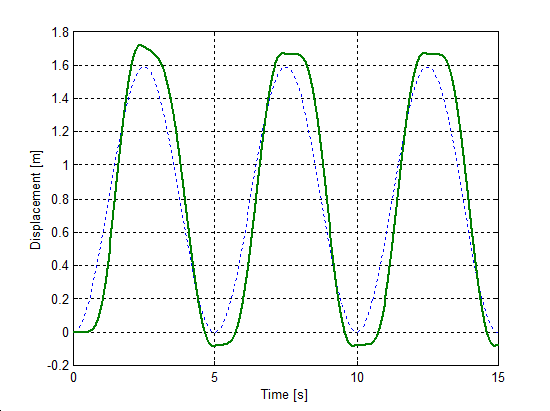

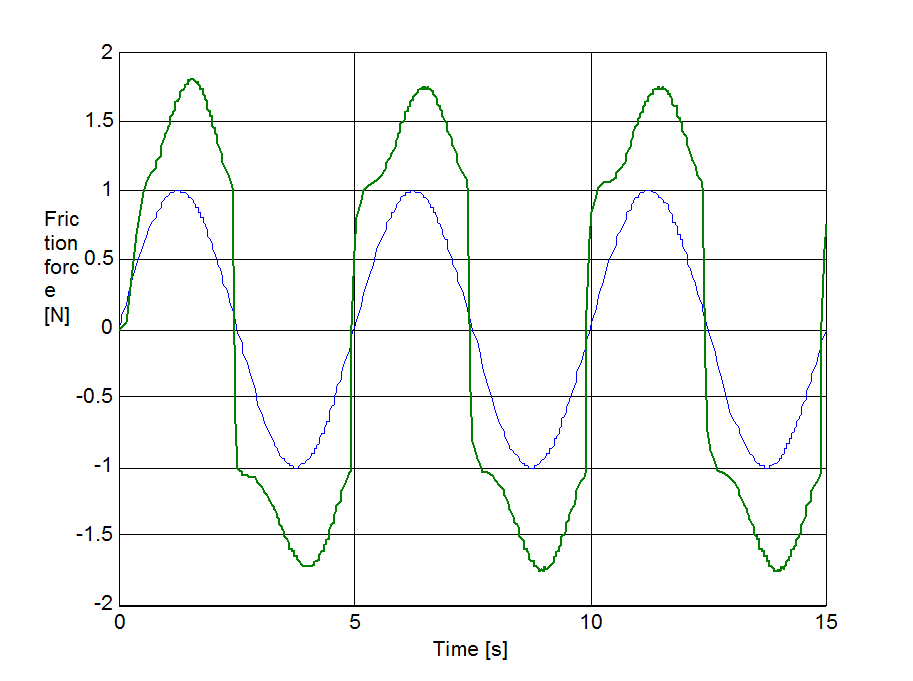

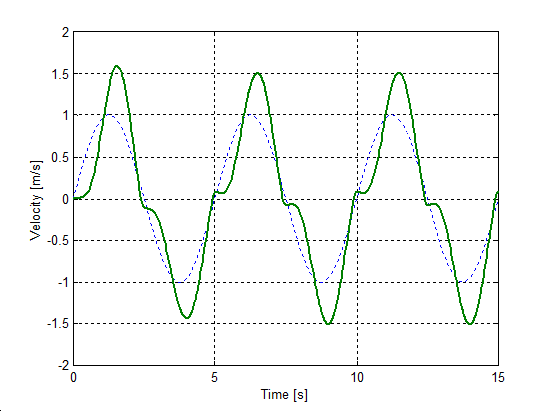

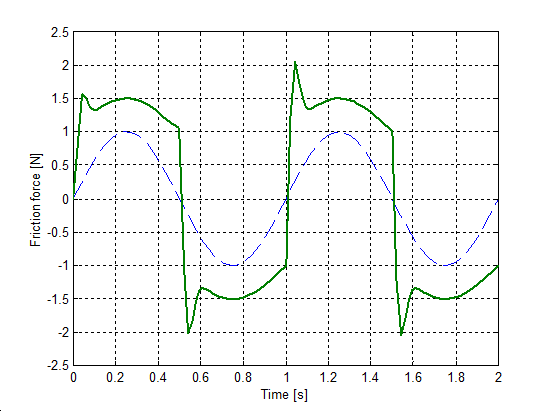

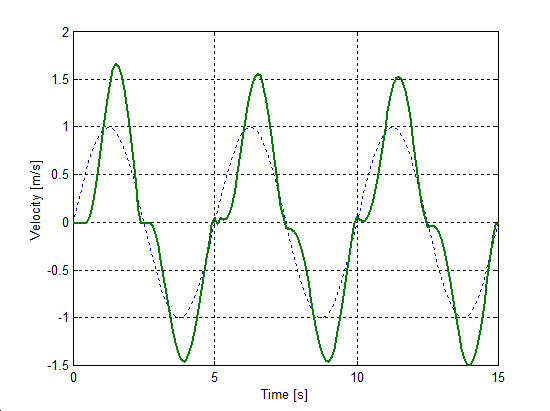

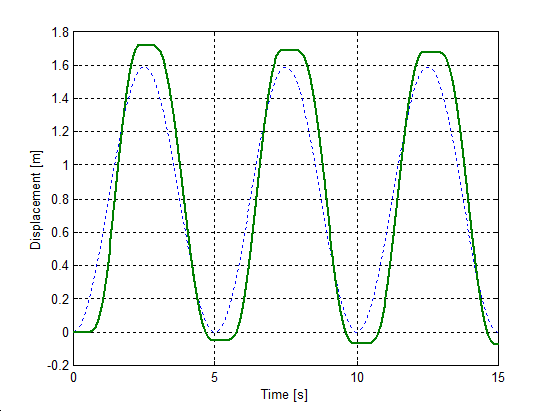

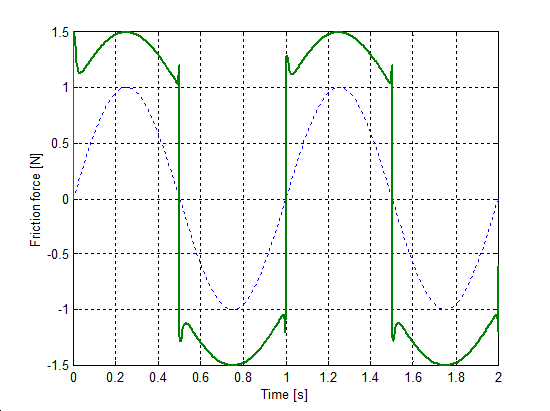

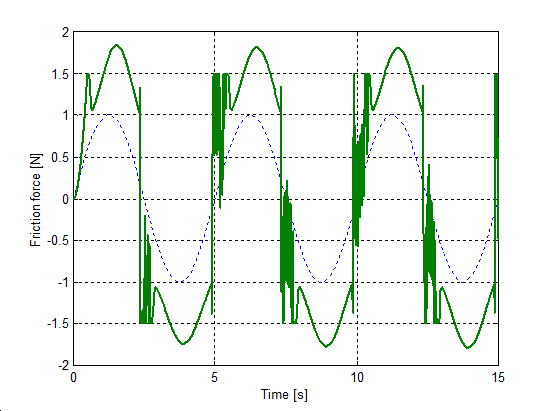

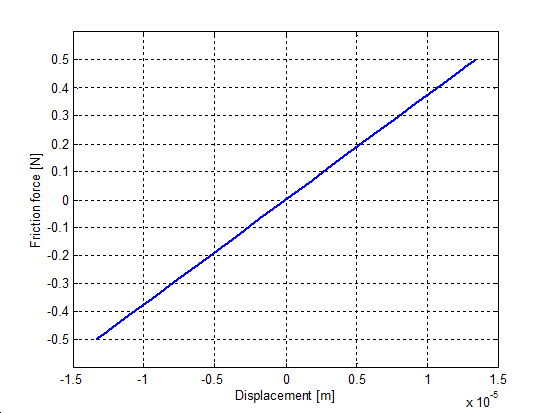

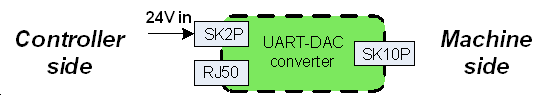

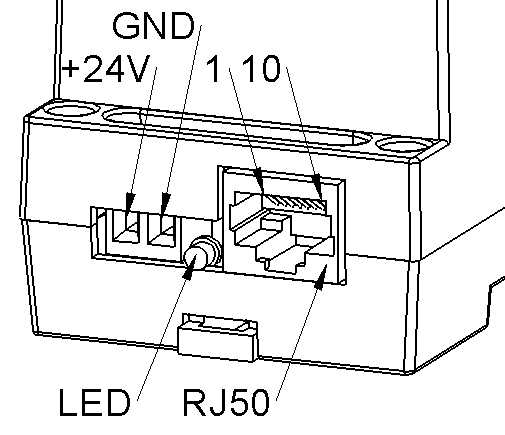

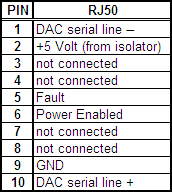

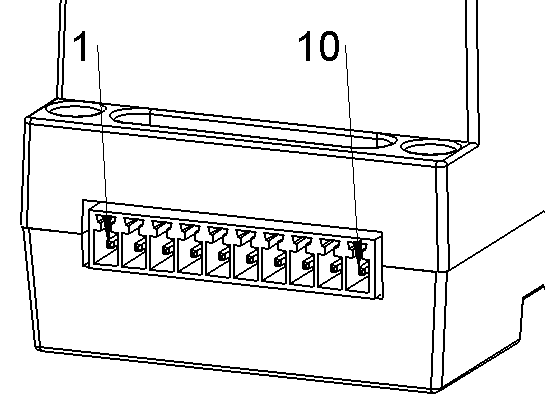

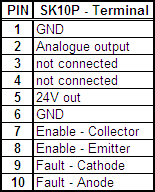

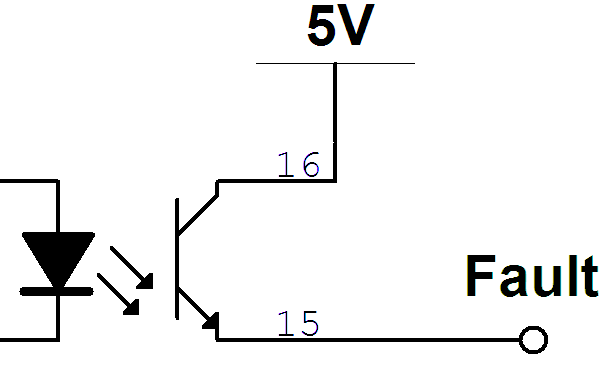

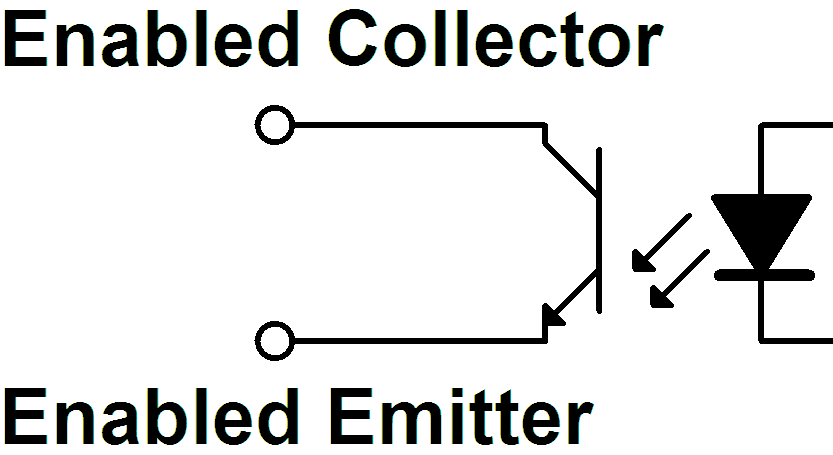

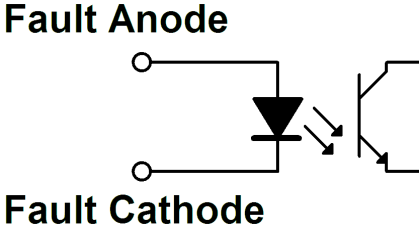

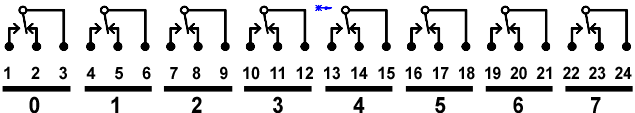

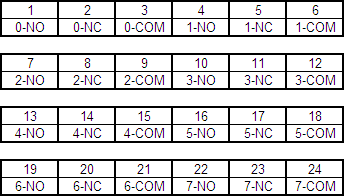

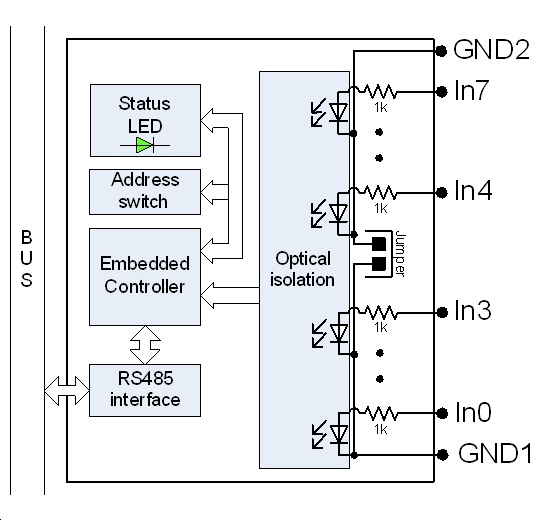

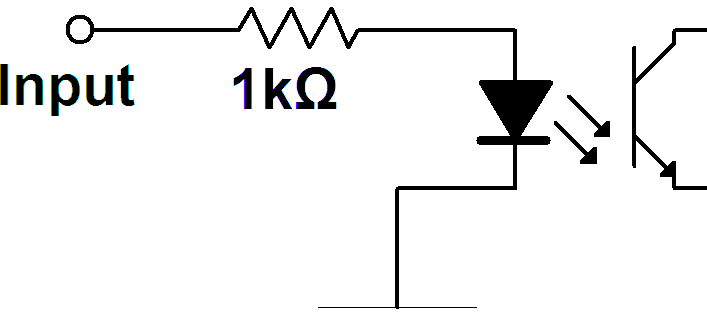

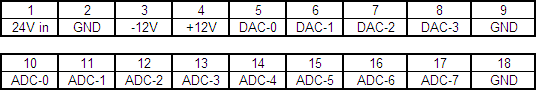

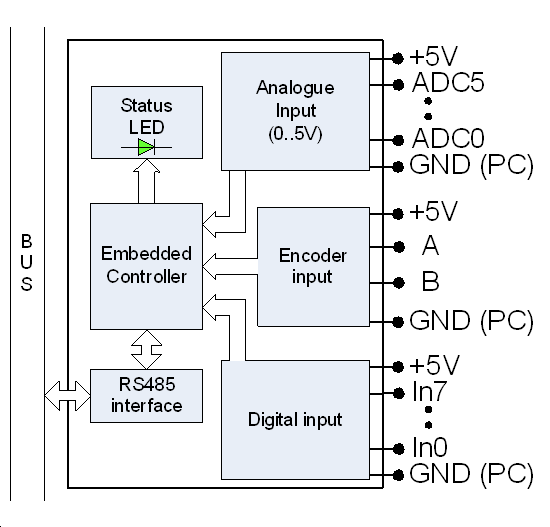

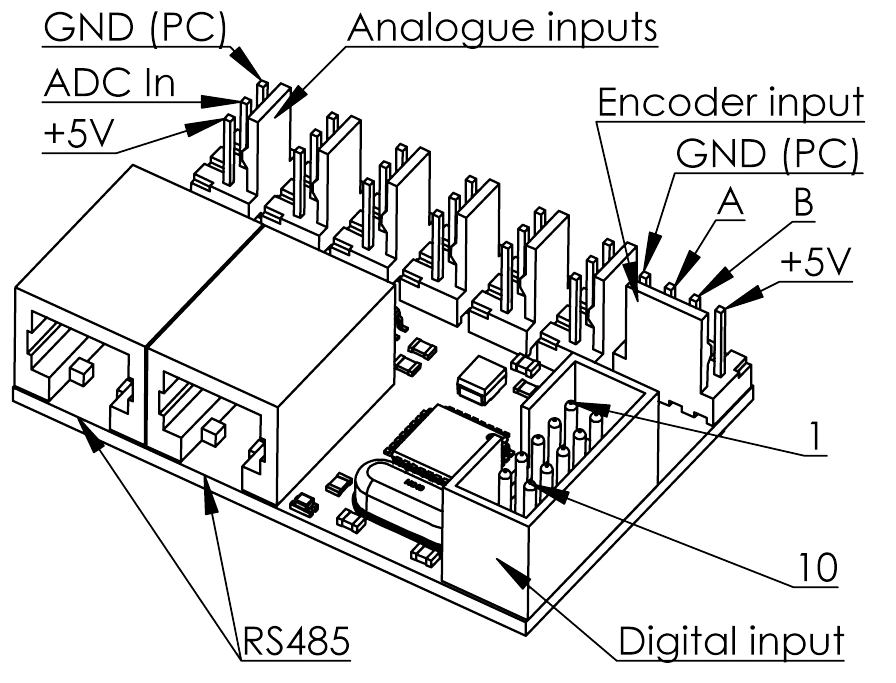

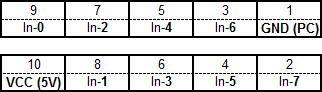

The Japanese Ministry of Economy, Trade and Industry (METI), in collaboration with the Japan Robot Association (JARA) and the National Institute of Advanced Industrial Science and Technology (AIST), started the three-year national project "Consolidation of Software Infrastructure for Robot Development" in 2002 [4]. An ICE extension was proposed in [14, 15]. With the intention of implementing robot systems that meet diversified users' needs, this project has pursued R&D of technologies that give robots and their functional parts a modular structure at the software level, and that allow system designers or integrators to build versatile robots or systems with relative ease by simply combining selected modular parts. The robot technology middleware developed as infrastructure software for implementing the proposed robot architecture is named "OpenRTM-aist" (Open Robot Technology Middleware).